nycx360

Superclocked Member

- Total Posts : 212

- Reward points : 0

- Joined: 2009/08/23 14:46:09

- Status: offline

- Ribbons : 0

Re: EVGA GeForce RTX 3080 FTW3 (3897) XOC BIOS BETA

2020/10/15 05:46:27

ajreynol

Wallzii

ajreynol

nycx360

I have 2 Strix OCs and only 1 FTW3 atm. I was going to flash the Strix BIOS today, but this BIOS saves me the hassle, so it's much appreciated now that they are both 450 watt. A 3090 BIOS would be nice since the Strix is 480 on that. However, I am still power limited. I would say the FTW3 cooler does a better job at 100% fan than the Strix cooler does, even though by looks and build the Strix OC looks more beefy and effective. I think the FTW3 card has some catching up to do compared to the Strix, but it is 40 bucks less at 809 vs 849. Hopefully we can get a true XOC BIOS.

Oddly I'm in a similar boat. I accidentally ended up with a FTW3 Ultra and a Strix OC trying to rush through a checkout through the Newegg app last week.

Now with performance likely being functionally equal at worst (I haven't tried out the FTW3 yet but I will), I'm left trying to decide how much value I place on the second HDMI 2.1 port. Strix OC is beautiful no doubt, but from the side both cards are beautiful. FTW3 provides a level of peace of mind via Step Up that the Strix simply cannot. But one less HDMI port is a hard limit that I've been meditating over since the card configurations were announced.

If I decide to do a 4K 120Hz TV AND a 4K, high refresh monitor next year, I'd have to buy some sort of HDMI switch or manually swap cables when I want to use one vs the other with the card. Where with the Strix, I can have both plugged in and not have to worry about it at all.

I guess I'll take another day or two to decide if I prefer 2x HDMI ports vs the potential Step Up to a 3090 or similar in the next 3 months. Man, if the EVGA cards had 2 HDMI ports like all the Asus and Gigabyte cards do, this would have been the easiest decision ever. SIGH.

Why do you need two HDMI 2.1 ports? Wouldn't DisplayPort give you everything you need for monitor connectivity?

No, DP doesn't have the bandwidth that HDMI 2.1 does. For anything above 4K 120, monitors that offer this require the color bit depth to be reduced, dropping image quality to compensate for the bandwidth limitations of DP. Or they have to use a compression algorithm that reduces quality some.

HDMI 2.1 doesn't have to make such compromises. As such, the question is more one of future purchase plans than current ones. At some point, I'll want to have a 4K 120Hz TV and a monitor that goes even higher...or even something that can manage 8K. We expect quite a few HDMI 2.1 monitors to be announced at CES 2021 in just a couple of months. For those, DP won't be ideal if you want to maximize their performance at their highest bitrate settings. The question is whether the day when I own (or want to own) both a TV and a monitor capable of better performance than DP can offer comes before the 4000-series cards are out (or, in the next 2 years). Other brands made the conscious decision to future proof in this area, as all the Gigabyte and Asus cards have 2 or more HDMI 2.1 ports.

I'll be meditating on that question today as I compare my FTW3 Ultra to my Strix. With this BIOS update, I expect them to be equivalent in every performance metric (unlucky bin notwithstanding). So it's just 2 HDMI ports vs the likelihood that I initiate a Step Up to a 20GB 3080 or a 3090 between now and January. It's a good problem to have, but I often find myself struggling with paralysis of analysis. Hopefully this doesn't end up being one of those times.

The bin on the FTW3 I got is very similar to the better Strix I got. I don't think either is binned. I find that most of the time launch cards have better lottery chances. For many who are asking for more power, temps will smack your clocks before power in most cases. Using a box fan blowing 50s F outside air, I can maintain between 2175-2160 on my FTW3 in a bench run, and 2160-2145 on the better Strix (it runs a bit warmer at 100% fan). Awaiting blocks. With blocks I will just shunt both cards. I don't think every card will be power limited. My 2nd Strix never hit the power limit and will not clock higher than the 2080-2100 range.

|

Reedey

New Member

- Total Posts : 21

- Reward points : 0

- Joined: 2020/10/10 04:56:12

- Status: offline

- Ribbons : 1

2020/10/15 05:56:00

No matter what I do with this bios, my card still seems to peg itself at 400W. Max power, max voltage, overclocked or not. 400W is all she will do.

Last week, on the BIOS that the card came with, I could get clean runs with +140 on the core using a portable A/C unit, but now I can't get a clean run in Time Spy with any kind of overclock at all; that's with the original BIOS as well as this one. It still runs games reliably with modest overclocks, but it will not hold more than 400W and can't get a single clean pass in 3DMark. Maybe I just lucked out in the silicon lottery and those early runs were the best this card will ever do?

12350 in Port Royal and a 19127 graphics score in Time Spy were good while they lasted, but nothing I do can get close to those scores now, even with this new BIOS :(

|

ajreynol

New Member

- Total Posts : 41

- Reward points : 0

- Joined: 2012/12/28 17:24:48

- Status: offline

- Ribbons : 0

2020/10/15 06:00:02

nycx360

The bin on the FTW3 I got is very similar to the better Strix I got. I don't think either is binned. I find that most of the time launch cards have better lottery chances. For many who are asking for more power, temps will smack your clocks before power in most cases. Using a box fan blowing 50s F outside air, I can maintain between 2175-2160 on my FTW3 in a bench run, and 2160-2145 on the better Strix (it runs a bit warmer at 100% fan). Awaiting blocks. With blocks I will just shunt both cards. I don't think every card will be power limited. My 2nd Strix never hit the power limit and will not clock higher than the 2080-2100 range.

Oh I agree completely. I have ZERO concerns that the cards will be equal performers. And now that I've read about the Ampere +TSMC news...I'm getting the feeling I won't be able to use Step Up for a 20GB 3080. Rumors had put such a launch in December, but if they're shifting the entire process to TSMC (they are), I can't imagine those cards will be available until maybe in February/March. Well beyond a Step Up window for my current card. So now the question is simply: 2 HDMI ports (Strix) vs possible Step Up to a 480W Samsung-based 3090 (EVGA). And either way...will I be looking to sell my current card to get a superior TSMC variant? Cooler, quieter cards with possibly lower power draw.

post edited by ajreynol - 2020/10/15 06:08:22

|

TheGuz4L

Superclocked Member

- Total Posts : 130

- Reward points : 0

- Joined: 2016/06/09 09:24:29

- Status: offline

- Ribbons : 0

2020/10/15 06:08:57

While I am personally waiting for the full release before applying this, just to clear up some misconceptions about what this will do for your card:

Your highest stable overclock will probably not change. What will change is the sustained clock speed throughout. For instance, my card does 2100->2070 (depending on how high temp gets) rock stable. HOWEVER, once I hit the 400W power limit, my card has to sacrifice these boost clocks and drops me down to 1985-1999 or so.

In theory, having another 50W for the graphics card means I won't hit that power wall, and my 2100-2070 clocks will stay stable throughout. That will give an overall average frame boost when gaming for long periods.

If you are running at 1440P or 1080P, you may not even be hitting the power limit wall and won't notice much difference. This really shines when you're running at 4K or upscaled 4K. In CoD Warzone at 1440P, I never hit higher than 370W or so. My clocks then always stay at 2100-2085 etc. Once I cranked up the resolution to 4K, it hit the power limit and my clocks dropped to the 1985-1999 as mentioned.

One question though: I don't mess with the voltage slider. Has this helped anyone get higher clocks? I found that after 2100 MHz, these chips are very unstable. I am able to run benchmarks at 2150+ but that's about it.
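To put the sustained-clock point into numbers, here is a toy model of the average clock over a run. It is only a sketch: the two clock levels are midpoints of the ranges quoted in this post (2100-2070 when not power limited, 1985-1999 when power limited), while the share of the run spent at the power limit is a hypothetical parameter, not a measured value.

```python
def average_clock(free_mhz: float, limited_mhz: float, limited_share: float) -> float:
    """Time-weighted average clock when part of the run is power limited."""
    if not 0.0 <= limited_share <= 1.0:
        raise ValueError("limited_share must be between 0 and 1")
    return free_mhz * (1.0 - limited_share) + limited_mhz * limited_share


FREE_MHZ = (2100 + 2070) / 2     # midpoint of the unthrottled range from the post
LIMITED_MHZ = (1985 + 1999) / 2  # midpoint of the power-limited range from the post

# The shares below are made up for illustration only.
for share in (0.0, 0.5, 1.0):
    avg = average_clock(FREE_MHZ, LIMITED_MHZ, share)
    print(f"{share:.0%} of the run at the power limit -> {avg:.1f} MHz average")
```

The peak clock is the same in every case; only the average moves, which is why a higher power limit can raise scores without raising the maximum stable overclock.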

post edited by TheGuz4L - 2020/10/15 06:12:04

|

ehabash1

iCX Member

- Total Posts : 463

- Reward points : 0

- Joined: 2019/01/03 12:02:48

- Status: offline

- Ribbons : 0

2020/10/15 06:32:48

bcavanagh

bkhan530

This is what makes EVGA one of the best AIBs. Most others would have ignored their customers but EVGA listened and delivered a new bios, good job guys keep it up!

Kind of an odd statement. If they had just made the power limit what was promised to begin with they wouldn't have needed to do this. Surely doing it right from the start is better.

Yeah, but they promised 420... and if they had started with 420 we wouldn't have the 450 BIOS. They went above and beyond and matched the more expensive Strix card.

|

SAVIAR

New Member

- Total Posts : 9

- Reward points : 0

- Joined: 2020/01/07 06:19:13

- Status: offline

- Ribbons : 0

2020/10/15 06:35:46

Yes, it provides more voltage than the default values, and it made the OC more stable. But temps will be higher because of the higher voltage.

|

jankerson

SSC Member

- Total Posts : 901

- Reward points : 0

- Joined: 2017/07/13 06:50:53

- Status: offline

- Ribbons : 1

2020/10/15 06:59:55

ty_ger07

jankerson

Well 1st off thanks a bunch.

However I must have the worst piece of silicon I could get...

ZERO, NADDA, ZIPPO improvement in clocks.

My card will not do 2100 period, no matter what will not do it....

I even lost 10 MHz on clock in Port Royal and Superposition.

This is depressing.... I gained nothing....

I think I got a bum card....

As I posted on the previous page:

"An increased power limit won't make a previously unstable frequency suddenly stable. Never will. Never, ever. That is a silicon quality thing, and also depends on voltage and temperature and signal integrity and all sorts of other things which power limit can't fix.

An increased power limit affects how much the GPU will boost and how soon it will start tapering off to lower boosts. That's it.

I think that you will see that at the SAME over clock which was previously stable, your benchmark scores will be higher. Not because the maximum stable frequency is higher. Instead, because the average frequency is higher."

I worked back from scratch on the OC. I figured that out on my own: higher scores at lower clock speeds due to more stable numbers. Was working on it early this morning. Still wish I could get higher clocks out of this card though. Oh well, luck of the draw I suppose. Silicon lottery.

i9 9900K @ 5.0 GHz, NH D15, 32 GB GSKILL Trident Z RGB, AORUS Z390 MASTER, EVGA RTX 3080 FTW3 Ultra, Samsung 970 EVO Plus 500GB, Samsung 860 EVO 1TB, Samsung 860 EVO 500GB, ASUS ROG Swift PG279Q, Steel Series APEX PRO, Logitech Gaming Pro Mouse, CM Master Case 5, Corsair AXI 1600W. i7 8086K, AORUS Z370 Gaming 5, 16GB GSKILL RJV 3200, EVGA 2080TI FTW3 Ultra, Samsung 970 EVO 250GB, (2)SAMSUNG 860 EVO 500 GB, Acer Predator XB1 XB271HU, Corsair HXI 850W. i7 8700K, AORUS Z370 Ultra Gaming, 16GB 16GB DDR4 3000, EVGA 1080Ti FTW3 Ultra, Samsung 960 EVO 250GB, Corsair HX 850W.

|

ajreynol

New Member

- Total Posts : 41

- Reward points : 0

- Joined: 2012/12/28 17:24:48

- Status: offline

- Ribbons : 0

2020/10/15 07:10:02

TheGuz4L

While I am personally waiting for the full release before applying this, just to clear up some misconceptions about what this will do for your card:

Your highest stable overclock will probably not change. What will change is the sustained clockspeed throughout. For instance, my card does 2100->2070 (depending on how high temp gets) rock stable. HOWEVER once I hit the 400W power limit, my card has to sacrifice these boost clocks, and drops me down to 1985-1999 or so.

In theory, having another 50W for the graphics card will not have me hit that power wall and my 2100-2070 clocks will stay stable throughout. That will give an overall average frame boost when gaming for long periods.

If you are running at 1440P or 1080P, you may not even be hitting the power limit wall and won't notice much difference. This really shines when you're running at 4K or upscaled 4K. In CoD Warzone at 1440P, i never hit higher than 370w or so. My clocks then always stay at 2100-2085 etc. Once I cranked up the resolution to 4K, it hit the power limit and my clocks dropped to the 1985-1999 as mentioned.

One question though, I don't mess with the voltage slider. Has this helped anyone get higher clocks? I found after 2100Mhz, these chips are very unstable. I am able to run benchmarks at 2150+ but thats about it.

For what it's worth, I'm gaming at 2115 MHz locked. Note the core clock. This was the end of a 2-hour gaming session. Dips are me tabbing out to check temps and clocks.

Edit: this image is really small for some reason. Here's a direct link: https://i.imgur.com/WoA88Gl.png

|

turboD

New Member

- Total Posts : 91

- Reward points : 0

- Joined: 2007/02/14 11:10:44

- Status: offline

- Ribbons : 0

2020/10/15 07:27:11

Make sure that Afterburner is controlling all 3 fans on that FTW3... I read some reports that one fan is not controlled by the Afterburner curve, so you may only have 2 fans running fast.

|

johnnytimmer1991

New Member

- Total Posts : 3

- Reward points : 0

- Joined: 2020/09/25 18:30:58

- Status: offline

- Ribbons : 0

2020/10/15 07:27:32

Can you detail the new normal BIOS in comparison to the original?

|

aka_STEVE_b

EGC Admin

- Total Posts : 17692

- Reward points : 0

- Joined: 2006/02/26 06:45:46

- Location: OH

- Status: offline

- Ribbons : 69

2020/10/15 07:36:22

.

AMD RYZEN 9 5900X 12-core cpu~ ASUS ROG Crosshair VIII Dark Hero ~ EVGA RTX 3080 Ti FTW3~ G.SKILL Trident Z NEO 32GB DDR4-3600 ~ Phanteks Eclipse P400s red case ~ EVGA SuperNOVA 1000 G+ PSU ~ Intel 660p M.2 drive~ Crucial MX300 275 GB SSD ~WD 2TB SSD ~CORSAIR H115i RGB Pro XT 280mm cooler ~ CORSAIR Dark Core RGB Pro mouse ~ CORSAIR K68 Mech keyboard ~ HGST 4TB Hd.~ AOC AGON 32" monitor 1440p @ 144Hz ~ Win 10 x64

|

chase10784

New Member

- Total Posts : 99

- Reward points : 0

- Joined: 2020/09/25 18:25:00

- Status: offline

- Ribbons : 0

2020/10/15 07:41:04

Honestly I wouldn't mind seeing actual gameplay FPS differences with the new 450W OC BIOS versus the previous OC BIOS. Does it improve frames by 2 or 10 or 15? It makes a difference to whether it's worth doing or not. I don't have a card, but the information would be valuable for when I'm able to get one in 2025 at some point.

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21174

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

2020/10/15 07:51:20

Reedey

No matter what I do with this bios, my card still seems to peg itself at 400W. Max power, max voltage, overclocked or not. 400W is all she will do.

Did you power cycle your computer?

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

Wallzii

New Member

- Total Posts : 69

- Reward points : 0

- Joined: 2020/09/07 18:09:30

- Status: offline

- Ribbons : 0

2020/10/15 08:49:29

ajreynol

No, DP doesn't have the bandwidth that HDMI 2.1 does. For anything above 4K 120, monitors that offer this require the color bit depth to be reduced, dropping image quality to compensate for the bandwidth limitations of DP.

I should have been more specific, with DP being more or less equivalent to a HDMI 2.1 port up to 4K@120Hz with HDR (where the spec maxes out). Wouldn't this be enough, or does the extra 24Hz of a 144Hz display matter that much? Also, a 3080 wouldn't be hitting the refresh rate limits of these types of monitors anyways, so is this even something to worry about (120Hz vs. 144Hz)? Just curious. At the end of the day, it isn't a bad problem to have. I'm still trying to get my hands on my 3080 (9/17 9:07AM for me).
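The bandwidth question can be checked with rough arithmetic. The sketch below assumes the card's DisplayPort is version 1.4 (HBR3), which this thread does not state; the link rates and line codings are the published figures for DP 1.4 and HDMI 2.1 as I understand them. It counts active pixels only and ignores blanking, so real requirements are somewhat higher than the numbers printed.

```python
# Usable payload after line coding, in Gbps.
DP14_PAYLOAD_GBPS = 32.4 * 8 / 10     # assumed DP 1.4: HBR3 x 4 lanes, 8b/10b coding
HDMI21_PAYLOAD_GBPS = 48.0 * 16 / 18  # HDMI 2.1: 48 Gbps FRL, 16b/18b coding


def pixel_rate_gbps(width: int, height: int, refresh_hz: int, bits_per_channel: int) -> float:
    """Uncompressed RGB data rate for active pixels only (blanking ignored)."""
    return width * height * refresh_hz * bits_per_channel * 3 / 1e9


def verdict(rate_gbps: float, payload_gbps: float) -> str:
    """Say whether a mode fits the link without compression or subsampling."""
    return "fits" if rate_gbps <= payload_gbps else "needs DSC or reduced color"


MODES = {
    "4K 120Hz 8-bit": (3840, 2160, 120, 8),
    "4K 120Hz 10-bit": (3840, 2160, 120, 10),
    "4K 144Hz 10-bit": (3840, 2160, 144, 10),
}

for name, (w, h, hz, bpc) in MODES.items():
    rate = pixel_rate_gbps(w, h, hz, bpc)
    print(f"{name}: {rate:.2f} Gbps | DP 1.4: {verdict(rate, DP14_PAYLOAD_GBPS)}"
          f" | HDMI 2.1: {verdict(rate, HDMI21_PAYLOAD_GBPS)}")
```

Under these assumptions, 4K 120Hz at 8-bit squeezes into DP 1.4, while 10-bit at the same refresh rate does not without compression or reduced color, which is the compromise described in the quoted post.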

post edited by Wallzii - 2020/10/15 08:55:25

EVGA RTX 3080 FTW3 Ultra | Ryzen 5 3600 | 16GB Corsair Vengeance LPX 3600MHz | Sabrent Rocket 1TB NVMe PCIe M.2 2280 SSD | 2x Samsung 860 EVO 1TB SATA SSD | Corsair RM750x | Dark Rock Pro 4 | Meshify C

|

jedixjarf

New Member

- Total Posts : 25

- Reward points : 0

- Joined: 2007/10/20 20:48:20

- Status: offline

- Ribbons : 0

2020/10/15 08:49:41

Any way we can get the actual bios rom files?

5950x | x570 Dark | 3090 Kingpin HC

|

markuaw1

iCX Member

- Total Posts : 329

- Reward points : 0

- Joined: 2017/12/15 17:17:39

- Status: offline

- Ribbons : 1

2020/10/15 09:08:42

jedixjarf

Any way we can get the actual bios rom files?

Yes, you can flash it with their EXE and then save it with NVFlash.

|

punkasscrab

New Member

- Total Posts : 10

- Reward points : 0

- Joined: 2020/10/05 21:27:00

- Status: offline

- Ribbons : 0

2020/10/15 09:29:59

I gained 200 Port Royal points with the beta BIOS and no other changes. The card drew 448 watts, and GPU-Z still reports the power limit as what is throttling, but now it is striped rather than a constant limit. I love this card.

|

justin_43

CLASSIFIED Member

- Total Posts : 3326

- Reward points : 0

- Joined: 2008/01/04 18:54:42

- Status: offline

- Ribbons : 7

2020/10/15 09:30:31

This is great news. Thank you EVGA. Now please release a 500w+ for 3090 FTW. (And let me buy one. lol) Great work guys!

ASUS RTX 4090 TUF OC • Intel Core i7 12700K • MSI Z690 Edge WiFi • 32GB G.Skill Trident Z • EVGA 1600T2 PSU 3x 2TB Samsung 980 Pros in RAID 0 • 250GB Samsung 980 Pro • 2x WD 2TB Blacks in RAID 0 • Lian-Li PC-D600WB EK Quantum Velocity • EK Quantum Vector² • EK Quantum Kinetic TBE 200 D5 • 2x Alphacool 420mm Rads LG CX 48" • 2x Wasabi Mango UHD430s 43" • HP LP3065 30" • Ducky Shine 7 Blackout • Logitech MX Master Sennheiser HD660S w/ XLR • Creative SB X-Fi Titanium HD • Drop + THX AAA 789 • DarkVoice 336SE OTL

|

Verdalix

New Member

- Total Posts : 4

- Reward points : 0

- Joined: 2012/08/05 22:10:17

- Status: offline

- Ribbons : 0

2020/10/15 09:44:22

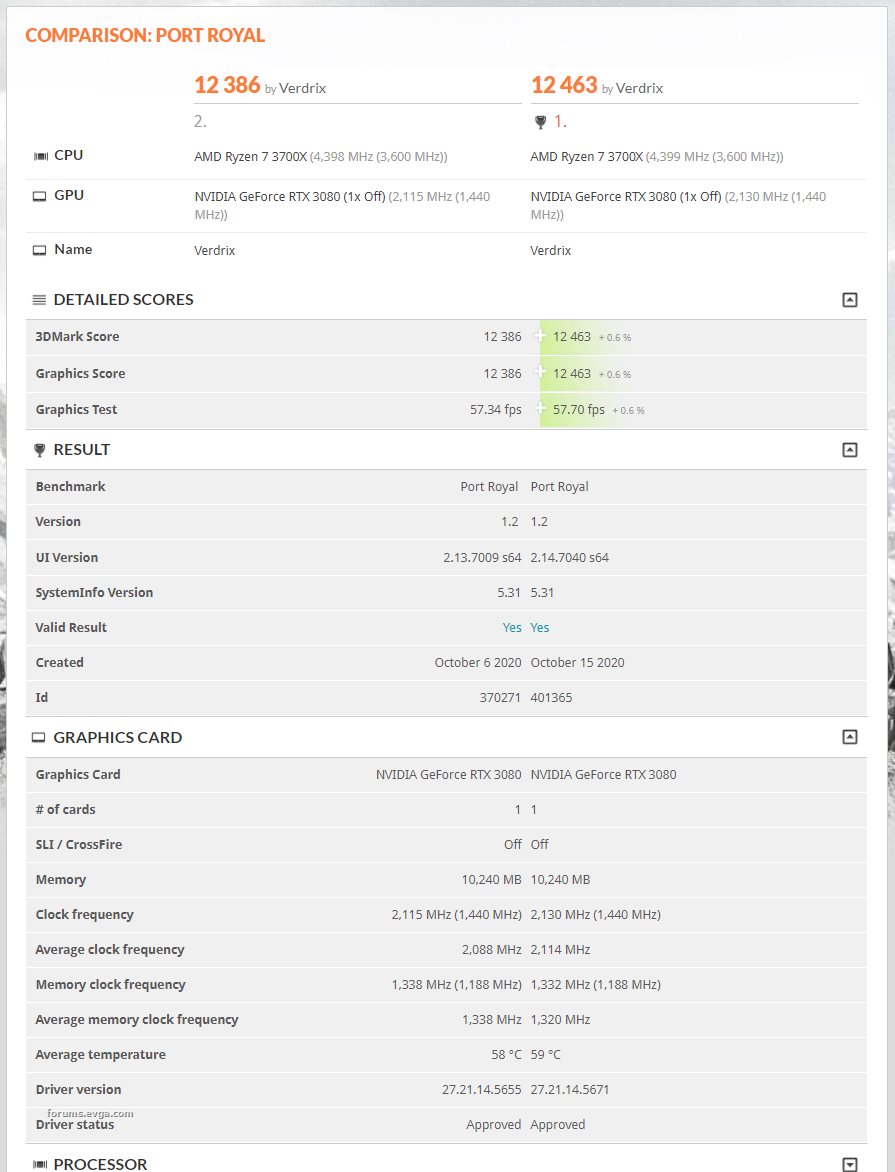

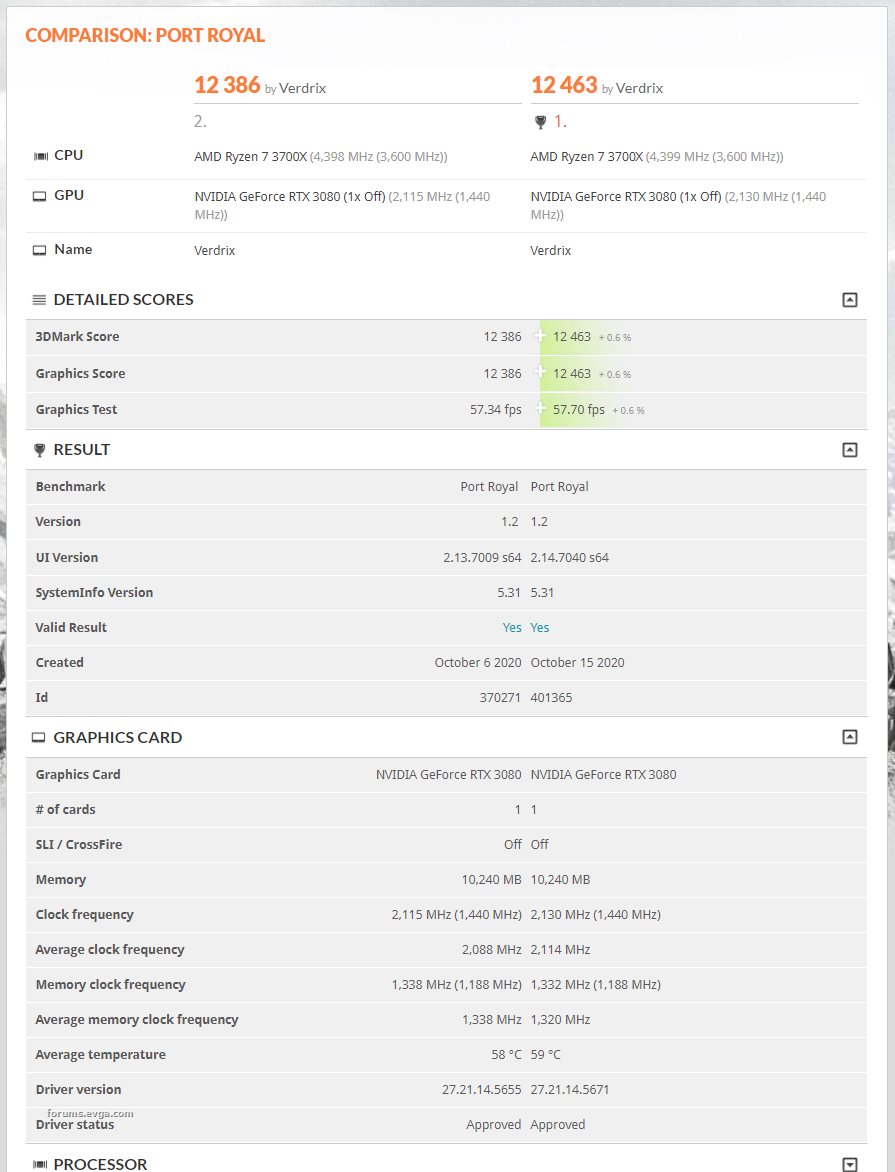

Here's a comparison on my setup, stock vs beta BIOS. I kept the offsets the same for each BIOS and maxed out the power/voltage limit for each. Clock was stable at 2114 MHz average compared to 2088 MHz previously. Results look promising and temperatures are looking good (all fans maxed out for these runs). Will see if I can try to overclock it a bit more and keep it stable.

Core clock offset: +190

Mem clock offset: +1150

|

ReZpawN

iCX Member

- Total Posts : 304

- Reward points : 0

- Joined: 2020/09/17 16:22:46

- Status: offline

- Ribbons : 0

2020/10/15 09:48:19

Desaccorde

So, what about the temperatures under humanly acceptable fan speeds? Does the air cooler hold up or we need at least the Hybrid Kit?

Depends on your case and fan setup. I have a Fractal Define R5, which has sound-deadening lining inside the case, and I can't hear this card at all over my case fans. I have it set to ramp the fans up to 100% as it gets to 65°C, but it never does, and I have power and voltage maxed out with a good OC.

|

F5alcon

New Member

- Total Posts : 74

- Reward points : 0

- Joined: 2020/09/01 13:10:40

- Status: offline

- Ribbons : 0

2020/10/15 10:15:20

ajreynol

And now that I've read about the Ampere +TSMC news...I'm getting the feeling I won't be able to use Step Up for a 20GB 3080. Rumors had put such a launch in December, but if they're shifting the entire process to TSMC (they are), I can't imagine those cards will be available until maybe in February/March. Well beyond a Step Up window for my current card.

So now the question is simply: 2 HDMI ports (Strix) vs possible Step Up to a 480W Samsung-based 3090 (EVGA).

And either way...will I be looking to sell my current card to get a superior TSMC variant? Cooler, quieter cards with possibly lower power draw.

Don't be so sure that the 20GB and the 7nm are going to be the same upgrade. We could easily see the 20GB 8nm in Dec and a 3080/3090 Super next year with 7nm and memory that actually clocks to 21.5Gbps. The other possibility is that the 7nm card is a new Titan or A series workstation and not related to the current cards.

|

zogge

New Member

- Total Posts : 68

- Reward points : 0

- Joined: 2020/10/12 02:59:38

- Status: offline

- Ribbons : 0

2020/10/15 10:55:54

Maybe a stupid question, but does this BIOS work on a non-Ultra card (FTW3) as well?

|

TheGuz4L

Superclocked Member

- Total Posts : 130

- Reward points : 0

- Joined: 2016/06/09 09:24:29

- Status: offline

- Ribbons : 0

2020/10/15 11:28:44

Verdalix

Here's a comparison on my setup, stock vs beta BIOS. I kept the offsets the same for each BIOS and maxed out the power/voltage limit for each. Clock was stable at 2114 MHz average compared to 2088 MHz previously. Results look promising and temperatures are looking good (all fans maxed out for these runs). Will see if I can try to overclock it a bit more and keep it stable.

Core clock offset: +190

Mem clock offset: +1150

Is your memory really working that high? Once I go past +600Mhz my FPS drops. It of course works fine because it is ECC ram now, but I lose frames. Curious if you tried lower memory speeds if your score would be higher.

|

NexusSix

New Member

- Total Posts : 42

- Reward points : 0

- Joined: 2006/09/18 05:06:19

- Status: offline

- Ribbons : 0

2020/10/15 11:46:55

TheGuz4L

Is your memory really working that high? Once I go past +600Mhz my FPS drops. It of course works fine because it is ECC ram now, but I lose frames. Curious if you tried lower memory speeds if your score would be higher.

It can't be, I'm beating his score with +130 on the core and +900 on the memory (above 900 it flakes out and protection starts kicking in). For reference I got a 12486 with +130/900. 70 more MHz on the core should be at least 100 points higher than that I'd imagine.

|

Verdalix

New Member

- Total Posts : 4

- Reward points : 0

- Joined: 2012/08/05 22:10:17

- Status: offline

- Ribbons : 0

2020/10/15 11:47:33

TheGuz4L

Verdalix

Here's a comparison on my setup, stock vs beta BIOS. I kept the offsets the same for each BIOS and maxed out the power/voltage limit for each. Clock was stable at 2114 MHz average compared to 2088 MHz previously. Results look promising and temperatures are looking good (all fans maxed out for these runs). Will see if I can try to overclock it a bit more and keep it stable.

Core clock offset: +190

Mem clock offset: +1150

Is your memory really working that high? Once I go past +600Mhz my FPS drops. It of course works fine because it is ECC ram now, but I lose frames. Curious if you tried lower memory speeds if your score would be higher.

I tested with different memory clocks back when I had the stock BIOS. I'll need to dig up the results that I have written down somewhere, but I saw continual average FPS increases up until the +1150 offset. Beyond that I saw diminishing returns and FPS started dropping due to error correction.

|

Dabadger84

CLASSIFIED Member

- Total Posts : 3426

- Reward points : 0

- Joined: 2018/05/11 23:49:52

- Location: de_Overpass, USA

- Status: offline

- Ribbons : 10

2020/10/15 11:49:44

I'm curious: Why is it that some people's GPUz is showing GPU power numbers in Watts... and mine looks like this:

And right as I'm posting this a GPUz update came up, mayhaps that'll fix it...

Edit: Adding someone's post earlier that has what I'm talkin' about:

bloodshot45

post edited by Dabadger84 - 2020/10/15 11:56:15

ModRigs: https://www.modsrigs.com/detail.aspx?BuildID=42891 Specs:5950x @ 4.7GHz 1.3V - Asus Crosshair VIII Hero - eVGA 1200W P2 - 4x8GB G.Skill Trident Z Royal Silver @ 3800 CL14Gigabyte RTX 4090 Gaming OC w/ Core: 2850MHz @ 1000mV, Mem: +1500MHz - Samsung Odyssey G9 49" Super-Ultrawide 240Hz Monitor

|

Verdalix

New Member

- Total Posts : 4

- Reward points : 0

- Joined: 2012/08/05 22:10:17

- Status: offline

- Ribbons : 0

2020/10/15 11:55:06

NexusSix

TheGuz4L

Is your memory really working that high? Once I go past +600Mhz my FPS drops. It of course works fine because it is ECC ram now, but I lose frames. Curious if you tried lower memory speeds if your score would be higher.

It can't be, I'm beating his score with +130 on the core and +900 on the memory (above 900 it flakes out and protection starts kicking in).

For reference I got a 12486 with +130/900.

70 more MHz on the core should be at least 100 points higher than that I'd imagine.

I can re-test to confirm, but back when I was testing the different offsets, my scores dropped whenever I started reducing the offset from +1150. I'll see if I can sweep through different offsets and record all the results once I get home.

|

arestavo

CLASSIFIED ULTRA Member

- Total Posts : 6916

- Reward points : 0

- Joined: 2008/02/06 06:58:57

- Location: Through the Scary Door

- Status: offline

- Ribbons : 76

2020/10/15 12:04:21

Dabadger84

I'm curious: Why is it that some people's GPUz is showing GPU power numbers in Watts... and mine looks like this:

And right as I'm posting this a GPUz update came up, mayhaps that'll fix it...

Edit: Adding someone's post earlier that has what I'm talkin' about:

bloodshot45

Select "Nvidia BIOS" instead of "General" at the top.

|

Dabadger84

CLASSIFIED Member

- Total Posts : 3426

- Reward points : 0

- Joined: 2018/05/11 23:49:52

- Location: de_Overpass, USA

- Status: offline

- Ribbons : 10

2020/10/15 12:07:27

arestavo

Dabadger84

I'm curious: Why is it that some people's GPUz is showing GPU power numbers in Watts... and mine looks like this:

And right as I'm posting this a GPUz update came up, mayhaps that'll fix it...

Edit: Adding someone's post earlier that has what I'm talkin' about:

bloodshot45

Select "Nvidia BIOS" instead of "General" at the top.

:-D I'm special I never even noticed there was a dropdown there.

ModRigs: https://www.modsrigs.com/detail.aspx?BuildID=42891 Specs:5950x @ 4.7GHz 1.3V - Asus Crosshair VIII Hero - eVGA 1200W P2 - 4x8GB G.Skill Trident Z Royal Silver @ 3800 CL14Gigabyte RTX 4090 Gaming OC w/ Core: 2850MHz @ 1000mV, Mem: +1500MHz - Samsung Odyssey G9 49" Super-Ultrawide 240Hz Monitor

|

Reedey

New Member

- Total Posts : 21

- Reward points : 0

- Joined: 2020/10/10 04:56:12

- Status: offline

- Ribbons : 1

2020/10/15 12:55:48

ty_ger07

Reedey

No matter what I do with this bios, my card still seems to peg itself at 400W. Max power, max voltage, overclocked or not. 400W is all she will do.

Did you power cycle your computer?

Yep, the sliders update to allow 118% max power, but the card runs at exactly the same power levels as before, just bouncing around 390-400W during a run. I am back to the original BIOS and am getting better scores at lower overclocks now. My overclocking efforts have been stunted, but the real-world daily performance of the card is actually better. So it's not all bad :)
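The 118% slider and the wattages mentioned in this thread can be cross-checked with simple arithmetic. A minimal sketch, assuming the slider scales a fixed default power target linearly: the 450W, 400W and 118% figures come from posts in this thread, while the default target is only inferred from them and is not an official spec.

```python
def default_target_watts(max_watts: float, max_slider_pct: float) -> float:
    """Default (100%) power target implied by the maximum limit and slider value."""
    return max_watts / (max_slider_pct / 100.0)


def slider_pct_for(watts: float, default_watts: float) -> float:
    """Slider percentage that corresponds to a given wattage."""
    return watts / default_watts * 100.0


# 450W limit at 118% slider, both taken from the thread; result is about 381W.
default_w = default_target_watts(450.0, 118.0)
print(f"implied default target: {default_w:.1f} W")

# The 400W ceiling reported above lands at roughly 105% on that scale.
print(f"400 W corresponds to about {slider_pct_for(400.0, default_w):.1f}% on the slider")
```

If that assumption holds, a card that stays pinned at 390-400W behaves as if it were still capped near the old maximum, even though the slider itself now goes to 118%.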

post edited by Reedey - 2020/10/15 13:51:49

|