squall-leonhart

CLASSIFIED Member

- Total Posts : 2904

- Reward points : 0

- Joined: 2009/07/27 19:57:03

- Location: Australia

- Status: offline

- Ribbons : 24

Re: Dual 8 pin PCIE cable?

2019/10/26 03:45:31

(permalink)

Prior issues with HXi power supplies and 2080ti indicate that it is best to either use the HXi configured to single rail, or run two cables from PSU to card and use multi rail.

History has demonstrated a 2080ti can overload the unit in Multirail mode.

CPU:Intel Xeon x5690 @ 4.2Ghz, Mainboard:Asus Rampage III Extreme, Memory:48GB Corsair Vengeance LP 1600

Video:EVGA Geforce GTX 1080 Founders Edition, NVidia Geforce GTX 1060 Founders Edition

Monitor:BenQ G2400WD, BenQ BL2211, Sound:Creative XFI Titanium Fatal1ty Pro

SDD:Crucial MX300 275, Crucial MX300 525, Crucial MX300 1000

HDD:500GB Spinpoint F3, 1TB WD Black, 2TB WD Red, 1TB WD Black

Case:NZXT Phantom 820, PSU:Seasonic X-850, OS:Windows 7 SP1

Cooler: ThermalRight Silver Arrow IB-E Extreme

|

squall-leonhart

CLASSIFIED Member

- Total Posts : 2904

- Reward points : 0

- Joined: 2009/07/27 19:57:03

- Location: Australia

- Status: offline

- Ribbons : 24

Re: Dual 8 pin PCIE cable?

2019/10/26 03:47:24

(permalink)

bob16314

Don't forget the card can get up to 75W from the PCIe slot itself, above and beyond the rated 150W from an 8-pin and 75W from a 6-pin PCIe connector.

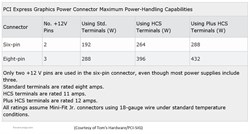

Your unit also uses HCS terminals (High-Current System for the MB, CPU and PCIe connectors) and related components..Each HCS terminal is rated to carry up to 11 Amps of current (132 Watts at 12V), for a total of 396 Watts across the three 12V wires in an 8-pin connector.

Very nice box there, by the way

2080 Tis can have sudden spikes of 380 W or more. You need high-precision equipment to capture them, but they have blown up power supplies and tripped OCP in otherwise fine UPS setups.

|

kevinc313

CLASSIFIED ULTRA Member

- Total Posts : 5004

- Reward points : 0

- Joined: 2019/02/28 09:27:55

- Status: offline

- Ribbons : 22

Re: Dual 8 pin PCIE cable?

2019/10/26 08:46:45

(permalink)

DeadlyMercury

Graphite8five

bob16314

My reasoning is that they wouldn't make them and include them with PSUs if they weren't rated for the load (which they are)..Many people use them to reduce cable clutter and for aesthetic reasons.

I think you are right. I don't know why separate PCIE cables are recommended here so much, especially if they are single cable dual 8 pin cables.

Because fewer wires means more current in each wire, more heat, and more stress on the connector. Fewer wires also means more resistance and more voltage drop.

By specification, a 6-pin connector needs 4 power wires and an 8-pin connector needs 6 power wires (3 12V + 3 ground), because there is a maximum current that a wire can carry safely. So the number of connectors on a GPU depends on how much current the card needs: one 8-pin connector is rated for 150 W, which means a single wire carries about 4 A, so if your card needs 300 W, it should have two 8-pin connectors. If it needs more (like the Kingpin, with 360 W average and 520 W max), you need a third connector.

But of course these safety standards don't mean that if you draw 370 W (like a 2080 Ti FTW3) through two 8-pin connectors, your card will explode or something :) But if you have a single 8-pin cable with the same power draw, the current in a single wire is up to 10 A, which is way too much. That wire should be twice as thick, or it will heat up and put huge stress on the connector. Some day the connector will burn up or lose conductivity, so your card will suffer from voltage drop, and that will affect card stability.

This is generally correct. There are very real electrical engineering specs rating cables for current because of heating and voltage drop. This is a pretty big concern for low-voltage DC / high-current power delivery in many applications. The common solution for this in the PC space is multiple smaller wires; it scales well and is actually the less bulky solution because of how derating works. USE THE TWO CABLES. Even if you could get a special single cable made that used correctly rated thicker wire and split into two 8-pin connectors, you would still have the single connector at the PSU that would be overwhelmed.
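For anyone who wants to check the per-wire numbers quoted above, here is a rough sketch. It assumes the cable power is shared evenly across three +12V wires per cable, that the rail sits at exactly 12 V, and that nothing comes from the PCIe slot; the function name `per_wire_current` is made up for illustration.

```python
def per_wire_current(power_w, cables, wires_per_cable=3, volts=12.0):
    """Average current in each +12V wire, assuming perfectly even sharing."""
    total_amps = power_w / volts
    return total_amps / (cables * wires_per_cable)


# 150 W through one 8-pin cable: the "about 4 A per wire" figure
print(round(per_wire_current(150, cables=1), 2))   # 4.17

# 370 W split over two separate cables
print(round(per_wire_current(370, cables=2), 2))   # 5.14

# 370 W through a single daisy-chained cable: the "up to 10 A" figure
print(round(per_wire_current(370, cables=1), 2))   # 10.28
```

Real cards also pull some power from the slot, and the sharing between wires is never perfectly even, so treat these as ballpark values only.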

post edited by kevinc313 - 2019/10/26 08:50:49

|

bob16314

CLASSIFIED ULTRA Member

- Total Posts : 7859

- Reward points : 0

- Joined: 2008/11/07 22:33:22

- Location: Planet of the Babes

- Status: offline

- Ribbons : 761

Re: Dual 8 pin PCIE cable?

2019/10/26 21:39:41

(permalink)

kevinc313

This is generally correct. There are very real electrical engineering specs rating cables for current because of heating and voltage drop. This is a pretty big concern for low-voltage DC / high-current power delivery in many applications. The common solution for this in the PC space is multiple smaller wires; it scales well and is actually the less bulky solution because of how derating works. USE THE TWO CABLES.

Even if you could get a special single cable made that used correctly rated thicker wire and split into two 8 pin connectors, you would still have the single connector at the PSU that would be overwhelmed.

Cool story, bro..But here are some 'Fun Facts.'

8-pin/6-pin Y cables and their connectors are rated for more than the allowed load..Miners/Folders commonly use them in buku GPU setups.

8-pin PCIe Supplemental Power Connectors (also referred to as PEG connectors, or PCI Express Graphics connectors) have three +12V terminals..Each single terminal is rated to carry up to 8 Amps of current (96 Watts @ 12V) using 'Standard' terminals for a total of 288 Watts per connector.

Each 'HCS' (High-Current System) terminal is rated to carry up to 11 Amps of current (132 Watts @ 12V) for a total of 396 Watts per connector.

Each 'Plus HCS' terminal is rated to carry up to 12 Amps of current (144 Watts @ 12V) for a total of 432 Watts per connector.

HCS and Plus HCS terminals are sometimes advertised as 'gold plated terminals' or 'high current' connectors.

The terminals/connectors on the PSU and GPU should be rated similarly.

Another 'Fun Fact' is that electric/current flows on the surface of wires..The size/number of strands in a braided wire determines the current handling rating/capability, and braided wires have a higher current handling rating/capability than a solid wire due to the increased surface area.

It's also a common misconception/misstatement that a PSU can 'provide' up to 75W (6.25A @ 12V) and 150W (12.5A @ 12V) total to the 6-pin and 8-pin PCIe Supplemental Power Connectors..The PSU can 'provide' what the rail is rated at, which is way more than enough to overheat/melt/catch things on fire. It's the graphics card's VBIOS and voltage regulation/monitoring circuitry that determines how much the card is 'allowed' to 'draw' from the PCIe slot/6-pin/8-pin within the PCI-SIG spec of 75W/75W/150W, and it is often programmed to 'draw' less.

PCI-SIG is the group that writes the industry-standard specifications for all things PCI/PCIe that manufacturers 'should' adhere to, just like Intel writes the industry-standard Power Supply Design Guide specifications for PSUs that manufacturers 'should' adhere to..There are parts of the PCI-SIG and Power Supply Design Guide specs that say manufacturers 'must' adhere to certain things and parts saying they 'should' adhere to other things.

It used to be an issue when Intel specified that PSUs have mandatory multiple +12V rails for safety reasons..Then, graphics card manufacturers complained that Intel's +12V multi-rail spec didn't meet the needs of their more powerful graphics cards and would sometimes trip the OCP (Over Current Protection) on multi-rail PSUs..So then, single-rail +12V units were born due to backlash from graphics card manufacturers that demanded that Intel's multi-rail specs be voluntarily abandoned to meet their power needs.
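The per-connector totals in this post are just amps per terminal times three +12V terminals times 12 V. A small sketch that reproduces them from the ratings quoted here and compares each with the 150 W 8-pin allowance; the helper name `connector_watts` and the table layout are illustrative, not from any datasheet.

```python
# Terminal ratings as quoted in the post (amps per +12V terminal)
TERMINAL_AMPS = {"Standard": 8.0, "HCS": 11.0, "Plus HCS": 12.0}

SPEC_8PIN_WATTS = 150.0  # PCI-SIG allowance for an 8-pin, as stated in the thread


def connector_watts(amps_per_terminal, terminals=3, volts=12.0):
    """Total power a connector's +12V terminals are rated to carry."""
    return amps_per_terminal * terminals * volts


for name, amps in TERMINAL_AMPS.items():
    watts = connector_watts(amps)
    print(f"{name:9s} {watts:5.0f} W  ({watts / SPEC_8PIN_WATTS:.2f}x the 150 W allowance)")
```

This only covers the terminal ratings. It says nothing about how a given crimp, housing, or worn connector behaves in practice.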

* Corsair Obsidian 450D Mid-Tower - Airflow Edition * ASUS ROG Maximus X Hero (Wi-Fi AC) * Intel i7-8700K @ 5.0 GHz * 16GB G.SKILL Trident Z 4133MHz * Sabrent Rocket 1TB M.2 SSD * WD Black 500 GB HDD * Seasonic M12 II 750W * Corsair H115i Elite Capellix 280mm * EVGA GTX 760 SC * Win7 Home/Win10 Home * "Whatever it takes, as long as it works" - Me

|

fergusonll

FTW Member

- Total Posts : 1686

- Reward points : 0

- Joined: 2013/02/21 09:49:10

- Status: offline

- Ribbons : 3

Re: Dual 8 pin PCIE cable?

2019/10/26 23:02:23

(permalink)

Tris1066

Well, from my own experience using a Corsair HX1000i (fairly low end psu or so I'm told), with a splitter cable my 2080ti ftw3 does run, but at reduced performance (probably a drop of 10-15% in benchmarks).

Using 2 separate cables everything is fine.

I would say the Corsair HXi series is far from being a low-end PSU. Here's a JonnyGuru review, http://www.jonnyguru.com/...00w-power-supply, showing top-notch performance and build quality.

|

fergusonll

FTW Member

- Total Posts : 1686

- Reward points : 0

- Joined: 2013/02/21 09:49:10

- Status: offline

- Ribbons : 3

Re: Dual 8 pin PCIE cable?

2019/10/26 23:26:32

(permalink)

squall-leonhart

Prior issues with HXi power supplies and 2080ti indicate that it is best to either use the HXi configured to single rail, or run two cables from PSU to card and use multi rail.

History has demonstrated a 2080ti can overload the unit in Multirail mode.

I didn't know there were issues with HXi PSUs. I have my HX1000i powering an overclocked 9900K and a 2080 Ti XC Ultra, set to multi-rail (with separate PCIe cables). To be on the safe side, I guess I'm going to stop procrastinating, install iCUE, and configure it to single rail.

post edited by fergusonll - 2019/10/26 23:29:50

|

bob16314

CLASSIFIED ULTRA Member

- Total Posts : 7859

- Reward points : 0

- Joined: 2008/11/07 22:33:22

- Location: Planet of the Babes

- Status: offline

- Ribbons : 761

Re: Dual 8 pin PCIE cable?

2019/10/26 23:56:47

(permalink)

Guys, keep in mind that I'm just talking about the current-handling capability for those who think "Oh, it will get hot and melt", or something..That was demonstrated to be somewhat true in ye olde days of yore and on Low-Tier/El Cheapo units, which most of them don't even come with a Y splitter cable anyway, and especially when using junk adapters.

|

kevinc313

CLASSIFIED ULTRA Member

- Total Posts : 5004

- Reward points : 0

- Joined: 2019/02/28 09:27:55

- Status: offline

- Ribbons : 22

Re: Dual 8 pin PCIE cable?

2019/10/27 02:07:48

(permalink)

bob16314

kevinc313

This is generally correct. There are very real electrical engineering specs rating cables for current because of heating and voltage drop. This is a pretty big concern for low-voltage DC / high-current power delivery in many applications. The common solution for this in the PC space is multiple smaller wires; it scales well and is actually the less bulky solution because of how derating works. USE THE TWO CABLES.

Even if you could get a special single cable made that used correctly rated thicker wire and split into two 8 pin connectors, you would still have the single connector at the PSU that would be overwhelmed.

Cool story, bro..But here are some 'Fun Facts.'

8-pin/6-pin Y cables and their connectors are rated for more than the allowed load..Miners/Folders commonly use them in buku GPU setups.

8-pin PCIe Supplemental Power Connectors (also referred to as PEG connectors, or PCI Express Graphics connectors) have three +12V terminals..Each single terminal is rated to carry up to 8 Amps of current (96 Watts @ 12V) using 'Standard' terminals for a total of 288 Watts per connector.

Each 'HCS' (High-Current System) terminal is rated to carry up to 11 Amps of current (132 Watts @ 12V) for a total of 396 Watts, per connector.

Each 'Plus HCS' terminal is rated to carry up to 12 Amps of current (144 Watts @ 12V) for a total of 432 Watts, per connector.

HCS and Plus HCS terminals are sometimes advertised as 'gold plated terminals' or 'high current' connectors.

The terminals/connectors on the PSU and GPU should be rated similarly.

Another 'Fun Fact' is that electric/current flows on the surface of wires..The size/number of strands in a braided wire determines the current handling rating/capability, and braided wires have a higher current handling rating/capability than a solid wire due to the increased surface area.

It's also a common misconception/misstatement that a PSU can 'provide' up to 75W (6.25A @ 12V) and 150W (12.5A @ 12V) total to the 6-pin and 8-pin PCIe Supplemental Power Connectors..The PSU can 'provide' what the rail is rated at, which is way more than enough to overheat/melt/catch things on fire. It's the graphics card's VBIOS and voltage regulation/monitoring circuitry that determines how much the card is 'allowed' to 'draw' from the PCIe slot/6-pin/8-pin within the PCI-SIG spec of 75W/75W/150W, and it is often programmed to 'draw' less.

PCI-SIG is the group that writes the industry-standard specifications for all things PCI/PCIe that manufacturers 'should' adhere to, just like Intel writes the industry-standard Power Supply Design Guide specifications for PSUs that manufacturers 'should' adhere to..There are parts of the PCI-SIG and Power Supply Design Guide specs that say manufacturers 'must' adhere to these certain things and parts saying they 'should' adhere to these other things.

It used to be an issue when Intel specified that PSUs have mandatory multiple +12V rails for safety reasons..Then, graphics card manufacturers complained that Intel's +12V multi-rail spec didn't meet the needs of their more powerful graphics cards and would sometimes trip the OCP (Over Current Protection) on multi-rail PSUs..So then, single-rail +12V units were born due to backlash from graphics card manufacturers that demanded that Intel's multi-rail specs be voluntarily abandoned to meet their power needs.

Great facts. Still sounds like running one cable is a bad idea.

|

squall-leonhart

CLASSIFIED Member

- Total Posts : 2904

- Reward points : 0

- Joined: 2009/07/27 19:57:03

- Location: Australia

- Status: offline

- Ribbons : 24

Re: Dual 8 pin PCIE cable?

2019/10/27 03:12:06

(permalink)

bob16314

Guys, keep in mind that I'm just talking about the current-handling capability for those who think "Oh, it will get hot and melt", or something..That was demonstrated to be somewhat true in ye olde days of yore and on Low-Tier/El Cheapo units, which most of them don't even come with a Y splitter cable anyway, and especially when using junk adapters.

There are still cases of melting ATX cables now.

|

kevinc313

CLASSIFIED ULTRA Member

- Total Posts : 5004

- Reward points : 0

- Joined: 2019/02/28 09:27:55

- Status: offline

- Ribbons : 22

Re: Dual 8 pin PCIE cable?

2019/10/27 10:02:26

(permalink)

bob16314

Guys, keep in mind that I'm just talking about the current-handling capability for those who think "Oh, it will get hot and melt", or something..That was demonstrated to be somewhat true in ye olde days of yore and on Low-Tier/El Cheapo units, which most of them don't even come with a Y splitter cable anyway, and especially when using junk adapters.

I wasn't saying it would melt. I was saying there would be heating and voltage drop to the point that the card wouldn't operate well under load, even if everything was within spec. Common issue in high power low voltage DC electronics.

post edited by kevinc313 - 2019/10/27 10:05:56

|

bob16314

CLASSIFIED ULTRA Member

- Total Posts : 7859

- Reward points : 0

- Joined: 2008/11/07 22:33:22

- Location: Planet of the Babes

- Status: offline

- Ribbons : 761

Re: Dual 8 pin PCIE cable?

2019/10/28 20:39:15

(permalink)

kevinc313

bob16314

Guys, keep in mind that I'm just talking about the current-handling capability for those who think "Oh, it will get hot and melt", or something..That was demonstrated to be somewhat true in ye olde days of yore and on Low-Tier/El Cheapo units, which most of them don't even come with a Y splitter cable anyway, and especially when using junk adapters.

I wasn't saying it would melt. I was saying there would be heating and voltage drop to the point that the card wouldn't operate well under load, even if everything was within spec. Common issue in high power low voltage DC electronics.

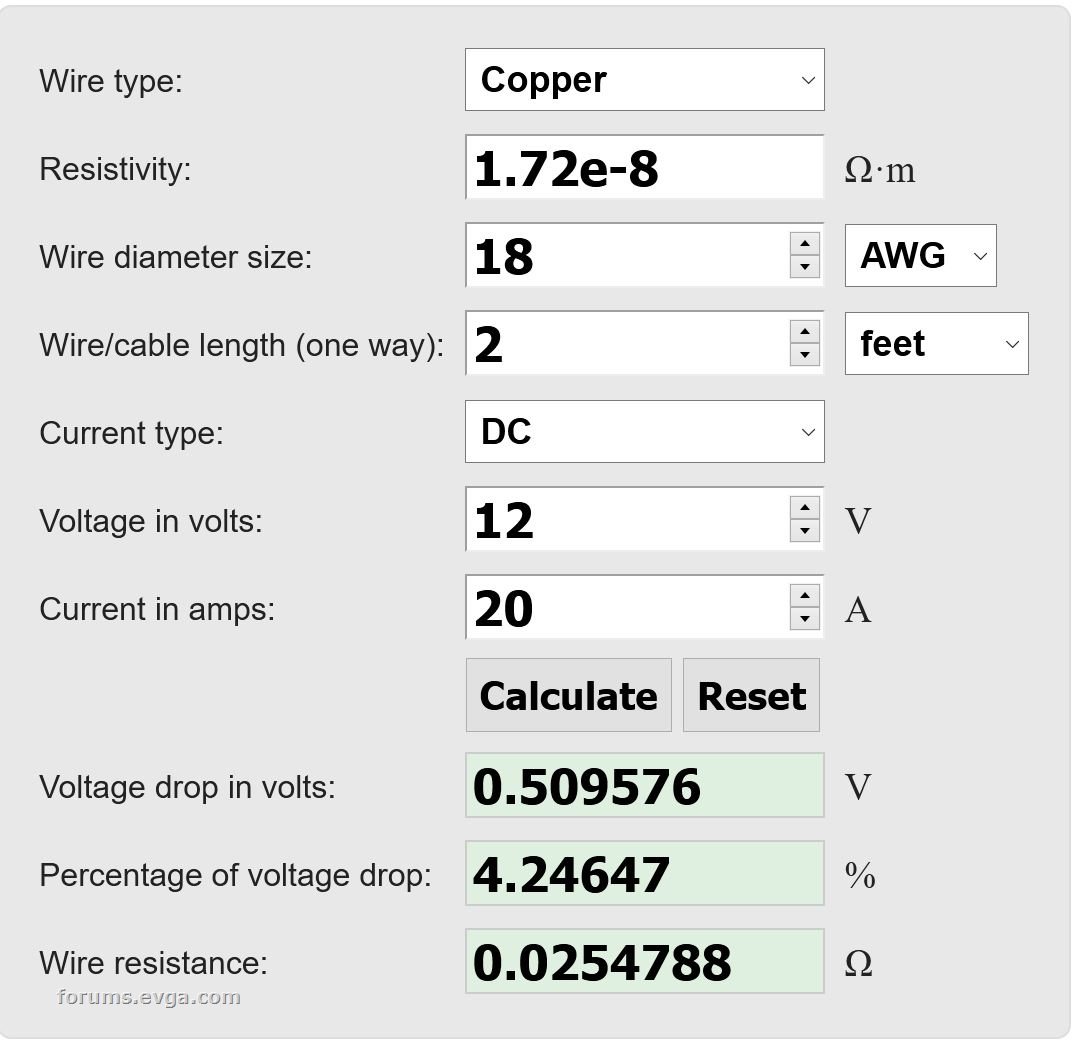

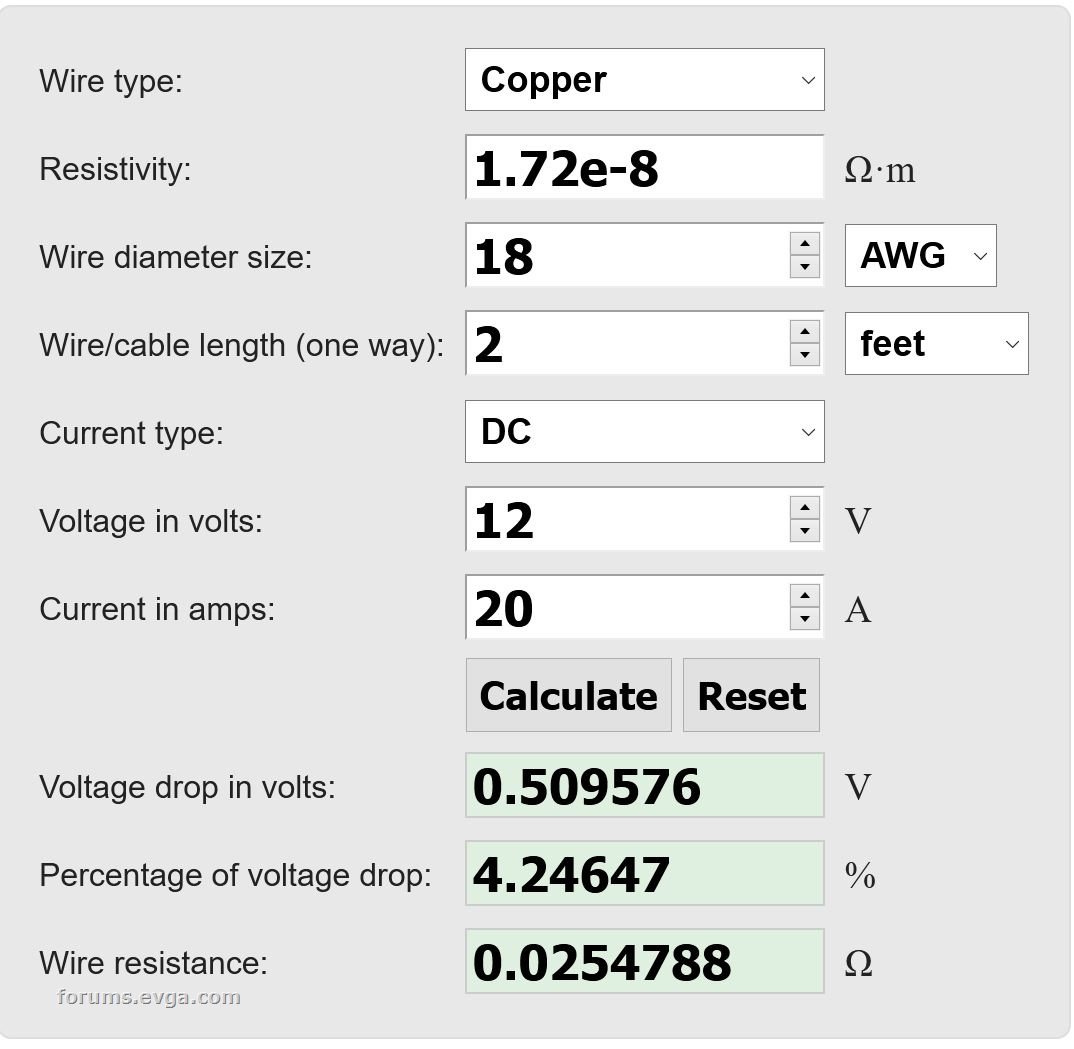

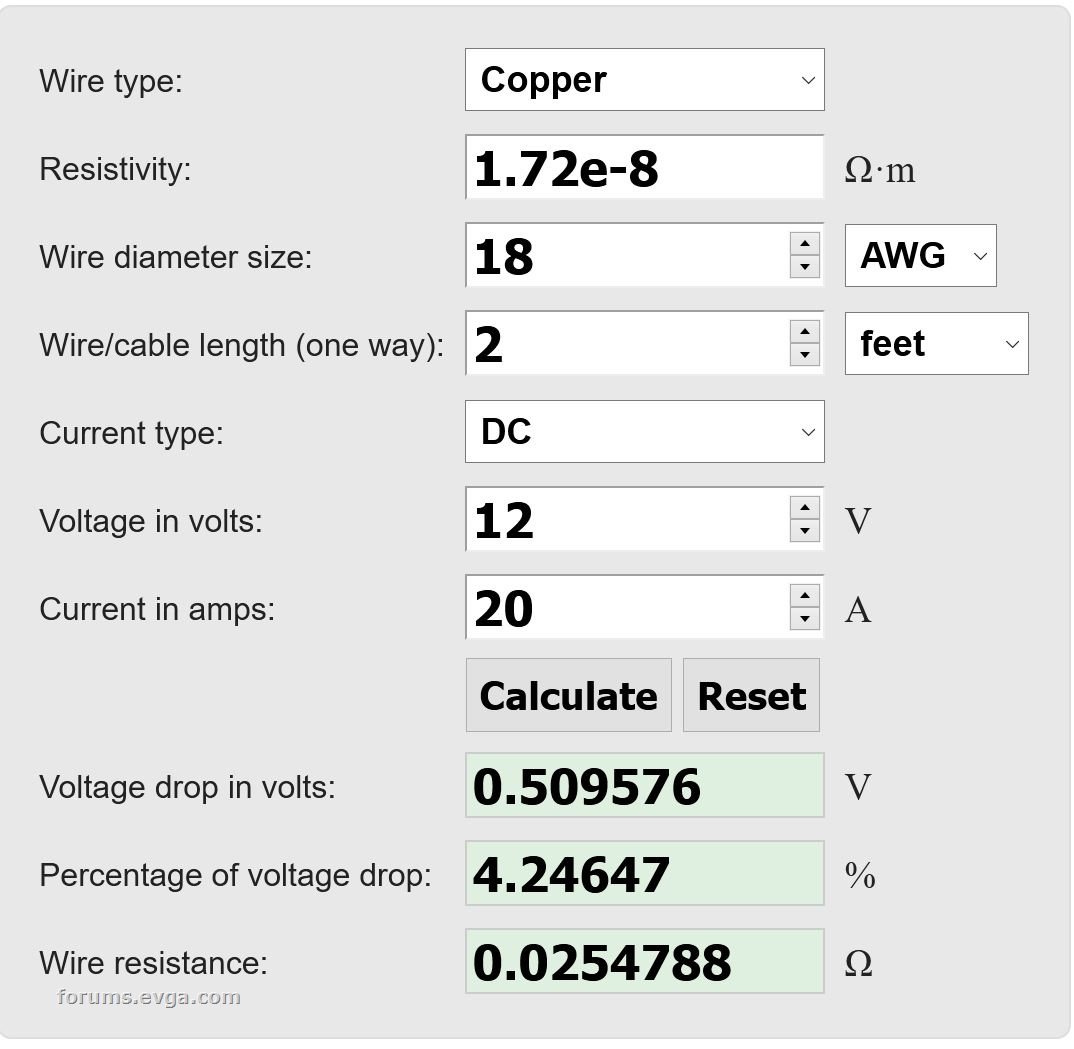

Mmmk..And the Voltage Drop in 1 foot of AWG 18 copper wire passing 12VDC in a solid wire using Standard 8A (max) rated terminals is approx. 0.849% or 0.102V..Braided wire used in PSU cables would be slightly more..Not enough to matter, especially when considering that the voltage tolerance on the nominal +12VDC line is +/- 5%, or a Min/Max of 11.4VDC to 12.6VDC as stated in the Intel Power Supply Design Guide.
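The 0.102 V figure can be roughly reproduced with Ohm's law. This sketch assumes about 6.385 ohms per 1000 ft for AWG 18 copper at room temperature and counts the return path as well (2 ft of conductor for 1 ft of cable), which appears to be what the quoted number does. It ignores contact resistance at the terminals, and the function name `voltage_drop` is only for illustration.

```python
AWG18_OHMS_PER_FT = 6.385 / 1000.0  # assumed: ~6.385 ohms per 1000 ft of copper at 20 C


def voltage_drop(amps, cable_ft, ohms_per_ft=AWG18_OHMS_PER_FT, round_trip=True):
    """V = I * R over the conductor length (out and back if round_trip)."""
    conductor_ft = cable_ft * (2 if round_trip else 1)
    return amps * ohms_per_ft * conductor_ft


drop = voltage_drop(8.0, 1.0)
print(f"{drop:.3f} V, {drop / 12.0 * 100:.2f}% of 12 V")  # 0.102 V, 0.85% of 12 V

# ATX tolerance quoted above: 12 V +/- 5%, i.e. 11.4 V to 12.6 V
print(12.0 - drop >= 11.4)  # True
```

Longer cables and higher per-wire currents scale the drop linearly, so plugging in your own cable length and current gives a quick sanity check.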

post edited by bob16314 - 2019/10/28 20:43:03

|

kevinc313

CLASSIFIED ULTRA Member

- Total Posts : 5004

- Reward points : 0

- Joined: 2019/02/28 09:27:55

- Status: offline

- Ribbons : 22

Re: Dual 8 pin PCIE cable?

2019/10/28 21:10:10

(permalink)

bob16314

kevinc313

bob16314

Guys, keep in mind that I'm just talking about the current-handling capability for those who think "Oh, it will get hot and melt", or something..That was demonstrated to be somewhat true in ye olde days of yore and on Low-Tier/El Cheapo units, which most of them don't even come with a Y splitter cable anyway, and especially when using junk adapters.

I wasn't saying it would melt. I was saying there would be heating and voltage drop to the point that the card wouldn't operate well under load, even if everything was within spec. Common issue in high power low voltage DC electronics.

Mmmk..And the Voltage Drop in 1 foot of AWG 18 copper wire passing 12VDC in a solid wire using Standard 8A (max) rated terminals is approx. 0.849% or 0.102V..Braided wire used in PSU cables would be slightly more..Not enough to matter, especially when considering that the voltage tolerance on the nominal +12VDC line is +/- 5%, or a Min/Max of 11.4VDC to 12.6VDC as stated in the Intel Power Supply Design Guide.

One has to figure that PSU and GPU manufacturers have tested the real voltage drop and heating that a single cable encounters feeding a high power GPU mounted in a PC case, thus recommending dual power cables, while not even providing dual 8-pin cables with their modular power supplies. Clearly a simple voltage drop calculation doesn't capture the nuances of the real application.

post edited by kevinc313 - 2019/10/28 21:15:06

|

HeavyHemi

Omnipotent Enthusiast

- Total Posts : 13887

- Reward points : 0

- Joined: 2008/11/28 20:31:42

- Location: Western Washington

- Status: offline

- Ribbons : 135

Re: Dual 8 pin PCIE cable?

2019/10/28 21:45:38

(permalink)

bob16314

kevinc313

bob16314

Guys, keep in mind that I'm just talking about the current-handling capability for those who think "Oh, it will get hot and melt", or something..That was demonstrated to be somewhat true in ye olde days of yore and on Low-Tier/El Cheapo units, which most of them don't even come with a Y splitter cable anyway, and especially when using junk adapters.

I wasn't saying it would melt. I was saying there would be heating and voltage drop to the point that the card wouldn't operate well under load, even if everything was within spec. Common issue in high power low voltage DC electronics.

Mmmk..And the Voltage Drop in 1 foot of AWG 18 copper wire passing 12VDC in a solid wire using Standard 8A (max) rated terminals is approx. 0.849% or 0.102V..Braided wire used in PSU cables would be slightly more..Not enough to matter, especially when considering that the voltage tolerance on the nominal +12VDC line is +/- 5%, or a Min/Max of 11.4VDC to 12.6VDC as stated in the Intel Power Supply Design Guide.

Have you considered the issue could be more related to supplying the entire current via one connector on the PSU? Back in the day, it was rather trivial to trip an HX1000 if you were running SLI and did not spread your load correctly. It was internally two separate 500 watt power supplies, one for each rail. While these are 'virtual rails' or single rails, you're still, again, using one Molex-style connector on the PSU. You're running upwards of 20 amps through that connector. That all being said, generally speaking you will be okay using a single dual-head cable, especially if you're running stock. However, if you're overclocking or having stability issues, using two cables, or switching to two for troubleshooting, is just common sense. There are too many cases of a second cable solving a stability issue for it to be a myth.

post edited by HeavyHemi - 2019/10/28 21:49:52

EVGA X99 FTWK / i7 6850K @ 4.5ghz / RTX 3080Ti FTW Ultra / 32GB Corsair LPX 3600mhz / Samsung 850Pro 256GB / Be Quiet BN516 Straight Power 12-1000w 80 Plus Platinum / Window 10 Pro

|

kevinc313

CLASSIFIED ULTRA Member

- Total Posts : 5004

- Reward points : 0

- Joined: 2019/02/28 09:27:55

- Status: offline

- Ribbons : 22

Re: Dual 8 pin PCIE cable?

2019/10/29 05:32:32

(permalink)

HeavyHemi

bob16314

kevinc313

bob16314

Guys, keep in mind that I'm just talking about the current-handling capability for those who think "Oh, it will get hot and melt", or something..That was demonstrated to be somewhat true in ye olde days of yore and on Low-Tier/El Cheapo units, which most of them don't even come with a Y splitter cable anyway, and especially when using junk adapters.

I wasn't saying it would melt. I was saying there would be heating and voltage drop to the point that the card wouldn't operate well under load, even if everything was within spec. Common issue in high power low voltage DC electronics.

Mmmk..And the Voltage Drop in 1 foot of AWG 18 copper wire passing 12VDC in a solid wire using Standard 8A (max) rated terminals is approx. 0.849% or 0.102V..Braided wire used in PSU cables would be slightly more..Not enough to matter, especially when considering that the voltage tolerance on the nominal +12VDC line is +/- 5%, or a Min/Max of 11.4VDC to 12.6VDC as stated in the Intel Power Supply Design Guide.

Have you considered the issue could be more related to supplying the entire current via one connector on the PSU? Back in the day, it was rather trivial to trip a HX1000 if you were running SLI and did not spread your load correctly.

It was internally two separate 500 watt power supplies, one for each rail. While these are 'virtual rails' or single rails, you're still, again, using one Molex-style connector on the PSU. You're running upwards of 20 amps through that connector. That all being said, generally speaking you will be okay using a single dual-head cable, especially if you're running stock. However, if you're overclocking or having stability issues, using two cables, or switching to two for troubleshooting, is just common sense. There are too many cases of a second cable solving a stability issue for it to be a myth.

Heating and voltage drop through any connector pin and mating pair is a common concern. Not to mention safety factor of the system when combined.

post edited by kevinc313 - 2019/10/29 05:36:04

|

Cool GTX

EVGA Forum Moderator

- Total Posts : 31351

- Reward points : 0

- Joined: 2010/12/12 14:22:25

- Location: Folding for the Greater Good

- Status: online

- Ribbons : 123

Re: Dual 8 pin PCIE cable?

2019/10/29 05:43:54

(permalink)

Loose connections run hot (more resistance) and can cause melting under "normal loads".

Poorly assembled cable terminals can also have a huge impact on the load capacity of any wire ... unfortunately, production is never perfect.

With so many variables, one can always just test their own system, if the look of multiple cables is an issue for their build.

I'll continue to split the load over as many separate PCIe cables as possible ... It has served me well with many OC rigs running 24/7.
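To put rough numbers on why a loose or poorly crimped terminal matters: heat in a contact goes as I²R, so doubling the current through a pin quadruples the heat for the same contact resistance. The resistance values below are made-up placeholders for a "good" and a "bad" contact, not measurements, and `contact_heat_watts` is a hypothetical helper.

```python
def contact_heat_watts(amps, contact_milliohms):
    """Power dissipated in one contact: P = I^2 * R."""
    return amps ** 2 * (contact_milliohms / 1000.0)


# Hypothetical contact resistances, for illustration only
CONTACTS = {"good contact (assumed 5 mOhm)": 5.0, "loose contact (assumed 50 mOhm)": 50.0}

# Per-pin currents: roughly two cables vs one cable for a ~370 W card
for amps in (5.1, 10.3):
    for label, milliohms in CONTACTS.items():
        print(f"{amps:4.1f} A through {label}: {contact_heat_watts(amps, milliohms):.2f} W")
```

A fraction of a watt spread over a pin is nothing, but several watts concentrated in one small terminal inside a plastic housing is how connectors get discolored or melt.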

Learn your way around the EVGA Forums, Rules & limits on new accounts Ultimate Self-Starter Thread For New Members

I am a Volunteer Moderator - not an EVGA employee

Older RIG projects RTX Project Nibbler

When someone does not use reason to reach their conclusion in the first place; you can't use reason to convince them otherwise!

|

bob16314

CLASSIFIED ULTRA Member

- Total Posts : 7859

- Reward points : 0

- Joined: 2008/11/07 22:33:22

- Location: Planet of the Babes

- Status: offline

- Ribbons : 761

Re: Dual 8 pin PCIE cable?

2019/10/29 06:02:31

(permalink)

☼ Best Answer by Cool GTX 2019/10/29 06:34:01

Seasonic kinda gave me a yes, but no, be probably maybe answer

And it goes like this:

Hello Robert,

Thank you for contacting Seasonic.

We recommend to use two different cables for high end GPU's in order to balance the load. While a cable with a daisy chain can easily handle the load, the pins by themselves may heat a lot if load is important and melt the connector. The issue itself, if any, is not really the connector or the pins but combination of both. This is why, while cables can handle such load, we prefer to be on the safe side and recommend to use two cables with high end GPU's like RTX 2080/2080Ti, Vega, etc...

Thank you.

So as far as load goes, you can use one cable if it or the connectors don't get hot.

|

Cool GTX

EVGA Forum Moderator

- Total Posts : 31351

- Reward points : 0

- Joined: 2010/12/12 14:22:25

- Location: Folding for the Greater Good

- Status: online

- Ribbons : 123

Re: Dual 8 pin PCIE cable?

2019/10/29 06:34:58

(permalink)

bob16314

Seasonic kinda gave me a yes, but no, be probably maybe answer

And it goes like this:

Hello Robert,

Thank you for contacting Seasonic.

We recommend to use two different cables for high end GPU's in order to balance the load. While a cable with a daisy chain can easily handle the load, the pins by themselves may heat a lot if load is importrant and melt the connector. The issue itself, if any, is not really the connector or the pins but combination of both. This is why, while cables can handle such load, we prefer to be on the safe side and recommend to use two cables with high end GPU's like RTX 2080/2080Ti, Vega, etc...

Thank you.

So as far as load goes, you can use one cable if it or the connectors don't get hot.

Thank you for Sharing the Official Seasonic "Answer" Yes if it Works

|

kevinc313

CLASSIFIED ULTRA Member

- Total Posts : 5004

- Reward points : 0

- Joined: 2019/02/28 09:27:55

- Status: offline

- Ribbons : 22

Re: Dual 8 pin PCIE cable?

2019/10/29 06:49:32

(permalink)

I'm going to replace all my house wiring with 18G, it's rated for over 15 amps.

|

Cool GTX

EVGA Forum Moderator

- Total Posts : 31351

- Reward points : 0

- Joined: 2010/12/12 14:22:25

- Location: Folding for the Greater Good

- Status: online

- Ribbons : 123

Re: Dual 8 pin PCIE cable?

2019/10/29 06:53:09

(permalink)

kevinc313

I'm going to replace all my house wiring with 18G, it's rated for over 15 amps.

You'll need to rewrite the NFPA 70 National Electrical Code first

|

castrator86

SSC Member

- Total Posts : 841

- Reward points : 0

- Joined: 2010/07/24 09:33:21

- Status: offline

- Ribbons : 4

Re: Dual 8 pin PCIE cable?

2019/10/29 07:29:49

(permalink)

Cool GTX

Yes if it Works

Until it doesn't and things start going kablooey.

|

Sajin

EVGA Forum Moderator

- Total Posts : 49227

- Reward points : 0

- Joined: 2010/06/07 21:11:51

- Location: Texas, USA.

- Status: online

- Ribbons : 199

Re: Dual 8 pin PCIE cable?

2019/10/29 09:29:56

(permalink)

bob16314

Seasonic kinda gave me a yes, but no, be probably maybe answer

And it goes like this:

Hello Robert,

Thank you for contacting Seasonic.

We recommend to use two different cables for high end GPU's in order to balance the load. While a cable with a daisy chain can easily handle the load, the pins by themselves may heat a lot if load is importrant and melt the connector. The issue itself, if any, is not really the connector or the pins but combination of both. This is why, while cables can handle such load, we prefer to be on the safe side and recommend to use two cables with high end GPU's like RTX 2080/2080Ti, Vega, etc...

Thank you.

So as far as load goes, you can use one cable if it or the connectors don't get hot.

And who is going to monitor their connectors 24/7 to see if they are getting hot? Nobody. Just use two cables and be safe about it.

|

castrator86

SSC Member

- Total Posts : 841

- Reward points : 0

- Joined: 2010/07/24 09:33:21

- Status: offline

- Ribbons : 4

Re: Dual 8 pin PCIE cable?

2019/10/29 09:33:02

(permalink)

Seriously. I don't know why so many people are blowing thousands of dollars on GPUs and building these incredible rigs only to cheap out on something as easy as just running separate, dedicated, power cables to the PSU.

|

DeadlyMercury

iCX Member

- Total Posts : 422

- Reward points : 0

- Joined: 2019/09/11 14:05:07

- Location: Moscow

- Status: offline

- Ribbons : 14

Re: Dual 8 pin PCIE cable?

2019/10/29 09:39:13

(permalink)

I don't think it's about being "cheap"; it's about looks and fewer cables.

But still, there is not much difference between running one or two PCIe cables to the GPU.

"An original idea. That can't be too hard. The library must be full of them." Stephen Fry

|

castrator86

SSC Member

- Total Posts : 841

- Reward points : 0

- Joined: 2010/07/24 09:33:21

- Status: offline

- Ribbons : 4

Re: Dual 8 pin PCIE cable?

2019/10/29 09:42:15

(permalink)

DeadlyMercury

I don't think it's about being "cheap"; it's about looks and fewer cables.

But still, there is not much difference between running one or two PCIe cables to the GPU.

If only EVGA sold something to help out with that...

|

Sajin

EVGA Forum Moderator

- Total Posts : 49227

- Reward points : 0

- Joined: 2010/06/07 21:11:51

- Location: Texas, USA.

- Status: online

- Ribbons : 199

Re: Dual 8 pin PCIE cable?

2019/10/29 09:47:34

(permalink)

HeavyHemi

bob16314

kevinc313

bob16314

Guys, keep in mind that I'm just talking about the current-handling capability for those who think "Oh, it will get hot and melt", or something..That was demonstrated to be somewhat true in ye olde days of yore and on Low-Tier/El Cheapo units, which most of them don't even come with a Y splitter cable anyway, and especially when using junk adapters.

I wasn't saying it would melt. I was saying there would be heating and voltage drop to the point that the card wouldn't operate well under load, even if everything was within spec. Common issue in high power low voltage DC electronics.

Mmmk..And the Voltage Drop in 1 foot of AWG 18 copper wire passing 12VDC in a solid wire using Standard 8A (max) rated terminals is approx. 0.849% or 0.102V..Braided wire used in PSU cables would be slightly more..Not enough to matter, especially when considering that the voltage tolerance on the nominal +12VDC line is +/- 5%, or a Min/Max of 11.4VDC to 12.6VDC as stated in the Intel Power Supply Design Guide.

Have you considered the issue could be more related to supplying the entire current via one connector on the PSU? Back in the day, it was rather trivial to trip a HX1000 if you were running SLI and did not spread your load correctly.

It was internally two separate 500 watt power supplies, one for each rail. While these are 'virtual rails' or single rails, you're still, again, using one Molex-style connector on the PSU. You're running upwards of 20 amps through that connector. That all being said, generally speaking you will be okay using a single dual-head cable, especially if you're running stock. However, if you're overclocking or having stability issues, using two cables, or switching to two for troubleshooting, is just common sense. There are too many cases of a second cable solving a stability issue for it to be a myth.

+1. I've seen plenty of 2080 Ti owners fix their stability issues by running two cables instead of one.

|