sturmbelial

New Member

- Total Posts : 18

- Reward points : 0

- Joined: 2019/02/25 17:42:21

- Status: offline

- Ribbons : 0

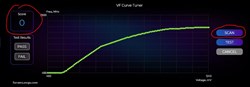

Guys,

So I finally got my EVGA RTX 2080 Ti Ultra Hybrid set up in my system. I'm a little confused about 2 separate things (and please bear with me, I'm not a total n00b but I'm not an expert in these matters either).

Boost Clock:

When I look at the specs for this card anywhere, I see "Boost Clock: 1755 MHz" and "Memory Clock: 14000 MHz". However, when I open Precision X1, it shows me "Clock: 1350 MHz" and "Memory: 7000 MHz". I do understand that these are the "Base" speeds for memory & clock.

So my question: do I need to do anything to get the 1755 MHz Clock & 14000 MHz Memory? Or does it happen automagically when you're using the graphics card, for example, while playing a game?

If the latter is true, and you do in fact get the boost clock speeds when the card is being utilized by a game, is there a way for me to check what Clock & Memory speeds I'm getting while playing a game, like an overlay or something? I use the Nvidia GeForce Experience for the Frame Rate overlay (as Steam Big Picture doesn't support the Frame Rate). Is there something like that which will also show me Clock & Memory speeds using a keyboard shortcut?

Precision X1 Overclocking:

I wanted to achieve very simple & basic auto overclocking. I saw a couple of Precision X1 overview videos floating around on YouTube. Essentially I raised the "Target" tab values to max (120% Power Target / 87C GPU Temp), then switched over to the VF Curve Tuner, then hit "Scan". After the Scan completed, that little "Score" box changed to "+63" instead of "0". Then I hit "Apply", then I hit "Save".

After that, when I look in the top header "Memory / CPU / Target" tab, I don't see any change from my default values. They still show what they always show me. Essentially nothing changed after the whole "Scan" exercise.

Am I doing something wrong or missing a step? How do I get the overclock which Scan discovered (+63) to actually apply and be registered to the card?

Thanks for your help!!

EDIT: Can't seem to add screenshots inline in my post (maybe because I'm new). I have 2 screenshots attached as PNG to show Precision X1.

post edited by sturmbelial - 2019/03/11 07:22:23

Attached Image(s)

|

Sajin

EVGA Forum Moderator

- Total Posts : 49227

- Reward points : 0

- Joined: 2010/06/07 21:11:51

- Location: Texas, USA.

- Status: online

- Ribbons : 199

Re: Confused about Clock Speed & Precision X1 Scan.

2019/03/11 13:20:39


First question: No.

Second question: Yes.

Third question: Yeah, use the on-screen display function built into px1.

Fourth question: See my answer to your third question.

Since you got a score of +63 all you have to do is simply put +63 for the clock adjustment inside px1.
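For checking clocks outside of an overlay, one option is to poll the NVIDIA driver's `nvidia-smi` command-line tool while a game is running. The sketch below is only an illustration and is not something Precision X1 provides: it assumes `nvidia-smi` is on the PATH, and the helper names `parse_sample` and `read_gpu_sample` are made up for this example. The memory figure the driver reports may not match the number shown in Precision X1.

```python
import subprocess

# Standard nvidia-smi --query-gpu properties: core clock, memory clock, temperature.
FIELDS = ["clocks.gr", "clocks.mem", "temperature.gpu"]


def parse_sample(csv_line):
    """Turn one 'csv,noheader,nounits' line into a dict of integers.

    Raises ValueError if the driver reports a non-numeric value such as [N/A].
    """
    values = [int(v.strip()) for v in csv_line.split(",")]
    return dict(zip(FIELDS, values))


def read_gpu_sample(gpu_index=0):
    """Ask nvidia-smi for the current clocks and temperature of one GPU."""
    result = subprocess.run(
        [
            "nvidia-smi",
            f"--id={gpu_index}",
            "--query-gpu=" + ",".join(FIELDS),
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_sample(result.stdout.strip().splitlines()[0])
```

Calling `read_gpu_sample()` once a second from a second monitor or a log file gives a rough record of what the card did during a session; it does not replace an in-game overlay.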

|

sturmbelial

2019/03/11 14:10:08

Sajin

First question: No.

Second question: Yes.

Third question: Yeah, use the built in on screen display function that is built into px1.

Forth question: See my answer to your third question.

Since you got a score of +63 all you have to do is simply put +63 for the clock adjustment inside px1.

Sajin,

Thanks for your response!! I think that cleared up most of my queries. I did actually read up on a few more posts/articles/etc. and discovered some more information which corresponds to your answers. I was missing the last step of adding the "+63" in the Clock box. I also discovered the OSD functions provided by Precision X1. Exactly what I was looking for.

A couple of follow-ups:

1. A couple of articles I read suggested that after running the Scan and adding your result to the clock box, the Power & Temp sliders should be dragged back to the highest possible value (120/87), and left there. Is that correct?

2. It was also suggested to push the "Voltage" slider under the "CPU" tab to the highest value and leave it there, because (quoting the article) "Scan doesn't do anything with it, and leaving it to highest value will ensure highest possible clock speeds with safe & stable voltage". Is that correct?

3. Lastly, does anything need to be done with the Memory clock slider? I saw some posts where people were putting 500 or 1000 in it. Does Scan exclude Memory? If so, should we attempt to increase that, or leave it alone?

Thanks again, I appreciate it.

|

Sajin

2019/03/11 14:16:34

No problem.

1. Yep.

2. Yes.

3. You can definitely overclock the memory too if you want. Yes, scan does exclude the memory. Feel free to tweak it as you see fit.

|

sturmbelial

2019/03/12 07:32:56

Sajin

No problem.

1. Yep.

2. Yes.

3. You can definitely overclock the memory too if you want. Yes, scan does exclude the memory. Feel free to tweak it as you see fit.

Once again, very helpful answer! Thanks for your response. There is one other thing I meant to check (kept forgetting since first post). Do I need to save any of this to a Profile somehow, or once I'm done with all the steps above, if I just minimize Precision X1, it will always load the saved settings on startup? I see the numbers for the profiles on the side. I'm not really clear how to use them and if they need to be used at all. Thanks again, appreciate your time.

|

Cool GTX

EVGA Forum Moderator

- Total Posts : 31342

- Reward points : 0

- Joined: 2010/12/12 14:22:25

- Location: Folding for the Greater Good

- Status: offline

- Ribbons : 123

Re: Confused about Clock Speed & Precision X1 Scan.

2019/03/12 08:01:59


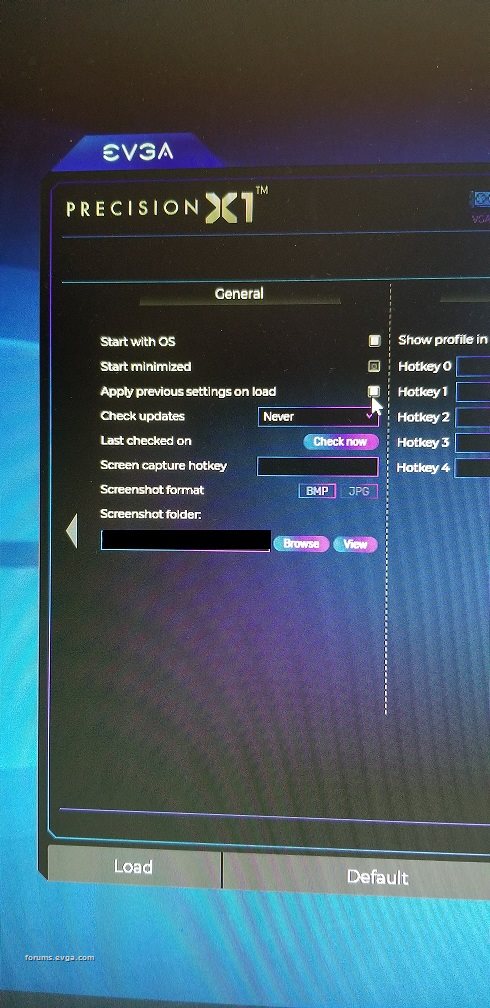

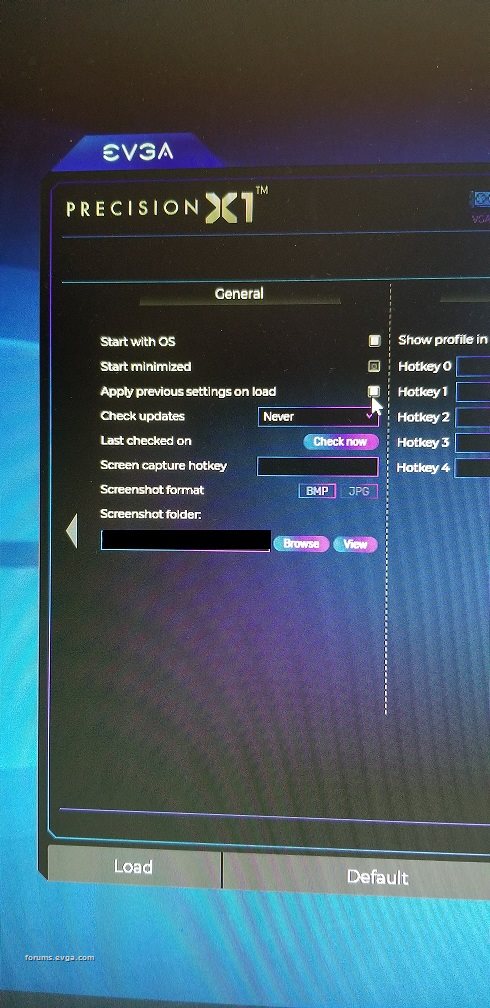

You must Save to a Profile Number - click on any 0-9

Then Apply & then Save

For Auto load of the Last Open Profile there are a couple of items to check mark to make it work - launch on Reboot & Load previously saved Profile#

You need to select both of these:

1) "Start with OS"

2) "Apply previous settings on load"

WARNING: when trying to find your best OC, do Not select "Apply previous settings on load" --> if it's an unstable setting you do not want it to load and crash your Rig on restart

Then click: Apply & then Save (these are needed to save changes to a profile; I can't remember if they're needed to save the auto start setting, I have not changed mine in months)

Learn your way around the EVGA Forums, Rules & limits on new accounts Ultimate Self-Starter Thread For New Members

I am a Volunteer Moderator - not an EVGA employee

Older RIG projects RTX Project Nibbler

When someone does not use reason to reach their conclusion in the first place; you can't use reason to convince them otherwise!

|

sturmbelial

2019/03/12 08:37:05

Cool GTX

You must Save to a Profile Number - click on any 0-9

Then Apply & then Save

for Auto load of Last Open Profile there are a couple of items to check mark to make it work - launch on Reboot & Load previously saved Profile#

You need to select - Both of these:

1) "Start on with OS"

2) "Apply previous settings on load"

WARNING: when trying to Find your best OC, Do Not select the "apply previous settings on load" --> if its an unstable setting you do not want these to load and crash your Rig on restart ---- right

then click: Apply & then Save (these are needed to save changes to a profile, cant remember if its need to save the auto start, have not changed mine in months)

Ah!! Perfect!! Exactly what I was looking for. Thank you so much for such a detailed response. Will try this with my setup. Thank you.

|

Cool GTX

2019/03/12 08:42:13

Happy to Help

Starting from the Scan test value, to see if more is possible & stable ---> make small adjustments to your GPU MHz - maybe 20 MHz at a time & test for stability

Why 20 MHz -- that is 1 Step

From Max OC --> I reduce by 10% for best day to day stability

edit: changed from 13 to 20 MHz


|

sturmbelial

2019/03/12 11:09:29

Cool GTX

Happy to Help

From the Scan test Value to see if more is possible & stable---> Small adjustment to your GPU MHz - maybe 13 MHz at a time & test for stability

Why 13 MHz -- that is 1 Step

From Max OC --> I reduce by 10% for best day to day stability

Gotcha!! Once I've setup the Scan value - I'll attempt this. Thanks much!

|

Sajin

2019/03/12 11:21:58

For Turing cards it's actually 15 MHz steps instead of 13.

|

Cool GTX

2019/03/12 11:43:09

Sajin

For turing cards it's actually 15 mhz steps instead of 13.

Thanks - learned something

Not sure why Nvidia changed from 13 MHz with 10 series cards to 20 MHz for the 20 series cards?


|

Sajin

2019/03/12 11:52:49

Cool GTX

Sajin

For turing cards it's actually 15 mhz steps instead of 13.

Thanks - learned something

Not sure why Nvidia changed from 13 MHz with 10 series cards to 20MHz for the 20 series cards ?

No problem. I'm not sure either.
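The step-and-test routine discussed above (start at the Scan value, go up one step at a time, then back off for daily use) can be sketched as simple arithmetic. This is only an illustration under stated assumptions: it uses the 15 MHz Turing step quoted by Sajin, it assumes the 10% back-off is taken from the offset rather than from the absolute clock, and `is_stable` is a hypothetical stand-in for "played my games at this offset without a crash". None of these function names come from Precision X1.

```python
STEP_MHZ = 15  # Turing step size quoted in the thread; treat as an assumption


def candidate_offsets(scan_offset, steps, step_mhz=STEP_MHZ):
    """Offsets to try in order: the Scan result, then one step higher each time."""
    return [scan_offset + i * step_mhz for i in range(steps + 1)]


def find_max_stable(offsets, is_stable):
    """Return the last offset that passed before the first failure (None if none passed)."""
    best = None
    for offset in offsets:
        if not is_stable(offset):
            break
        best = offset
    return best


def daily_offset(max_stable, margin_percent=10):
    """Back off from the maximum stable offset by a safety margin, rounding down."""
    return max_stable * (100 - margin_percent) // 100


offsets = candidate_offsets(63, 3)  # [63, 78, 93, 108]
# Pretend everything up to +100 MHz survived testing (made-up result for illustration).
best = find_max_stable(offsets, lambda mhz: mhz <= 100)  # 93
print(offsets, best, daily_offset(best))
```

In practice the "is it stable" answer comes from hours of real use, so the loop is a way to keep track of what has been tried rather than something to automate.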

|

sturmbelial

2019/03/12 14:18:01

What is the best way to test/check for stability after overclocking? I'm not interested in Benchmarks & Scores, etc., so my OC'ing will be pretty auto (OC Scanner), plus maybe just a tad more if there's no crashes. System is used for Gaming only and just want a smooth, stable & safe gaming system which doesn't crash.

Thanks for all your responses guys - very helpful!

|

Sajin

2019/03/12 14:21:00

sturmbelial

What is the best way to test/check for stability after overclocking? I'm not interested in Benchmarks & Scores, etc., so my OC'ing will be pretty auto (OC Scanner), plus maybe just a tad more if there's no crashes. System is used for Gaming only and just want a smooth, stable & safe gaming system which doesn't crash.

Thanks for all your responses guys - very helpful!

Testing each of your games with the OC applied would be the best way.

|

Cool GTX

2019/03/12 14:37:02

Sajin

sturmbelial

What is the best way to test/check for stability after overclocking? I'm not interested in Benchmarks & Scores, etc., so my OC'ing will be pretty auto (OC Scanner), plus maybe just a tad more if there's no crashes. System is used for Gaming only and just want a smooth, stable & safe gaming system which doesn't crash.

Thanks for all your responses guys - very helpful!

Test each of your games with the oc applied would be the best way.

Happy to Help

Benchmarks are consistent - therefore you make a change & see what the result was --> Handy even if you are not looking to set a world record

I like to take my Max Stable OC & back those settings down 10% for daily stability

Games can be more sensitive to OC than benchmarks - you won't know till you try


|

ProDigit

iCX Member

- Total Posts : 465

- Reward points : 0

- Joined: 2019/02/20 14:04:37

- Status: offline

- Ribbons : 4

Re: Confused about Clock Speed & Precision X1 Scan.

2019/03/12 15:34:48


I would manually overclock; don't use the tools that do it for you. XOC is excellent for that! Just overclock in small steps, under load, until the thermal and voltage sensors show there's some throttling going on.

1- Boost clock is a temporary speed boost, until your heat sink reaches a specific temperature, usually 60-70C; then it throttles down to stock speeds. You'll never run in full boost mode unless you provide sufficient cooling.

2- Overclocking with XOC or MSI Afterburner is best done during a stress test, not before. This way, you can see the actual GPU speeds. When a GPU isn't ramped up under load it can show anything, from 100-something MHz to full-on boost, including RAM speeds (which usually idle around 850 MHz). It'll also show you when your GPU is throttling or crashing. The goal is to have a nice flatline, without dips or unevenness in temperature, voltage, GPU and VRAM frequency. The higher you increase the frequency and voltage, the higher the chance of instability. You'll have to play around a bit with it; find the sweet spot, and then throttle down by a few percent (safe margin). I wouldn't touch voltage until you've reached max stable overclock, as increasing voltage usually means hotter running cards.

3- The +63 MHz you got is either a safe overclock that can be improved upon, or may be limited by your cooling solution. If your GPU runs at anything over 80C, it's probably a cooling situation, as most of my cards with proper cooling run at between 75 and 77C. I would recommend setting fan speed to 100%, or as high as possible (preferably keeping the temperatures below 83C), and trying to optimize your case for better cooling.

I, for instance, switched from a fan pushing cold air in the front of the case to a larger fan sucking warm air out of the rear of the case, and taped off any holes that would just let cold air into the case in the wrong places. I also taped off holes that could reintroduce warm exhaust air back into the case, and focused on providing air gaps with cool air where the system needs it (usually in the front of the case, in places where cool air would end up near the GPU), with the fans sucking out the hot air in the back and channeling it away from the PC. In some cases, adding a small piece of packaging tape and cardboard in the right place can decrease GPU temperatures by 1-2C. It may not seem like much, but it adds up, and allows me to overclock by a few MHz more.

Most Turing cards can be overclocked by 100-125 MHz; more if you have the option to place your computer in a very cold room (or outside, heck, it is winter). Most speed gains are gotten from overclocking the memory as well. Some cards allow you to overclock the VRAM by as much as 1000 MHz, but most are around 600-700 MHz!

post edited by ProDigit - 2019/03/12 15:43:23
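The "nice flatline" idea in the post above can be checked against logged clock readings. The following is a minimal sketch, not a feature of any of the tools mentioned: the function name `find_dips` and the 3% tolerance are assumptions chosen for illustration, and the sample values are invented.

```python
from statistics import median


def find_dips(clock_samples_mhz, tolerance=0.03):
    """Return indices of samples more than `tolerance` below the median clock."""
    typical = median(clock_samples_mhz)
    floor = typical * (1 - tolerance)
    return [i for i, mhz in enumerate(clock_samples_mhz) if mhz < floor]


# Invented readings taken under load: one sample drops well below the rest.
samples = [2050, 2050, 2035, 1900, 2050, 2050]
print(find_dips(samples))  # [3]
```

A single-step wobble (2035 against 2050 here) stays inside the tolerance, while a larger drop is flagged as a possible throttle event worth checking against temperature and power readings.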

|

sturmbelial

2019/03/13 10:51:26

@Sajin & @Cool GTX,

Thanks for all your help! Made some progress today, but have some questions.

This is what I did:

- Moved Power & GPU Temp Sliders to Max

- Hit "Scan"

- When it finished, "PASS" lit up and the "Score" box said "+66"

- I entered "66" in the "Clock" box for GPU & hit Enter

- Moved the Voltage Slider to Max

- Power & GPU Temp remained at Max so I didn't have to do anything

- Entered "500" in the Clock box for Memory and hit Enter

- Clicked on the number "9" in the 0-9 panel (the number changed color I think)

- Clicked "Apply", then "Save"

- Went to Setup and checked both "Start with OS" and "Apply previous settings on load".

- Clicked "Apply", then "Save"

- Closed Precision X1 & rebooted the system.

Now the Questions:

- After the system rebooted, Precision X1 started up and sat in the Taskbar. So I clicked to launch it.

- At this point, as shown in screenshot below:

- I can see the number "9" is selected/highlighted, therefore I'm assuming profile is loaded.

- I can see the "500" I entered in the Clock Box & the Memory Speed at 7500Mhz

- I can see the Voltage, Power & GPU Temp sliders as Max as I had set them

- I don't see "66" in the Clock Box and only 1350 MHz speed. I want to confirm if this is correct. I'm assuming "+66" has been registered and 1350 MHz is the current clock speed (since no game is being played), and that the +66 will really go into effect when the GPU is being used & stressed, like in gaming. Is that correct?

- In the Setup, I had also set up some options in the OSD section. I didn't touch the "Show in OSD" and "Show in tray icon" color palettes, but I did set the hotkeys. Mainly Ctrl+B to toggle OSD. I didn't touch or select anything else. Left everything else default. Clicked "Apply", then "Save".

However, when I'm playing a game, I press Ctrl+B and nothing happens. I can't get the OSD to show up. Nothing happens when I press Ctrl+G or Ctrl+H either. Can you look at this screenshot and tell me if I'm doing something wrong or missing something?

Appreciate all your help!!

|

Cool GTX

2019/03/13 10:57:49

1) Is "Open minimized" checked?

2) Did you "hit" the Apply button, then Save ---> after entering the value?

---> Highlight the Profile number if it is not already selected

---> Click the GPU box, enter 66, then the Enter key - then the Apply button - then the Save button

3) OSD is a known issue for many unfortunately --- I do not use it myself. Nothing on your screenshot jumps out as missing - sorry

- Did you use Apply & then Save to the Profile? --> try a reboot


|

Sajin

2019/03/13 11:02:58

sturmbelial

@Sajin & @Cool GTX,

Thanks for all your help! Made some progress today, but have some questions.

This is what I did:

- Moved Power & GPU Temp Sliders to Max

- Hit "Scan"

- When it finished, "PASS" lit up and the "Score" box said "+66"

- I entered "66" in the "Clock" box for GPU & hit Enter

- Moved the Voltage Slider to Max

- Power & GPU Temp remained at Max so I didn't have to do anything

- Entered "500" in the Clock box for Memory and hit Enter

- Clicked on the number "9" in the 0-9 panel (the number changed color I think)

- Clicked "Apply", then "Save"

- Went to Setup and checked both "Start with OS" and "Apply previous settings on load".

- Clicked "Apply", then "Save"

- Closed Precision X1 & Reboot the system.

Now the Questions:

- After system rebooted, Precision X1 started up and sat in the Taskbar. So I clicked to launch it.

- At this point, as shown in screenshot below:

- I can see the number "9" is selected/highlighted, therefore I'm assuming profile is loaded.

- I can see the "500" I entered in the Clock Box & the Memory Speed at 7500Mhz

- I can see the Voltage, Power & GPU Temp sliders as Max as I had set them

- I don't see "66" in the Clock Box and only 1350Mhz speed. I want to confirm if this correct. I'm assuming "+66" has been registered and 1350Mhz is the current clock speed (since no game is being played), and that the +66 will really go into affect when the GPU is being used & stressed, like in gaming. Is that correct?

- In the Setup, I had also setup some options in the OSD section. I didn't touch the "Show in OSD" and "Show in tray icon" color palettes, but I did set the hotkeys. Mainly Ctrl+B to toggle OSD. I didn't touch or select anything else. Left everything else default. Clicked "Apply", then "Save".

However, when I'm playing a game, I press Ctrl+B and nothing happens. I can't get the OSD to show up. Nothing happens when I press Ctrl+G or Ctrl+H either. Can you look at this screenshot and tell me if I'm doing something wrong or missing something?

Appreciate all your help!!

Sounds like the software just didn't save some of your settings.

|

sturmbelial

2019/03/13 11:11:03

You are both 100% correct!! The software just did not save my GPU Clock settings for some odd reason. I did Apply & Save in that original try. I just did it again, rebooted the system, and when Precision X1 came up, I see the "66" in the clock box now, along with all of my other settings! Everything is saved & looking good! YAY!!

Now my 2nd issue, which stays unresolved:

The OSD Settings are definitely saved because I see "Ctrl + B" in the hotkey box. But I can't get the OSD to show. Ctrl+B during gameplay does not show me anything anywhere. Is there anything missing here?

Thank you so much!!!

|

sturmbelial

2019/03/13 11:12:34

Cool GTX

1) is Open minimized checked

2) Did you "hit" Apply button, then Save ---> after entering the Value ?

---> Highlight the Profile number if it is Not Already selected

---> Click GPU box, enter 66 then Enter key - the Apply Button -- then Save Button

3) OSD is a know issue for many unfortunately --- I do not use it myself. Nothing on your screen shot jump out as Missing - sorry

- Did you Use Apply & then Save to the Profile --> try a reboot

Yes, Open Minimized is checked. On reboot, it sits in the Task Bar. Ah! So the OSD is a known issue. Hmmm, do you know how I can check FPS & Clock Speed during gameplay then? Thanks!

|

Sajin

2019/03/13 11:22:36

sturmbelial

Hmmm, do you know how I can check FPS & Clock Speed during gameplay then?

Thanks!

Use MSI Afterburner's OSD.

Note: There won't be any interference between the two programs if you run both X1 and MSI Afterburner at the same time, as long as you only use Afterburner for the OSD.

post edited by Sajin - 2019/03/13 11:25:42

|

sturmbelial

2019/03/13 14:29:00

Sajin

sturmbelial

Hmmm, do you know how I can check FPS & Clock Speed during gameplay then?

Thanks!

Use msi afterburners osd.

Note: No there won't be any interference between the two programs if you run both x1 and msi afterburner at the same time as long as you only use afterburner for the osd.

Only thing I don't like about that is adding an additional app to the environment. Was hoping for a one-stop-shop. The issue here is that MSI Afterburner uses the exact same OC Scanner API which Precision X1 does. Neither is better or worse. The same auto-overclocking I was able to achieve in Precision X1, I can do with MSI Afterburner.

The difference here is that Afterburner will also do the OSD, which Precision X1 seems to be failing at currently (may be fixed with future release). I was hoping to use Precision X1 as it's an EVGA app and my card is EVGA. Same reason I was using ASUS GPU Tweak II because my GTX 1080 was ASUS ROG STRIX.

If I have to install Afterburner/RTSS, I may as well use that OC Scanner and duplicate my effort in that, so I have a one-stop-shop for OC & OSD.

Wasn't aware OSD in Precision X1 was bugged and known currently.

|

Sajin

2019/03/13 15:09:28

Precision has always had issues with its OSD.

|

Cool GTX

2019/03/13 15:43:55

sturmbelial

Only thing I don't like about that is adding an additional app to the environment. Was hoping for a one-stop-shop. The issue here is that MSI Afterburner uses the exact same OC Scanner API which Precision X1 does. Neither is better or worse. The same auto-overclocking I was able to achieve in Precision X1, I can do with MSI Afterburner.

The difference here is that Afterburner will also do the OSD, which Precision X1 seems to be failing at currently (may be fixed with future release). I was hoping to use Precision X1 as it's an EVGA app and my card is EVGA. Same reason I was using ASUS GPU Tweak II because my GTX 1080 was ASUS ROG STRIX.

If I have to install Afterburner/RTSS, I may as well use that OC Scanner and duplicate my effort in that, so I have a one-stop-shop for OC & OSD.

Wasn't aware OSD in Precision X1 was bugged and known currently.

1) The Scan Tool is from Nvidia, that is why they are the same

2) AB can't control more than 1 fan

So if you go the AB route - set a static fan speed in X1 & "save", then close X1

Set all fans to the same speed for best sound quality @ the highest RPM you do not mind

3) There is history between the AB coder & EVGA - possibly intellectual rights issues now. After they parted ways it is My Understanding - EVGA had to go back to square 1 & reinvent the wheel -- being careful to avoid using previous code. I have read a lot of different things & this is My understanding - nowhere did I find such a condensed version of the OSD "issue". So, hey, I could be wrong


|

sturmbelial

2019/03/13 15:56:33

Cool GTX

sturmbelial

Only thing I don't like about that is adding an additional app to the environment. Was hoping for a one-stop-shop. The issue here is that MSI Afterburner uses the exact same OC Scanner API which Precision X1 does. Neither is better or worse. The same auto-overclocking I was able to achieve in Precision X1, I can do with MSI Afterburner.

The difference here is that Afterburner will also do the OSD, which Precision X1 seems to be failing at currently (may be fixed with future release). I was hoping to use Precision X1 as it's an EVGA app and my card is EVGA. Same reason I was using ASUS GPU Tweak II because my GTX 1080 was ASUS ROG STRIX.

If I have to install Afterburner/RTSS, I may as well use that OC Scanner and duplicate my effort in that, so I have a one-stop-shop for OC & OSD.

Wasn't aware OSD in Precision X1 was bugged and known currently.

1) the Scan Tool is From Nvidia, that is why they are the same

2) AB can't control more than 1 Fan

So if you go AB route - set a Static fan speed in X1 & "save" then close X1

Set all fans to same speed for best sound quality @ highest RPM you do not mind

3) There is history between the AB coder & EVGA - possibly intellectual rights issues now. After they parted ways it is My Understanding - EVGA had to go back to square 1 & reinvent the wheel -- being careful to avoid using previous code. I have read a lot of different things & this is My understanding - nowhere did I find such a condensed version of the OSD "issue" So, hey I could be wrong

Wow! I did not know about all that. The history is very interesting!! I'm sure the OSD issue will be resolved at some point in future releases.

Hmm, after reading about the fan issue, now I'm not so sure about AB. And I really dislike its GUI. Considering going with HWInfo/RTSS for the OSD. Looking into that possibility and keeping Precision X1 for OC. Not really sure right now.

Question for you along that line - say tomorrow a new version of Precision X1 is released. Whenever I install a new version, will I need to repeat the OC steps from start to finish?

Thanks for all your input. This has been very helpful & informative, and I loved that little history note.

|

Cool GTX

2019/03/13 17:05:15

When software OR drivers are changed it would be a good idea to "confirm stability" with your past OC.

Though your past OC should work ---> there are no guarantees

It is always a bigger issue for new OC members when they upgrade a GPU .... & they swear the GPU is a dud ... Sorry, you need to go back & confirm your MB/CPU/RAM OC is still stable with the new GPU installed

Hey, sometimes pushing the BCLK will help benchmark runs & OC in general --> but you are also messing with the PCIe Bus speed

Small steps - test it several times, make sure the temps are stable & then make your next adjustment .... repeat

Or just use the Scan tool's number if you need to make a quick adjustment. Then move to the RAM on your graphics card ..... adjust / test

GL & Have Fun with it


I am a Volunteer Moderator - not an EVGA employee


When someone does not use reason to reach their conclusion in the first place; you can't use reason to convince them otherwise!

|

sturmbelial

New Member

- Total Posts : 18

- Reward points : 0

- Joined: 2019/02/25 17:42:21

- Status: offline

- Ribbons : 0

Re: Confused about Clock Speed & Precision X1 Scan.

2019/03/18 07:27:32


Cool GTX

When software OR drivers are changed, it would be a good idea to "confirm stability" with your past OC.

Though your past OC should work ---> there are No guarantees

It is always a bigger issue for new OC members when they Upgrade a GPU .... & they swear the GPU is a dud ... Sorry you need to go back & confirm your MB/CPU/RAM OC is still stable with the new GPU installed

Hey, sometimes pushing the BCLK will help benchmark runs & OC in general --> but you are also messing with the PCIe Bus speed

Small steps - test it several times, make sure the Temps are stable & then make your next adjustment .... repeat

Or just use the Scan tool's number if you need to make a quick adjustment. Then move to the RAM on your graphics card ..... adjust / test

GL & Have Fun with it

Good Morning,

I did get everything set up & did some testing this weekend. I did in fact set up HWInfo/Riva Tuner Statistics Server for OSD (since Precision X1 clearly does have a known issue); however, I could not get the Toggle Hotkey to work. I tried all sorts of combinations but nothing worked. I also ended up finding the customization options a little too involved for my taste, so ultimately I uninstalled both and reverted to Sajin's advice of installing MSI Afterburner/RTSS for OSD only. It works perfectly fine right out of the box, so I'm all set for my OSD.

OC'ing with Precision X1 seems to be working really well, I'm happy to report. Memory clock shows a consistent & stable 7500 MHz (with my +500). GPU clocks are hovering around 2050 MHz (with my +66), which exceeds the boost clock of 1755 MHz for my card by a mile. GPU temperature hovers around 52 degrees. The max I saw it reach after hours of gaming was 57 degrees, for a short period only.

I'm getting a consistent & stable 60 FPS on my 3840 x 2160 (4K) display with all settings (except AA) fully maxed out to Ultra on all the games I attempted. This is with VSYNC "ON" because unfortunately I get screen tearing on my display (TCL 6 Series 55" UHD/HDR/Dolby Vision 55R617). If I turn VSYNC "OFF", FPS jumps to 75 - 85, but I can't play with screen tearing so I have to settle for 60 FPS for now (until I upgrade my TV).

I probably don't have any need for pushing the OC any further since I'm getting the results I wanted. If I did wish to play with it a little further, you had suggested increasing in single steps of 15 MHz. Is my understanding correct that every "+1" in the Clock box is equal to one step, that is, 15 MHz? So would I go about increasing the +66 to +67 and so forth?

Thanks much for your help!!

|

Sajin

EVGA Forum Moderator

- Total Posts : 49227

- Reward points : 0

- Joined: 2010/06/07 21:11:51

- Location: Texas, USA.

- Status: online

- Ribbons : 199

Re: Confused about Clock Speed & Precision X1 Scan.

2019/03/18 11:28:18


Each step is 15 MHz. An offset of +1 to +14 will do nothing.
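
To make the step behaviour concrete, here is a minimal Python sketch. It assumes the offset you type is rounded down to the nearest multiple of 15 MHz. That fits "+1 to +14 does nothing", but the rounding direction is my assumption, not something confirmed by EVGA or Nvidia. The name `effective_offset` is made up for illustration.

```python
STEP_MHZ = 15  # step size quoted in this thread


def effective_offset(requested_mhz: int, step: int = STEP_MHZ) -> int:
    """Round a non-negative clock offset down to a whole number of steps.

    Rounding down is an assumption; the card may bin offsets differently.
    """
    return (requested_mhz // step) * step


# Compare what is typed into the Clock box with what would apply.
for offset in (14, 15, 66, 67, 75):
    print(f"+{offset} typed -> +{effective_offset(offset)} applied")
```

Under that assumption, +66 and +67 would behave the same as +60, and the next real change would come at +75.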

|

ProDigit

iCX Member

- Total Posts : 465

- Reward points : 0

- Joined: 2019/02/20 14:04:37

- Status: offline

- Ribbons : 4

Re: Confused about Clock Speed & Precision X1 Scan.

2019/03/18 17:11:17


Next thing is to lower power consumption.

It is best to leave the voltage slider at 0, instead of turning it up all the way.

Not that it does the card any damage; it just makes it run a lot hotter, until the software dials down the voltage anyway.

I myself set the slider to 0, occasionally to 25 or 33%, just to get a specific frequency working, as some overclocks jump between voltages, and thus between frequencies as well.

The voltage slider is to provide a more consistent GPU speed.

Increasing it will only generate more heat, and is only recommended if you want to max out your performance (provided you have sufficient cooling).

Also, your power slider is set to 124%, but the card only registers 66W usage.

I presume that you took a screenshot when the card was idling, or near to idling?

Under load the numbers might look different.

Do a GPU stress test (like Furmark, or 3DMark, Futuremark, or whatever), while you keep the X1 HWM on top.

It'll show you what your card is doing.

I usually look at GPU frequency and temps, RAM frequency, and GPU voltage (4 charts).
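
If you would rather log those numbers than watch charts, here is a rough Python sketch that reads them from `nvidia-smi`, the command-line tool that ships with the Nvidia driver. It is not part of Precision X1, and the helper names `parse_sample` and `read_sample` are made up for illustration. Voltage is not included in this query, so it covers GPU clock, memory clock, temperature and power draw only, and the memory clock it reports may not match the figure X1 shows.

```python
import subprocess

# Query fields understood by nvidia-smi's --query-gpu option.
FIELDS = ("clocks.gr", "clocks.mem", "temperature.gpu", "power.draw")


def parse_sample(line: str) -> dict:
    """Turn one CSV line such as '2050, 7500, 52, 250.31' into a dict of floats.

    Assumes every field is numeric; a field reported as N/A would raise here.
    """
    values = [v.strip() for v in line.split(",")]
    return dict(zip(FIELDS, (float(v) for v in values)))


def read_sample() -> dict:
    """Ask nvidia-smi for the current readings of the first GPU."""
    out = subprocess.run(
        ["nvidia-smi",
         f"--query-gpu={','.join(FIELDS)}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_sample(out.splitlines()[0])
```

Calling `read_sample()` once a second in a loop while the stress test runs gives you a simple log of what the card is doing under load.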

|