The-Hunter

Superclocked Member

- Total Posts : 233

- Reward points : 0

- Joined: 2009/03/02 11:22:57

- Status: offline

- Ribbons : 1

Re:SR2 with PCIe Drives and SLI

2010/04/02 17:20:38

EVGATech_NickM

Sorry, but we tested in-house with a couple of waterblock cards and a couple of RAID controllers on the SR-2, and it will not fit.

The Hydro Copper cards are slightly larger than a single slot, starting where the vreg heatsink starts. A shorter card like a network adapter or sound card will fit, but a longer card like the Areca controllers (tested with a 1210 and a 1231) or a longer GPU will touch the vreg heatsink on the Hydro Copper cards. Cards can be about 6 inches long before they make contact with the vreg heatsink.

Thank you, NickM. Are you talking about this one? GTX 480 on water: they do look "slim", but I see the heatsink bulge out a bit, aye. Why not make the next one a full copper plate, like this: A solution could be this concept:

If the width of the heatsink part of the EVGA cooler is the issue, why not remove the heatsink and send all the heat to the GPU block? Or do we need that heatsink there, because there is more heat than just the VRMs' that needs to be removed under that area?

If that won't work for us, we have a full-cover block in the making here. When we get those replies and the full 512-shader version of the Fermi card is out, I will go shopping. Also, please pay attention to this post: http://www.xtremesystems.org/forums/showpost.php?p=4303400&postcount=24 from http://www.xtremesystems.org/forums/showthread.php?t=247891

And while we are talking about water cooling parts, note this news from a pretty well-trusted source in that community on the topic of what is the best CPU block "right now". Note: you need to swap an internal part to get that performance.

Cosmos II water cooled, EVGA SR-X, Intel E5-2687W x2, EVGA Titan Black Hydrocopper Signature x3, 1 x Dell 30" 308WFP, 96GB 1600MHz RAM, Creative XB-X-FI, 256GB OCZ SSD, storage controller: Areca 1222 in RAID 0 with 3 x 2TB Seagate HDs, EVGA 1500W PSU

|

The-Hunter

Superclocked Member

- Total Posts : 233

- Reward points : 0

- Joined: 2009/03/02 11:22:57

- Status: offline

- Ribbons : 1

Re:SR2 with PCIe Drives and SLI

2010/04/02 17:34:13

estwash

Hunter, thx for the words :) my family appreciates it.

Originally, I was going to go straight folding with a 4-way board and C1060s... 1 C1060 makes an amazing difference in Adobe work; unless people use it, there's really no way to show the CUDA benefits.

But once I saw the SR-2 was coming, and since I have already budgeted for an MM case for folding, I am going to go the SR-2 route instead of the 4-way Classified board. Here's my planned setup:

PCIe 1 & 2: GTX 480 (or wait until summer brings a 485 with the full core enabled)

PCIe 3 & 4: same

PCIe 5 & 6: same

PCIe 7: C2050 (if it's out in summer; otherwise take the C1060 from my other system and use it in the meantime)

I wanted to put the RAID card in the freed-up slot 6 (with water-cooled GPUs)...

I was hoping to use SLI for personal and work use, and since the Fermi 400 series is CUDA-based, I hoped the GPUs could also serve as GPGPUs alongside the Tesla C1060 (or the upcoming C2050)...

I am persuaded at this point that it's going to be functional and beneficial to use the 6th slot for the RAID card while water-cooling the GPUs. The Tesla card gets hot, but I don't think water-cooling is an issue there.

My guilty question, seriously, is about being able to run Folding@home in the background and still play my games live in SLI across 3 monitors.

I'm looking forward to Adobe's upgrades and Fermi's support of them for work, I'm anxious to see the high quality of gaming on high-res monitors, and I hope none of that hinders the Folding@home activity. DO I NEED TO interrupt anything to "game" and "work" while using the system? I'm hoping not, but if so, I'll leave this system for folding, keep my ASUS system for gaming/work, and just upgrade 1 GPU... if I have to.

thx

I game on 1 GPU and run folding on 2 sometimes. My simple logic on that question is: if it works for me, why should it not work for you? The Tesla card gets hot, sure; water-cool it. I don't use SLI much when doing this, though; I actually seldom run SLI. I only use it on things I find 100% stable, and so far I can count those on one hand, sadly. I have always been a fan of a single GPU per card, and after my ATI 4870 X2 experience I won't go back to a dual-GPU setup on one card anytime soon. If I don't find the applications I want to run over SLI stable across the board, I won't SLI them. I just can't have my machine die on me, with all the tasks that run on it. I much prefer having it all on one machine, such as my setup, versus a separate server for my VMware work jobs. Games are fun, but my work jobs are much more important.

I believe NVIDIA made the right choice when they designed the Fermi chip. They produced a monster chip so we could have 1 single chip versus two. We won't see its full potential until the full set of shaders in the chip is enabled. At that stage, I don't understand why you need a separate folding GPU; it would be the same chip.
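For the "game on one GPU, fold on the others" setup discussed here, one way to keep a background compute job off the gaming card is to limit which GPUs it can see. A minimal sketch, assuming the folding client is a CUDA program that honors the CUDA runtime's `CUDA_VISIBLE_DEVICES` environment variable; the client command is a placeholder, and the helper names are hypothetical.

```python
import os
import subprocess


def folding_env(gpu_ids):
    """Copy of the current environment that exposes only `gpu_ids` to CUDA."""
    env = dict(os.environ)
    # The CUDA runtime hides every device not listed in this variable.
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    return env


def launch_folding(cmd, gpu_ids):
    """Start a (placeholder) folding command restricted to `gpu_ids`."""
    return subprocess.Popen(cmd, env=folding_env(gpu_ids))


# Leave CUDA device 0 for games; fold on devices 1 and 2.
# launch_folding(["folding_client"], [1, 2])  # hypothetical command name
```

Note that the indices are CUDA's device numbering, which is not necessarily the same as the physical slot order.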

|

estwash

New Member

- Total Posts : 10

- Reward points : 0

- Joined: 2010/02/19 09:15:53

- Status: offline

- Ribbons : 0

Re:SR2 with PCIe Drives and SLI

2010/04/06 13:40:24

Hunter, sorry for the delay, got back this morning. I appreciate the follow-up! Both in contacting Spotswood for me (through email, he's going to help with my case inquiries :) ) and in the GPU and SLI questions.

I am actually considering a single GPU, since I'm still not using anything triple-monitor-worthy... my 30" high-res monitor is more than enough wow factor for the high quality of Fermi's GTX 480. I think at most a 2-way setup might be needed to max out games, but that's mostly beyond anything I currently play... so maybe that would be the limit.

Also, since the EVGA tech guy says intelligent RAID cards (the longer ones) won't fit even between water-cooled GPUs, I'd be forced to abandon my add-on RAID, which I can't do... so at most, 2 Fermis and 1 Tesla with my RAID card, OR 2 Teslas for GPGPU and 1 Fermi with RAID... most likely the latter. I use a C1060 already, but am anxious for the C2000 series, with more than double the core potential at less than 2x the C1060's heat and power... they get way hot, but I haven't seen any waterblocks for them thus far.
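The fit question boils down to two rules: cards can't share slots, and, per the EVGA tech's post quoted earlier in the thread, a card in the slot next to a Hydro Copper card must be under about 6 inches (~152 mm) long. A minimal sketch of a slot-plan checker under those assumptions; the `Card` fields, the direction the heatsink bulges, and the card lengths are all hypothetical, not measured values.

```python
from dataclasses import dataclass

NUM_SLOTS = 7            # SR-2 expansion slots, numbered top to bottom
VRM_CLEARANCE_MM = 152   # ~6 inches, the figure quoted from the EVGA tech


@dataclass
class Card:
    name: str
    slot: int                   # topmost slot the card occupies
    width: int = 1              # slots physically covered (2 = double-width cooler)
    length_mm: int = 0          # board length; values used below are hypothetical
    hydro_copper: bool = False  # water-cooled card with the protruding VRM heatsink


def check_plan(cards):
    """Return a list of human-readable problems with a slot plan."""
    problems = []
    covered = {}
    for card in cards:
        for s in range(card.slot, card.slot + card.width):
            if s > NUM_SLOTS:
                problems.append(f"{card.name} hangs past slot {NUM_SLOTS}")
            elif s in covered:
                problems.append(f"{card.name} collides with {covered[s]} at slot {s}")
            else:
                covered[s] = card.name
    # ASSUMPTION: the VRM heatsink bulges toward the next slot down.
    by_slot = {card.slot: card for card in cards}
    for card in cards:
        if card.hydro_copper:
            below = by_slot.get(card.slot + card.width)
            if below and below.length_mm > VRM_CLEARANCE_MM:
                problems.append(
                    f"{below.name} is too long to clear {card.name}'s VRM heatsink"
                )
    return problems


plan = [
    Card("GTX 480 #1", 1, hydro_copper=True),
    Card("GTX 480 #2", 3, hydro_copper=True),
    Card("GTX 480 #3", 5, hydro_copper=True),
    Card("RAID card", 6, length_mm=250),  # hypothetical full-length controller
]
print(check_plan(plan))
```

With these made-up numbers, the only problem reported is the long RAID card in slot 6 sitting under the Hydro Copper card in slot 5, which is the conflict the EVGA tech described.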

Thanks much!! While I'm perhaps not a true gamer, and am a bit too willing to trade SLI for my Teslas and RAID, I STILL VALUE and want what the Fermi-based NVIDIA 400 series GPUs will offer! So I'm excited to finish the build some day soon and put it to use.

I won't be able to return to the forums for a couple of weeks, but will check in to follow the replies once I get home... be good! thx :) And thanks again for letting Spotswood know!

|

The-Hunter

Superclocked Member

- Total Posts : 233

- Reward points : 0

- Joined: 2009/03/02 11:22:57

- Status: offline

- Ribbons : 1

Re:SR2 with PCIe Drives and SLI

2010/04/06 14:47:08

Hey m8, please see the full-cover water block in production that is linked in my post above (and here again). It solves the RAID issue 100%, with no further modifications needed to place that block, or potentially any other full-cover water block of similar design, right beside an Areca RAID card.

|

napster69

New Member

- Total Posts : 10

- Reward points : 0

- Joined: 2009/03/02 07:37:52

- Status: offline

- Ribbons : 0

Re:SR2 with PCIe Drives and SLI

2010/04/12 21:17:33

slot 1=OCZ Z Drive R2

slot 2=OCZ Z Drive R2

slot 3=GTX 480/ Danger Den wb

slot 4=Areca Raid Card

slot 5=GTX 480/ Danger Den wb

slot 6=Creative X-Fi

slot 7=GTX 480/ Danger Den wb

|

dreamofash

New Member

- Total Posts : 7

- Reward points : 0

- Joined: 2010/03/18 05:28:59

- Status: offline

- Ribbons : 0

Re:SR2 with PCIe Drives and SLI

2010/04/13 04:29:14

A question about the block diagram of the PCIe slots: is the NF200 connected like this? I want to make this clear so that I won't saturate the original X16 bandwidth. That's why I need to know how all the slots are connected to each of the NF200 chips. If the diagram below looks right, I want to use slot 7 for graphics, reserve slots 5 & 6, and use the other 4 slots for other cards up to X16.

    X16 -- NF200 --+-- X16 (through NF200) --+-- X16 (X8) SLOT 1
                   |                         |
                   |                         +-- X8 SLOT 2
                   |
                   +-- X16 (through NF200) --+-- X16 (X8) SLOT 3
                                             |
                                             +-- X8 SLOT 4

    X16 -- NF200 --+-- X16 (through NF200) --+-- X16 (X8) SLOT 5
                   |                         |
                   |                         +-- X8 SLOT 6
                   |
                   +-- X16 (through NF200) ------ X16 SLOT 7

I wonder if EVGA is going to publish a block diagram for all the chips/slots on the board. That would really help...
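The concern here is whether the cards behind one NF200 can together ask for more than its single x16 uplink. A minimal sketch, assuming the topology in the diagram above is correct (it is the poster's guess, not a published EVGA diagram) and that each X16(X8) slot pair runs x16 with one card and x8/x8 with two. It sums the downstream lanes in use behind each NF200 and divides by the x16 uplink, so a ratio above 1.0 means those cards share the uplink when they all transfer at once. The `SWITCHES` table and function names are hypothetical.

```python
# ASSUMPTION: topology copied from the diagram above (unconfirmed by EVGA).
SWITCHES = {
    "NF200 #1": {"uplink_lanes": 16, "branches": [(1, 2), (3, 4)]},
    "NF200 #2": {"uplink_lanes": 16, "branches": [(5, 6), (7,)]},
}


def branch_lanes(branch, populated):
    """Electrical lanes used on one x16 downstream branch of an NF200."""
    used = [slot for slot in branch if slot in populated]
    if not used:
        return 0
    # ASSUMPTION: one card gets x16, two cards split to x8/x8.
    return 16 if len(used) == 1 else 8 * len(used)


def oversubscription(populated):
    """Downstream lanes in use divided by uplink lanes, per NF200."""
    return {
        name: sum(branch_lanes(b, populated) for b in sw["branches"]) / sw["uplink_lanes"]
        for name, sw in SWITCHES.items()
    }


# The proposed plan: graphics in slot 7, slots 5 & 6 empty, other cards in 1-4.
print(oversubscription({1, 2, 3, 4, 7}))  # {'NF200 #1': 2.0, 'NF200 #2': 1.0}
```

Under these assumptions slot 7 gets its NF200's uplink to itself, while the four cards in slots 1-4 share one x16 uplink between them.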

|

Trogdoor2010

Superclocked Member

- Total Posts : 235

- Reward points : 0

- Joined: 2010/03/31 06:45:24

- Status: offline

- Ribbons : 0

Re:SR2 with PCIe Drives and SLI

2010/04/13 10:03:48

Considering how all-out they went with this board, creating an entirely new form factor and all, I wonder why they didn't just put in an 8th PCIe slot. Also, the OCZ Z-Drive R2 looks really freaking awesome. I just hope you can fill all the modular slots with smaller, lower-cost, lower-capacity NAND flash modules and still maintain the same speed. I only NEED about 160GB for the OS/program drive (as per my current needs), but filling all the slots should allow for the full RAID 0 across all the chips and therefore max speed. SLC chips should also be available for faster speeds. Also, it would be awesome if in the R3 they built in a slot or two for an ECC RAM module; that way we could have up to a 4GB buffer. Just imagine how fast that would be for file transfers to the drive...
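The reasoning that filling every module slot gives "the full RAID 0 across all the chips" follows from how striping works: consecutive stripe units are dealt round-robin across the members, so a large transfer is split over all of them at once. A minimal sketch of generic RAID 0 address mapping; the member count and stripe size are hypothetical, and it is not a description of how the Z-Drive's own controller actually lays out data.

```python
def stripe_location(lba, members, stripe_blocks):
    """Map a logical block to (member index, block offset on that member) in RAID 0."""
    unit = lba // stripe_blocks        # which stripe unit the block falls in
    member = unit % members            # stripe units rotate round-robin
    row = unit // members              # complete rows of units before this one
    offset = row * stripe_blocks + lba % stripe_blocks
    return member, offset


def members_touched(start_lba, count, members, stripe_blocks):
    """Set of members a contiguous transfer of `count` blocks lands on."""
    return {
        stripe_location(b, members, stripe_blocks)[0]
        for b in range(start_lba, start_lba + count)
    }


# A 512-block read with 128-block stripe units (hypothetical sizes):
print(members_touched(0, 512, 4, 128))  # four members: all four work in parallel
print(members_touched(0, 512, 2, 128))  # two members: each serves two units in turn
```

In this model the parallelism depends on how many members a transfer spans, not on each member's capacity, which is the crux of the question about using smaller modules in every slot; real per-module speed still depends on the flash used.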

System Specs: Core 2 Quad Q9450 2.66GHz, XFX 780i motherboard, 4GB RAM, ZOTAC GTX 480 1536MB graphics card, GeForce 9400 1024MB graphics card (for tri-screen action), 700W power supply, Windows 7, 160GB HD (OS + programs), 750GB HD (short-term backup), 2TB JBOD array (long-term storage). Bottleneck? WAT!? :p

|

The-Hunter

Superclocked Member

- Total Posts : 233

- Reward points : 0

- Joined: 2009/03/02 11:22:57

- Status: offline

- Ribbons : 1

Re:SR2 with PCIe Drives and SLI

2010/05/25 15:32:49

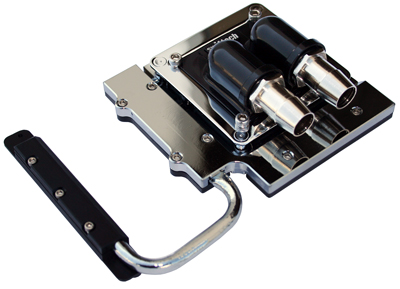

This little monster is out at the end of June: the ARC-1880ix-24, probably the fastest thing on the planet when it's finally shipping. Let's hope both the EVGA PSU and the SR-2 mobo are shipping by then.

|

doorules

CLASSIFIED Member

- Total Posts : 4148

- Reward points : 0

- Joined: 2007/12/18 02:08:14

- Location: Newfoundland

- Status: offline

- Ribbons : 21

Re:SR2 with PCIe Drives and SLI

2010/05/28 02:53:00

Still not seeing the need for multiple RAID cards; you can have multiple arrays working off the same card. I have 3 GTX 470s in x16 slots with an LSI 9260-8i in the last x16 slot, and it runs great. There's no way I have oversaturated the mobo bandwidth either: both the GPUs and the SSDs are running at spec speeds without issue. I have overclocked the heck out of the GPUs and still have not seen any performance loss from bandwidth issues... and the 9260-8i can handle the newer 6 Gb/s SSDs once they become more mainstream.
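The point that one controller can carry several arrays can be sanity-checked with nominal link arithmetic. A minimal sketch, assuming the 9260-8i's PCIe 2.0 x8 host interface (5 GT/s per lane) and 8 drive ports at 6 Gb/s, both using 8b/10b encoding; the per-drive SSD speed is a hypothetical input, and real throughput lands below these nominal ceilings.

```python
def link_mb_s(gt_per_s, lanes=1):
    """Nominal usable MB/s per direction for an 8b/10b-encoded serial link."""
    # 8b/10b: 8 data bits per 10 line bits; then divide by 8 for bits -> bytes.
    return gt_per_s * 1000 * lanes * 8 / 10 / 8


SATA_6G_PORT = link_mb_s(6)         # 600.0 MB/s per 6 Gb/s port
HOST_LINK = link_mb_s(5, lanes=8)   # PCIe 2.0 x8: 4000.0 MB/s


def array_ceiling(drive_mb_s, drives):
    """Nominal sequential ceiling: each drive capped by its port, total by the host link."""
    per_drive = min(float(drive_mb_s), SATA_6G_PORT)
    return min(per_drive * drives, HOST_LINK)


print(array_ceiling(250, 8))  # eight hypothetical 250 MB/s SSDs
print(array_ceiling(600, 8))  # drives at the full port rate hit the host link
```

With eight hypothetical 250 MB/s SSDs the nominal ceiling is 2000 MB/s, half of the x8 host link; only drives near the full 600 MB/s port rate would run into the 4000 MB/s host-link limit.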

post edited by doorules - 2010/05/28 04:43:18

|

kelemvor

iCX Member

- Total Posts : 331

- Reward points : 0

- Joined: 2006/05/21 13:55:22

- Location: Largo, FL

- Status: offline

- Ribbons : 0

Re:SR2 with PCIe Drives and SLI

2010/05/28 08:45:14

Just thought I'd throw this out there. Have you given any consideration to 15K RPM SAS drives like the Seagate Savvio? Based on what I've read, I'd expect them to outperform SSDs in many situations.

Our enterprise standards division requires that I use RAID 5 rather than RAID 1 or 10 on server builds, so I can't provide any detail about performance as it "should" be.

We use multiple RAID cards in all of our servers, primarily for redundancy. Like all electronics, RAID controllers do fail sometimes. You don't want to risk losing data over an HDC (hard disk controller) failure (well, depending on how valuable your data is, how volatile the change rate is, and how well you can tolerate downtime to recover from a backup if that's what you choose to do).

|

bucyrus5000

CLASSIFIED Member

- Total Posts : 3153

- Reward points : 0

- Joined: 2010/10/30 22:58:36

- Location: In a van, down by the river

- Status: offline

- Ribbons : 8

Re:SR2 with PCIe Drives and SLI

2010/11/02 08:09:48

Can I run 2 Quadro 5000s in SLI, a Tesla C2070 GPU Computing Module, and an OCZ RevoDrive X2 PCIe SSD?

Rant about what Grinds Your Gears HERE! Need help finding places to shop for computer goods? Find stores HERE and review them.

|

sam nelson

iCX Member

- Total Posts : 365

- Reward points : 0

- Joined: 2014/01/06 21:07:07

- Status: offline

- Ribbons : 9

Re:SR2 with PCIe Drives and SLI

2018/01/15 05:37:35

I have something you might like. I will send you a PM.

|