merc.man87

FTW Member

- Total Posts : 1289

- Reward points : 0

- Joined: 2009/03/28 10:20:54

- Status: offline

- Ribbons : 6

As always, let's try to keep this as clean and professional as possible, and of course provide links for your sources if you need to back something up. I'll start: I am not very familiar with the "architecture" of GPUs and exactly how they work. That is my bad; most of my knowledge comes from reputable hardware review websites, and yes, I have over 50 websites bookmarked just for that so I can get an unbiased look and review. So I was searching around for a better explanation of the different ways Nvidia and ATI handle tessellation, and I ran across this post. Let's discuss the information it provides and correct any fallacies that may be present for the community.

"Hi there. I'm no expert on this matter, and would not mind further clarification. But mixing facts with personal guesses I came to the following conclusion:

What people are calling the tessellator on Direct3D 11 compatible hardware is in fact three different things: 1. the Hull Shader, 2. the Tessellator, 3. the Domain Shader.

To achieve the effect of tessellated geometry you have to use all three stages in the pipeline. The hardware tessellator in the ATI card is only item number 2, which sits between two new programmable shader stages. There is not enough info on the Nvidia card to be sure whether it really has a hardware tessellator or whether that stage is also executed on the programmable cores in software, as was implied until recently by Charlie.
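A rough way to picture the three stages is the CPU-side Python sketch below. It is not real HLSL, and everything in it (the function names, the distance-based factor rule, the flat patch, the sine displacement) is made up for illustration; it says nothing about how any actual GPU or driver implements these stages.

```python
import math

def hull_stage(distance_to_camera, max_factor=16):
    """Programmable stage 1: pick a tessellation factor for a patch.
    Hypothetical rule: closer patches get more subdivisions."""
    factor = int(max_factor / max(distance_to_camera, 1.0))
    return max(1, min(max_factor, factor))

def tessellator_stage(factor):
    """Fixed-function stage 2: emit (u, v) sample points on the unit square.
    It knows nothing about the mesh, only the subdivision factor."""
    steps = [i / factor for i in range(factor + 1)]
    return [(u, v) for v in steps for u in steps]

def domain_stage(corners, uv, height=0.1):
    """Programmable stage 3: turn one (u, v) point into a 3D position.
    Bilinear interpolation of the corners plus an invented displacement."""
    p00, p10, p01, p11 = corners
    u, v = uv
    pos = [
        (1 - u) * (1 - v) * a + u * (1 - v) * b + (1 - u) * v * c + u * v * d
        for a, b, c, d in zip(p00, p10, p01, p11)
    ]
    pos[2] += height * math.sin(math.pi * u) * math.sin(math.pi * v)
    return tuple(pos)

def tessellate_patch(corners, distance_to_camera):
    """Run the three stages in order for a single quad patch."""
    factor = hull_stage(distance_to_camera)
    return [domain_stage(corners, uv) for uv in tessellator_stage(factor)]

flat_patch = ((0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0))
near = tessellate_patch(flat_patch, distance_to_camera=1.0)
far = tessellate_patch(flat_patch, distance_to_camera=20.0)
print(len(near), len(far))  # the near patch gets far more vertices than the far one
```

The point of the sketch is only the division of labour: the first and last functions contain logic a developer would write, while the middle one is a fixed recipe driven by a single number.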

Seeing performance drop in those benchmark graphs when mesh tessellation is turned up is not incompatible with the idea that the tessellator on the ATI card is working according to spec, and I'll try to explain why.

The main objective of using the tessellator is to avoid heavy vertex interpolation on animated meshes at joints with lots of bones, which are needed for realistic animation. The same applies to vertex interpolation when doing mesh morphing with lots of weights in facial animation. Those are heavy calculations that would get heavily multiplied when using finer meshes with much more fine detail. It's something that is not linear: doubling the vertex count in both the U and V directions on a square patch would roughly quadruple the number of vertices that need to be processed at later stages. This would become a bottleneck for several reasons: a) when meshes are far away, they do not need all that detail; b) most of the detail needed is on the silhouette of close-up objects. For the majority of triangles/quads facing the viewer, all those vertices generated by the tessellator or by using finer-detail meshes would be "lost" as redundant and unnecessary, taxing the geometry processor at the following stage. So something had to be devised, and the tessellator is the needed solution to scale better into the future.
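A quick back-of-the-envelope check of that scaling, assuming a regular grid patch (the helper name is invented for this example): the vertex count goes with the product of the U and V resolutions, so doubling both approaches a 4x increase as the grid gets finer.

```python
def grid_vertices(u_segments, v_segments):
    """Number of vertices in a regular grid patch with the given segment counts."""
    return (u_segments + 1) * (v_segments + 1)

for segments in (8, 64):
    base = grid_vertices(segments, segments)
    doubled = grid_vertices(2 * segments, 2 * segments)
    # The ratio climbs towards 4 as the grid gets finer.
    print(segments, base, doubled, round(doubled / base, 2))
```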

Having said that, this does not necessarily mean that using the "tessellator" (all 3 stages of it) to tessellate a coarser geometry in real time, producing finer detail where it's needed and visible, will be "free" from a performance point of view, even if the stage 2 tessellator (the stage that the ATI chip has implemented in hardware and the only fixed-function one of the 3 stages) is doing its job for "free". The explanation lies in the fact that for it to be active, there are 2 extra programmable stages that will be using the programmable cores of the chip: 1) to select where detail is or is not needed (the Hull Shader) and 2) to do, for instance, displacement mapping to add additional detail where it's really needed and visible (the Domain Shader).

When using tessellation there will be two additional programmable pipeline stages doing calculations AND the fixed-function tessellator in between the two. So, even if the middle one is doing its work "for free" with no performance penalty on the system, the other two (Hull and Domain shaders) that form part of the tessellation system do have an impact on performance, because they are competing for the global unified compute resources of the programmable processing cores. That is not counting the additional bookkeeping or managing of FIFO queues for the newly generated vertices. It needs to be balanced, but nevertheless, the cost of using the tessellator will be a lot less than sending a much more detailed mesh and having to animate all those vertices, which are irrelevant in most cases. This gives selective detail only when and where needed, and allows developers to deploy the same meshes as assets to achieve various degrees of detail depending on the computing resources of each card, from low to high end. Even if using the tessellator to get finer detail means a drop to 1/4 of the FPS, getting the same detail without it would mean a drop to less than 1/16 of the FPS.

From what I could understand of this presentation:

http://www.hardwarecanucks.com/forum...roscope-5.html

It seems that Nvidia's solution to tessellation means they are using a mostly software approach to the 3 stages of tessellation. The "PolyMorph engine" does mention a "Tessellator", but from what I read it seems to be hardware to improve the vertex fetching abilities. They seem to have gone from one vertex fetch per clock on the G200b to 8 vertex fetches per clock, using that parallel vertex fetching mechanism. It surely will help with tessellation by not allowing vertex fetches to be an immediate bottleneck on the system. And they will use that 8x speed-up in vertex fetching to do the intermediate non-programmable tessellation in software, which will use some of the cores to do the work that is done in hardware in ATI's implementation.

To summarize:

ATI : 1 software + 1 hardware + 1 software tessellation stages

Nvidia : 1 software + 1 software + 1 software tessellation stages

Nvidia compensates for the lack of a dedicated hardware tessellator by widening the vertex fetch stage to 8x in parallel compared with the previous generation, allowing 8 new vertices to be processed per clock.

ATI seems to do it sequentially, 1 per clock (I might be wrong on this one), but does not have to allocate extra programmable cores to do the fixed-function part, freeing those cores to process pixels or other parts of the programmable pipeline stages.

Only when Fermi is released will comparisons with real usage scenarios be possible. Only time will tell what's the best approach to that problem, and it will all depend on price/performance/wattage, as has been said here.

But with either solution, it would not make sense to expect constant performance levels (FPS) independent of the tessellation level, since there will always be the programmable part of the tessellation there to steal computing resources from the other processing stages (at least if one wants to do "intelligent" selective tessellation and not brute force).

In that sense, ATI Radeon tessellation is NOT broken in any way. Part of it is free, the other part is not. I guess that synthetic benchmarks like Unigine that seem to indicate good performance on the Nvidia card might be based on the fact that they are cranking up the tessellation load uniformly, and not selectively, i.e., with the Domain and Hull Shaders off, like the ATI Radeons used to work in previous iterations. ATI opted for selective tessellation, so a huge increase in vertex processing might not be needed. Nvidia is recommending heavier tessellation because of the parallel vertex processing (8x) it implemented, but it might come at the cost of less performance when doing heavy pixel computations or other work, because of the decrease in remaining computational cores."

http://www.semiaccurate.com/forums/showpost.php?p=22193&postcount=33

post edited by merc.man87 - 2010/03/09 13:27:20

|

fredbsd

FTW Member

- Total Posts : 1622

- Reward points : 0

- Joined: 2007/10/16 13:23:08

- Location: Back woods of Maine

- Status: offline

- Ribbons : 10

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/09 08:21:40

Hey merc...good idea with this thread. It's been discussed down below a few times and there is some confusion. If you could, could you link the above post? I have a funny feeling where the information comes from :P

Here's a good overview of the architecture of Fermi: http://www.anandtech.com/...oc.aspx?i=3721&p=2

I am not an expert by any stretch of the imagination. However, it's pretty clear what Nvidia has done to improve tessellation performance, and accordingly, it is in the hardware itself. The ATI description is a bit misleading as it makes people think there is a separate 'chip' so to speak. No, it's just another collection of shaders with fixed functions. Essentially, the same thing as what Nvidia does...but in ATI's case it's outside their 'cores' so to speak. Nvidia put them inside the GPC.

First, from the Hardware Canucks article:

"By now you should all remember that the Graphics Processing Cluster is the heart of the GF100. It encompasses a quartet of Streaming Multiprocessors and a dedicated Raster Engine. Each of the SMs consists of 32 CUDA cores, four texture units, dedicated cache and a PolyMorph Engine for fixed function calculations. This means each GPC houses 128 cores and 16 texture units. According to NVIDIA, they have the option to eliminate these GPCs as needed to create other products but they are also able to do additional fine tuning as we outline below."

See, Fermi breaks things down into 'clusters' so to speak. A GPC has 4 SMs. In each SM, you have 32 'cores', and each SM has its own hardware 'tessellator' (read above, look at the PolyMorph Engine). In short, it is essentially a mini-CPU (taken from the Anandtech article).

Right. We all here? Okay, so in effect Nvidia has broken down the GPU into what amounts to a cluster of mini-CPUs so to speak, each having its own hardware-implemented tessellator (well, geometry unit...but tessellator will work). What does this provide? Simple. Parallel processing.

ATI uses a fixed-function geometry processor which is outside their SMs (or whatever you care to call them) and is serial in nature. Nvidia took a drastically different approach. They essentially subdivided that monolithic bit and placed it where the work is done...in the SMs. It's still hardware implemented, just very different from the 'traditional' method. What you're seeing here is basically what would amount to almost a farm of processors (including the tessellator). This, of course, will increase throughput and speed of really heavy computations. Think in terms of a small render farm for lack of a better analogy.

So, in reality, while we are being told that ATI is using a dedicated 'chip' for tessellation...it's sort of misleading. Nvidia is as well; it's just implemented very differently than ATI's (and, in theory, should offer better performance for geometry-based calculations).

Now...here's something that is interesting. http://www.anandtech.com/...oc.aspx?i=3721&p=2

See, I don't know much about hardware details...but I do know about parallel processing. In short, what Nvidia did is very, very difficult. Anandtech does a good job of explaining out of order processing. This is where I think some of the 'hardware vs. software' confusion comes from. In short, to make this all work...the PEs need to be in communication with each other. If one instruction is out of order, the whole thing goes splat. There are 16 of these things on the 480 card so you can just imagine how difficult that is to work with. If anything, you gotta give Nvidia credit...that stuff is not frigging easy. In fact, it's brutal. ATI's design is a lot simpler and far easier to implement than what Nvidia did.

Now, the question is what will happen when you turn up AA/AF? Well, as I read it, the SMs will handle all this still...leaving the geometry calculations outside to be processed by the PolyMorph Engine. Fine. Dandy. But will this crush the performance? I don't know, but I certainly would think that if they are being processed separately, performance shouldn't be drastically reduced (compared to how ATI does it of course). ATI essentially frees up more shaders for pixel rendering so I suppose anything can happen though ;>)

I think this pretty much sums up what's going on...from the HC article: "As we already mentioned, the HD 5000 series isn’t tailor-made for a DX11 environment while NVIDIA’s architecture was designed from the ground up to do just that." At least that's how I read it. Perhaps an EVGA tech wants to chime in and explain in better detail? Purty please?

"BSD is what you get when a bunch of Unix hackers sit down to try to port a Unix system to the PC. Linux is what you get when a bunch of PC hackers sit down and try to write a Unix system for the PC."

|

fanboy

FTW Member

- Total Posts : 1882

- Reward points : 0

- Joined: 2007/05/20 16:40:00

- Status: offline

- Ribbons : 9

2010/03/09 09:07:10

I don't know if I fully understand it, but say a game has basic code for tessellation. Given the way Nvidia made the GF100, does that mean they have to write software in the driver to handle that code for each game, like SLI profiles, to break it down and send it where it needs to go so everything runs smoothly? Otherwise it's not going to know what to do with it.

Xeon X5660 6core Evga E758 X58 3 way Sli A1 rev 1.2 OCZ Gold DRR3 1600Mhz 6Gb WD Black 500Gb 32Mb CX Sapphire R9-280 Corsair TX Series CMPSU-950TX 950W Eyefinity 5760 x 1080

|

mwparrish

CLASSIFIED Member

- Total Posts : 3278

- Reward points : 0

- Joined: 2009/01/08 15:27:28

- Status: offline

- Ribbons : 23

2010/03/09 09:42:33

Yeah, I can't wait to see how Fermi plays out in real life. The architecture is impressive and the level of parallel processing is something to behold.

I still think there are likely some issues to work out on the software side to handle all the new tech. If tessellation programming in the PolyMorph Engine can be updated with new drivers, there are likely some big performance gains yet to be engineered.

Just as the ATI 58xx has benefited greatly from refined drivers, including the 10.3 beta, which has even more gains over 10.2, I think the GF100 drivers will be a great source of continued improvement.

I'm loving all of this, both the ATI and, more so, the NVIDIA. Can't wait to re-upgrade to Fermi later this year. The 5870s are amazing. If Fermi is more impressive... Wow, look out.

Intel Core i7 3930K - 4.7 GHz | ASUS Rampage IV Extreme | 8x4GB G.Skill Ripjaws Z DDR3-2133 2x EVGA GTX 780 Ti SC | BenQ XL2420TX - 1920x1080 120Hz LCD | Logitech G9x | Corsair Vengeance 1500

5x Crucial 128GB SSD | LG BD-R Drive | Danger Den Torture Rack | Enermax Galaxy EVO 1250W

|

fredbsd

2010/03/09 09:56:43

FB, yes...like everything....software will be the key to it all. You gotta tell those dumb circuits what to do. ;>)

|

MrTwoVideoCards

New Member

- Total Posts : 25

- Reward points : 0

- Joined: 2007/11/11 12:18:21

- Status: offline

- Ribbons : 0

2010/03/09 10:12:36

fanboy

I don't know if I fully understand it, but say a game has basic code for tessellation. Given the way Nvidia made the GF100, does that mean they have to write software in the driver to handle that code for each game, like SLI profiles, to break it down and send it where it needs to go so everything runs smoothly? Otherwise it's not going to know what to do with it.

In a way. Most of the code will be client-side in the shader initiation. This means that most of the code will correspond to whatever hardware DX11 set as a standard to program for. In this case, if Nvidia or ATI wants to have SLI- or Crossfire-efficient tessellation, then they'd likely have to do that via the driver. However, as a game maker you can also choose how that code itself speaks to the driver. On the game maker's part, they can also control which bits are sent to which GPU. A lot of engines nowadays, like Unreal and Source, use multi-GPU "$commands" set on materials, particles, and sometimes geometry that can be rendered on the second GPU. It's not as easy as just touching these things, depending on which engine you're using, but for example:

- I program Tessellation code into my engine.

- I set which objects are affected by said technology (Videocard). Now this is the tricky part. I can leave it as is, basic, nothing more than just simple tessellation. Or, I can choose to have specific objects that are often real-time, and easier to split per-GPU, be rendered by the second GPU.

- If I took the second option, I'd go with having my NPCs or physics props handled by the second GPU, allowing the 1st GPU to worry about world rendering. However, then I'd be wasting a hefty amount of GPU power, and would be putting too much stress on a single card. Instead I could create parallel code to handle tessellation on both GPUs at once. This would mean sending the information and splitting it onto both GPUs, and then having it computed, and finally sent back.

- If both GPUs handled world rendering globally, you'd see huge performance increases with tessellation on. However I'd be doing a lot of heavy calculating that round, to make sure each GPU gets the right amount of divided information. Nvidia's GPUs do this per GPU, rather than on multi GPUs. This might be tricky to do unless Nvidia pulls off some magic on their end.

Because of how tricky it might be exactly, I'd go with having more objects overall on the 2nd GPU if I could. Static props, Dynamic Props, NPC's, Animated objects via shaders. This in theory could also allow a game developer to have extremely highly detailed NPC's if the engine saw a 2nd GPU, and put all the rendering of said objects on the 2nd GPU. I hope I've given you guys some insight into how this works.

post edited by MrTwoVideoCards - 2010/03/09 10:15:18

|

fredbsd

2010/03/09 10:12:49

mwparrish

Yeah, I can't wait to see how Fermi plays out in real life. The architecture is impressive and the level of parallel processing is something to behold.

Indeed. I may come across as a 'fanboy'. In reality, nothing could be further from the truth. Lots of people here say they are fans of tech...yet...they regurgitate FUD like it was fact (or at least get caught up in all the drama). For example, all the references to Mr. WhatshisfaceoverthereatSA and all the rumor mongering that took place.

Me? I truly am impressed by bold moves in design. Fermi, like it or lump it, was just that. Will it pan out? Who knows...maybe...maybe not. But you gotta give credit where credit is due here. I don't know of many people who would have the intestinal fortitude to go with this architecture. It's a massive gamble and if it doesn't go well...yeah...ouch. That's going to hurt them for years to come. But, considering Nvidia's past history...there really are only a few blips. So, meh...play the hunch I guess ;>).

Don't get me wrong: I am also very impressed with the ATI tech. It's solid, refined, and certainly something worth looking into if you're a Wintel gamer. But, to be fair, Nvidia did in fact put a lot of effort into this chip. It sort of reminds me of when DEC made the Alpha. Of course the Alpha didn't fare so well...but it was quite impressive technology to say the least. Blew everything else out of the water in technical computing.

So, yeah. What will happen is anyone's guess really. I am hoping for EVGA's sake that all this pays off to be honest. One thing I do know for certain: Fermi is definitely not 'too hot, too slow, and unmanufacturable' or we wouldn't even be having this discussion.

|

fredbsd

2010/03/09 10:15:40

Great post, MTVC. Thank you for that.

|

MrTwoVideoCards

2010/03/09 10:20:22

fredbsd

Great post, MTVC. Thank you for that.

I'm here to help!

|

fanboy

2010/03/09 10:36:32

So if we talk about ATI's way of doing it, then the basic code for tessellation through the DX11 API, as written by the game developer, is less work unless you program it for more detail, which ATI has in, say, a game like Dirt 2. But the Heaven benchmark is just running basic tessellation code on ATI hardware, whereas Nvidia tweaked their driver for more performance gains in tessellation. Is that right?

post edited by fanboy - 2010/03/09 10:39:38

|

rjohnson11

EVGA Forum Moderator

- Total Posts : 85038

- Reward points : 0

- Joined: 2004/10/05 12:44:35

- Location: Netherlands

- Status: offline

- Ribbons : 86

2010/03/09 10:44:21

Guys you know we can't talk about fermi too much but what I will tell you is that drivers can only improve performance to a certain degree and that goes for both ATI and NVIDIA. From there on it's hardware that determines performance and that's all I can say at this time.

|

fredbsd

2010/03/09 10:57:09

rjohnson11

Guys you know we can't talk about fermi too much but what I will tell you is that drivers can only improve performance to a certain degree and that goes for both ATI and NVIDIA. From there on it's hardware that determines performance and that's all I can say at this time.

Yep. Understood and thanks for taking part ;>). The original discussion was about how Fermi handles tessellation vs. how ATI handles it. Hence, we basically are sticking to what has already been published by Nvidia themselves (and all the previews the various hardware sites have put out). I would imagine even EVGA could chime in as it is already released information about Fermi. I like this thread. Calm, cool, collected. This is good.

|

mwparrish

2010/03/09 11:07:31

rjohnson11

Guys you know we can't talk about fermi too much but what I will tell you is that drivers can only improve performance to a certain degree and that goes for both ATI and NVIDIA. From there on it's hardware that determines performance and that's all I can say at this time.

Ah, I see what you did there...  Of course, that only makes logical sense. By all accounts, Fermi should crush the Cypress chip if the specs we've been fed about the core remain intact and the clock speeds are strong enough. I just know both camps are constantly improving their drivers for legitimate performance gains, too, and it appears that those refinements are paying off.

|

chizow

CLASSIFIED Member

- Total Posts : 3768

- Reward points : 0

- Joined: 2007/01/27 20:15:08

- Status: offline

- Ribbons : 30

2010/03/09 11:16:55

Interesting discussion. I agree, however, that the quoted post in the OP should be properly linked and referenced. In any case, I'd point out first and foremost that the author of that post makes it seem as if the performance penalties of the domain and hull shaders are unexpected. He seems to be surprised that the hull/domain shaders aren't fixed-function and are in fact programmable, and that they also draw from the common resource pool of the unified pixel/vertex shaders. This is by design: it should be obvious that there is an ongoing move from fixed-function to programmable hardware, and this continues with DX11, where unified shaders need to be able to handle hull/domain/pixel/vertex shaders.

Here's a great primer on DX11 and the new tessellation pipeline; you'll see it clearly shows programmable hull/domain shaders with a tessellator in between: http://www.anandtech.com/showdoc.aspx?i=3507&p=6

And on the next page, it discusses the pros/cons of fixed-function hardware, which can basically be summarized as speed at the expense of low utility vs. flexibility with the benefit of high utility: http://www.anandtech.com/showdoc.aspx?i=3507&p=7

AnandTech

The argument between fixed function and programmable hardware is always one of performance versus flexibility and usefulness. In the beginning, fixed function was necessary to get the desired performance. As time went on, it became clear that adding in more fixed function hardware to graphics chips just wasn't feasible. The transistors put into specialized hardware just go unused if developers don't program to take advantage of it. This made a shift toward architectures where expanding the pool of compute resources that could be shared and used for many different tasks became a much more attractive way to go. In the general case anyway. But that doesn't mean that fixed function hardware doesn't have its place.

And this balance is what brings us full circle to why Nvidia's implementation of GF100's tessellator/geometry engine is so brilliant. Instead of just tacking on a separate fixed-function tessellator, they rebuilt the entire engine to incorporate their tessellator into each SM cluster, putting it physically closer to the hull/domain shaders, giving it OoO execution capabilities, and making it programmable to the extent it can be used for both tessellation AND standard geometry using the same transistors. http://www.anandtech.com/video/showdoc.aspx?i=3721&p=3

AnandTech

To put things in perspective, between NV30 (GeForce FX 5800) and GT200 (GeForce GTX 280), the geometry performance of NVIDIA’s hardware only increases roughly 3x in performance. Meanwhile the shader performance of their cards increased by over 150x. Compared just to GT200, GF100 has 8x the geometry performance of GT200, and NVIDIA tells us this is something they have measured in their labs.

So basically, Nvidia took a traditional weakness that had long been neglected (geometry performance), increased it eight-fold, moved it closer to the dependent functional units, and made it programmable and out-of-order, all while maintaining DX11 compliance with regard to tessellation. To further clarify, they took two traditionally fixed-function units, geometry and tessellator, and combined them, so instead of having 2 groups of fixed-function hardware they now have those resources combined to be used for either, as needed. The practical benefit is obvious: there's nothing wasting away as fixed-function, and whatever the scene needs, it has all of that geometry+tessellator transistor budget at its disposal.

While impressive on paper, will it make a huge difference in every game? No. It will show benefits with regard to tessellation and perhaps some gains in games that were bottlenecked with regard to geometry. The latter will be harder to gauge in real-world performance, but if you start seeing some abnormally high increases in certain games in upcoming benchmarks, the redesign of the geometry engine might be the cause.

Intel Core i7 5930K @4.5GHz | Gigabyte X99 Gaming 5 | Win8.1 Pro x64 | Corsair H105

2x Nvidia GeForce Titan X SLI | Asus ROG Swift 144Hz 3D Vision G-Sync LCD | 2xDell U2410 | 32GB Acer XPG DDR4 2800

Samsung 850 Pro 256GB | Samsung 840EVO 4x1TB RAID 0 | Seagate 2TB SSHD

Yamaha VSX-677 A/V Receiver | Polk Audio RM6880 7.1 | LG Super Multi Blu-Ray

Auzen X-Fi HT HD | Logitech G710/G502/G27/G930 | Corsair Air 540 | EVGA SuperNOVA P2 1200W

|

Moltenlava

FTW Member

- Total Posts : 1814

- Reward points : 0

- Joined: 2008/04/14 04:57:22

- Status: offline

- Ribbons : 28

2010/03/09 11:19:22

Thanks, that seems to explain why Nvidia's Fermi cards do so well in Unigine. We shall have to see how well it performs in real-world scenarios.

This kind of reminds me of PhysX in Vantage and running PhysX on the same GPU that also does the rendering. During the CPU PhysX test the actual rendering load on the card is low, so the PhysX score can look good because PhysX is free to use most of the GPU's resources. But when playing actual games with PhysX, having the card do just the rendering and using a dedicated PhysX card is really the way to go.

|

chizow

2010/03/09 11:22:54

MrTwoVideoCards

Because of how tricky it might be exactly, I'd go with having more objects overall on the 2nd GPU if I could. Static props, Dynamic Props, NPC's, Animated objects via shaders. This in theory could also allow a game developer to have extremely highly detailed NPC's if the engine saw a 2nd GPU, and put all the rendering of said objects on the 2nd GPU. I hope I've given you guys some insight into how this works.

What you're describing is incorrect. That is how Hydra handles multi-GPU rendering; SLI uses simple AFR (alternate frame rendering), where each GPU renders the entire scene independently of the other. While Hydra sounds great on paper, load balancing or selective rendering of objects based on API calls has its own set of issues. You can read about them here: http://www.anandtech.com/video/showdoc.aspx?i=3713&p=4
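To make the distinction concrete, here is a toy Python sketch of the two scheduling ideas. The function names, the cost numbers and the greedy balancing rule are all invented for illustration; real SLI drivers and Hydra are far more involved than this.

```python
def afr_assignment(frame_index, num_gpus=2):
    """Alternate frame rendering: whole frames rotate across the GPUs."""
    return frame_index % num_gpus

def object_split_assignment(objects, num_gpus=2):
    """Per-object split: each GPU renders part of the same frame.
    Hypothetical greedy balancing by an estimated cost per object."""
    loads = [0.0] * num_gpus
    plan = {gpu: [] for gpu in range(num_gpus)}
    # Place the most expensive objects first, always on the least loaded GPU.
    for name, cost in sorted(objects.items(), key=lambda item: -item[1]):
        gpu = loads.index(min(loads))
        plan[gpu].append(name)
        loads[gpu] += cost
    return plan

# AFR: frame 0 on GPU 0, frame 1 on GPU 1, and so on.
print([afr_assignment(frame) for frame in range(6)])

# Object split: made-up cost estimates for one frame's worth of objects.
scene = {"world": 5.0, "npcs": 3.0, "props": 2.0, "particles": 1.0}
print(object_split_assignment(scene))
```

With AFR nothing about the scene has to be estimated or balanced, which is a large part of why it is the simpler scheme; the object split needs a cost estimate for everything it distributes.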

|

fredbsd

FTW Member

- Total Posts : 1622

- Reward points : 0

- Joined: 2007/10/16 13:23:08

- Location: Back woods of Maine

- Status: offline

- Ribbons : 10

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/09 11:26:40

This is my favorite thread about Fermi ever. ;>)

"BSD is what you get when a bunch of Unix hackers sit down to try to port a Unix system to the PC. Linux is what you get when a bunch of PC hackers sit down and try to write a Unix system for the PC."

|

merc.man87

FTW Member

- Total Posts : 1289

- Reward points : 0

- Joined: 2009/03/28 10:20:54

- Status: offline

- Ribbons : 6

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/09 13:29:30

So far, good thread. I have linked the source under the original post. Yes, I know, it's that website, but that was about the most interesting post I ran across while practicing my Google-fu.

|

ShockTheMonky

CLASSIFIED Member

- Total Posts : 2882

- Reward points : 0

- Joined: 2006/01/28 18:41:25

- Status: offline

- Ribbons : 45

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/09 14:22:00

Enough of the technicalities. I WANT TO SEE SOME ACTION!

" Psst. Zip up. Your ignorance is showing." " I don't suffer from insanity. I enjoy every minute of it!" " Can an Atheist get insurance for acts of god?

|

chwisch87

Superclocked Member

- Total Posts : 219

- Reward points : 0

- Joined: 2009/04/22 23:20:19

- Location: Atlanta

- Status: offline

- Ribbons : 0

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/09 15:33:18

I have never liked that anandtech article. It really sounds like too much nVidia marketing speak.

"As we already mentioned, the HD 5000 series isn’t tailor-made for a DX11 environment while NVIDIA’s architecture was designed from the ground up to do just that."

Really? Doesn't that sound like something that could come out of the mouth of an nVidia tech?

That being said, it's sort of like the PS3: "The Cell can do graphics too." In this case GF100 doesn't need a separate tessellation area when the entire chip can do it. It also plays to nVidia's strength, which is software. With ATi having always been about hardware, it's no surprise that they would have a hardware-based and not a software-based solution. One wonders how much drivers could improve tessellation on the 5000 series. Probably not much. I would imagine just enough to get the chip at least comparable to GF100 at the level of tessellation that most DX11 games will use, but nowhere near the potential of the GF100. I don't think most games are going to go as far as what we have seen from nVidia. Games are made for consoles and then ported to PCs, remember :).

post edited by chwisch87 - 2010/03/09 15:35:34

|

fredbsd

FTW Member

- Total Posts : 1622

- Reward points : 0

- Joined: 2007/10/16 13:23:08

- Location: Back woods of Maine

- Status: offline

- Ribbons : 10

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/09 15:39:15

chwisch87

I have never liked that anandtech article. It really sounds like too much nVidia marketing speak.

"As we already mentioned, the HD 5000 series isn’t tailor-made for a DX11 environment while NVIDIA’s architecture was designed from the ground up to do just that."

Really? Doesn't that sound like something that could come out of the mouth of an nVidia tech?

That being said, it's sort of like the PS3: "The Cell can do graphics too." In this case GF100 doesn't need a separate tessellation area when the entire chip can do it. It also plays to nVidia's strength, which is software. With ATi having always been about hardware, it's no surprise that they would have a hardware-based and not a software-based solution. One wonders how much drivers could improve tessellation on the 5000 series. Probably not much. I would imagine just enough to get the chip at least comparable to GF100 at the level of tessellation that most DX11 games will use, but nowhere near the potential of the GF100. I don't think most games are going to go as far as what we have seen from nVidia. Games are made for consoles and then ported to PCs, remember :).

That quote is actually from the Hardware Canucks article. If you have the time, there's a whole slew of previews up top there. Pretty much all of them explain the tech in great detail...and so on. Chiz pretty much explained it in technical terms. It's still hardware...just different than what ATI did. Very different.

|

mwparrish

CLASSIFIED Member

- Total Posts : 3278

- Reward points : 0

- Joined: 2009/01/08 15:27:28

- Status: offline

- Ribbons : 23

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/09 15:45:14

I'll also add that no developer is going to heavily tessellate a game from the outset. One developer may -- and it'll end up like Crysis did. It chokes on any and every machine it runs on until 3 years after release. Exaggeration -- yes, but you get the point.

Tessellation will be ideal for limited usage -- hair, intricate and player-focused complex objects, fluid dynamics, etc. The point isn't to tessellate everything like those plastic surgery addicts you hear about. It's for the right things, in the right places. That's how reasonable, budget-savvy developers will utilize it, especially as long as games are console based and ported. Hopefully, that trend will find a way to reverse itself as better hardware makes it into consoles.
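As a rough idea of what "the right things, in the right places" can look like in practice, here is a small Python sketch that picks a tessellation factor from the distance to the camera. The function name and the `near`/`far` distances are assumptions made up for the example; the only real number is the cap of 64, which is the maximum tessellation factor in Direct3D 11.

```python
def tess_factor(distance, near=5.0, far=100.0, max_factor=64.0):
    """Pick a tessellation factor from camera distance (illustrative only).

    At or inside `near` the patch gets max_factor; at or beyond `far` it
    drops to 1 (no extra subdivision); in between it falls off linearly.
    `near` and `far` are hypothetical tuning values in scene units.
    """
    t = (distance - near) / (far - near)
    t = min(max(t, 0.0), 1.0)  # clamp to the [0, 1] range
    return max_factor + t * (1.0 - max_factor)


if __name__ == "__main__":
    for d in (1.0, 5.0, 52.5, 100.0, 500.0):
        print(d, tess_factor(d))
```

A real engine would likely also weigh screen-space size and silhouette edges, but even this simple falloff keeps distant objects from eating the geometry budget.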

Intel Core i7 3930K - 4.7 GHz | ASUS Rampage IV Extreme | 8x4GB G.Skill Ripjaws Z DDR3-2133 2x EVGA GTX 780 Ti SC | BenQ XL2420TX - 1920x1080 120Hz LCD | Logitech G9x | Corsair Vengeance 1500

5x Crucial 128GB SSD | LG BD-R Drive | Danger Den Torture Rack | Enermax Galaxy EVO 1250W

|

fredbsd

FTW Member

- Total Posts : 1622

- Reward points : 0

- Joined: 2007/10/16 13:23:08

- Location: Back woods of Maine

- Status: offline

- Ribbons : 10

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/09 15:52:18

mwparrish

I'll also add that no developer is going to heavily tessellate a game from the outset. One developer may -- and it'll end up like Crysis did. It chokes on any and every machine it runs on until 3 years after release. Exaggeration -- yes, but you get the point.

Tessellation will be ideal for limited usage -- hair, intricate and player-focused complex objects, fluid dynamics, etc. The point isn't to tessellate everything like those plastic surgery addicts you hear about. It's for the right things, in the right places. That's how reasonable, budget-savvy developers will utilize it, especially as long as games are console based and ported. Hopefully, that trend will find a way to reverse itself as better hardware makes it into consoles.

Precisely. And that's why folks shouldn't fuss too much over what they have purchased (or will purchase). This actually goes right back to where we started last Fall when the early adopters of the ATI platform were throwing DX11 down the throats of those who said the exact same thing. Moreover, that's when Nvidia said DX11 won't be that important from the get go...and they caught holy hell for it. I have 2 x 260's in my rig. While the performance isn't going to match the latest and greatest, they still do fine for practically every game out there. Yep, they are only DX10 rated (heck I don't even use that) but there was no immediate need to upgrade. So, in the end, while all of this is neat stuff...it won't matter for quite some time. So, if you purchased the ATI solution..you're fine. If you're waiting for Fermi, you'll be fine. If you have the 2xx series in your rig, you'll be fine. Isn't life grand?

|

donta1979

Primarch

- Total Posts : 9050

- Reward points : 0

- Joined: 2007/02/11 19:27:15

- Location: In the land of Florida Man!

- Status: offline

- Ribbons : 73

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/09 16:16:48

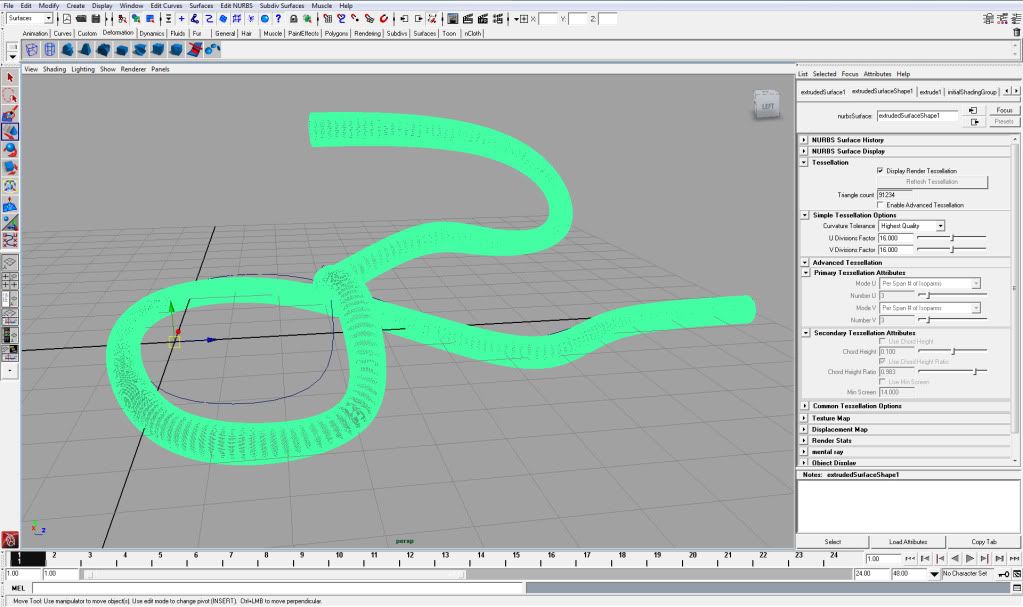

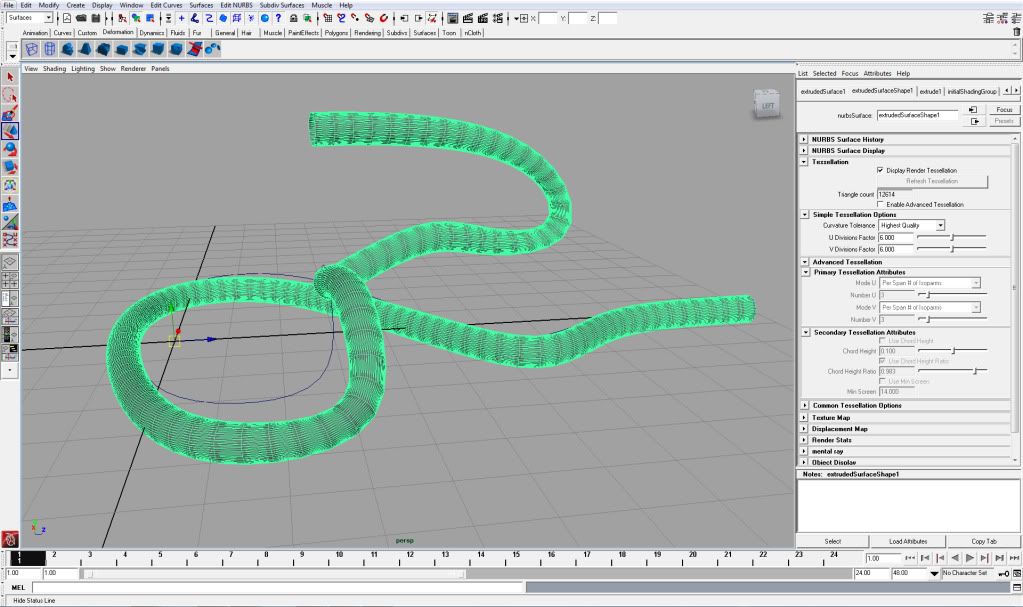

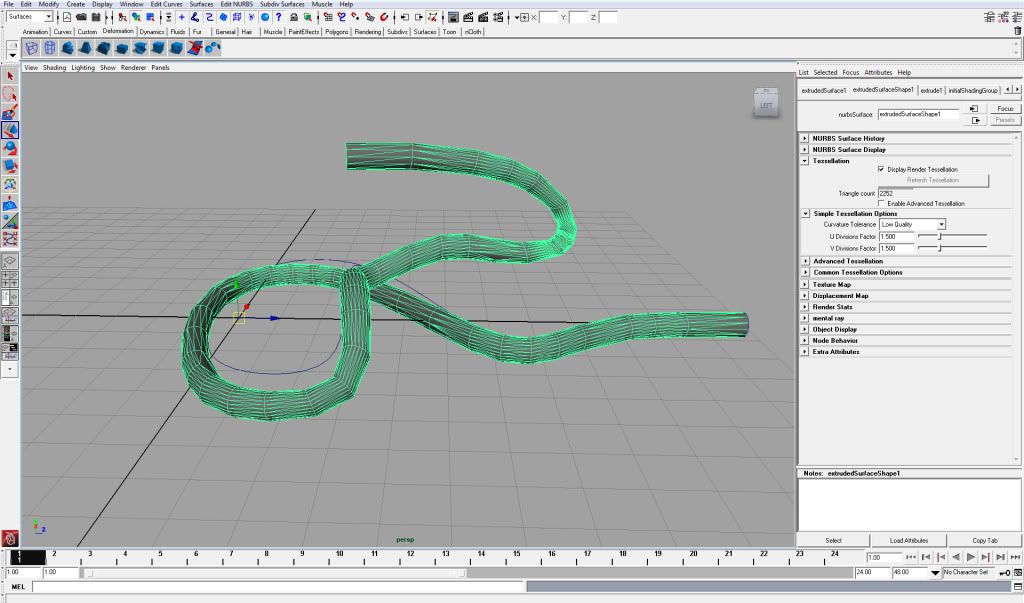

Tessellation its more hardware driven not software like rj said software/drivers will only do so much. Here is an actual example of what Tessellation is so you can view it with your own eyes. It GREATLY depends on your hardware, on top of it you take the nvidia demo with the hair, each stand of hair has a rig basically an invisable bone system. But before I go off on a long tangent it is hardware dependent, what nvidia or ati has done is able to make Tessellation with thier gpus go alot smoother within the confines of thier own programming for thier hardware, I guantee if i pump up the Tessellation like I have in these examples in that tech demo the gpu would start to chug. Here in Maya at not the highest level of Tessellation I could go I could do 600x600 or higher but Yeah I dont want to wait for my computer to take that long to tessalte that much of the model.  Here is the the Tessellation at a lower lvl than the first screen shot I took, notice how it gets a little blocky  Here is tessellation set to its lowest lvl  Now what nvidia and ati has done is this able to convert parts of the model"aka hair grass etc" at its bending points able to tessellate in real time. But once again Like rj said drivers/software can only do so much its more about the cpu or in ati/nvidias case have the gpus do it all. Even in the 3d app I used it also depends on the gpu, due to how much it can display as the model tessellates. Say if I gave that entire nurbs model model a tessellation of 600x600 in the U and V div factors, it would take it a couple minutes for it to make those tessellation calculations. as it divides the nurbs model up in more places make it more bendable, smoother in appearance.
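For a sense of scale on those U and V division factors, here is a quick Python sketch that counts what a single surface patch turns into when it is cut into a regular grid. It assumes a plain rectangular grid with each quad split into two triangles, which is a simplification and not necessarily how Maya tessellates a NURBS surface internally; `grid_counts` is a made-up helper name.

```python
def grid_counts(u_div, v_div):
    """Vertex, quad and triangle counts for one patch cut into a
    u_div x v_div regular grid (each quad split into two triangles)."""
    vertices = (u_div + 1) * (v_div + 1)
    quads = u_div * v_div
    triangles = 2 * quads
    return vertices, quads, triangles


if __name__ == "__main__":
    for div in (1, 10, 100, 600):
        print(div, grid_counts(div, div))
```

At 600x600 that works out to 361,201 vertices and 720,000 triangles for one patch, which gives some idea of why the viewport starts to crawl.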

Heatware

Retired from AAA Game Industry

Jeep Wranglers, English Bulldog Rescue

USAF, USANG, US ARMY Combat Veteran

My Build

14900k, z790 Apex Encore, EK Nucleus Direct Die, T-Force EXTREEM 8000mhz cl38 2x24 Stable"24hr Karhu" XMP, Rog Strix OC RTX 4090, Rog Hyperion GR701 Case, Rog Thor II 1200w, Rog Centra True Wireless Speednova, 35" Rog Swift PG35VQ + Acer EI342CKR Pbmiippx 34", EK Link FPT 140mm D-RGB Fans. Rog Claymore II, Rog Harpe Ace Aimlabs Edition, Cyberpunk 2077 Xbox One Controller, WD Black SN850x/Samsung 980+990 PRO/Samsung 980. Honeywell PTM7950 pad on CPU+GPU

|

MrTwoVideoCards

New Member

- Total Posts : 25

- Reward points : 0

- Joined: 2007/11/11 12:18:21

- Status: offline

- Ribbons : 0

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/10 00:49:45

chizow

MrTwoVideoCards

Because of how tricky it might be exactly, I'd go with having more objects overall on the 2nd GPU if I could. Static props, Dynamic Props, NPC's, Animated objects via shaders. This in theory could also allow a game developer to have extremely highly detailed NPC's if the engine saw a 2nd GPU, and put all the rendering of said objects on the 2nd GPU. I hope I've given you guys some insight into how this works.

What you're describing is incorrect....this is how Hydra handles multi-GPU rendering, SLI uses simple AFR (alternate frame rendering) where each GPU renders the entire scene independently of the other. While Hydra sounds great on paper, load balancing or selective rendering of objects based on API calls has its own set of issues, you can read about them here: http://www.anandtech.com/video/showdoc.aspx?i=3713&p=4

No really, you can set specific renderables on a 2nd GPU. But what you also stated is correct. The difficulty itself would be troublesome indeed.

|

smcclelland

Superclocked Member

- Total Posts : 138

- Reward points : 0

- Joined: 2008/11/21 12:38:57

- Status: offline

- Ribbons : 0

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/11 08:59:37

Heh, it's pretty cool seeing software I design posted here :)

To touch base on more of the tessellation pipeline and some things that donta pointed out: DX11 uses our (Maya's) Catmull-Clark subdivision surface model as a means of calculating the tessellation. The DX11 SDK currently supports this information through the FBX file format, which can be converted using a runtime converter and then displayed in the runtime environment on the GPU. The advantages here aren't just tessellation for deformations but also things like creasing, holes and other subdivision surface enhancements that go beyond what a typical polygonal object can do. Edge creasing is probably one of the bigger things that people can do here, and it's by far the best method to use when doing things like hard surface modeling such as cards or rigid objects.
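To give a feel for how quickly Catmull-Clark subdivision grows a mesh, here is a small Python sketch that only tracks the element counts per level. It assumes a closed mesh made entirely of quads (a cube, for instance) and does not compute any vertex positions or creases; `catmull_clark_counts` is a made-up name for the example.

```python
def catmull_clark_counts(v, e, f, levels=1):
    """Vertex/edge/face counts after `levels` Catmull-Clark steps on a
    closed all-quad mesh.

    Each step adds one new vertex per face and one per edge, splits every
    edge in two, adds four interior edges per quad, and turns every quad
    into four quads.
    """
    for _ in range(levels):
        v, e, f = v + e + f, 2 * e + 4 * f, 4 * f
    return v, e, f


if __name__ == "__main__":
    # A cube has 8 vertices, 12 edges and 6 quad faces.
    for level in range(4):
        print(level, catmull_clark_counts(8, 12, 6, levels=level))
```

The face count quadruples at every level, so a handful of levels is usually plenty before the mesh gets heavy.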

For people looking to experiment with this stuff, you can grab Maya or 3dsmax, use either the Smooth Mesh Preview in Maya or Turbosmooth in 3dsmax to get the results, and then export using FBX 2010. If you grab the March 2010 DX11 SDK, I believe there is some code in there you can compile for the DX11 runtime previewer, so you can actually load up your mesh in the runtime and tessellate it on the fly to see what sort of performance you get.

I'm at GDC this week, but I'll be following this thread closely, and hopefully I can provide more insight on the tessellation pipeline if people are interested. I've been working with it for some time now, and it's great seeing the hardware really grow into this tech. I'll check and see if I can post more info too, to show the entire process from DCC to runtime on the card. I've actually been meaning to put together a blog post on our corporate site about all of this and the workflows as well.

|

fredbsd

FTW Member

- Total Posts : 1622

- Reward points : 0

- Joined: 2007/10/16 13:23:08

- Location: Back woods of Maine

- Status: offline

- Ribbons : 10

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/11 09:32:13

This thread just gets better and better. I would recommend the OP for a BR for bringing up a factual, technical discussion that has thus far been enlightening and informative w/o getting into bashing each other over the head.

|

chris-nyc

FTW Member

- Total Posts : 1063

- Reward points : 0

- Joined: 2009/04/17 16:50:49

- Status: offline

- Ribbons : 4

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/11 09:34:20

While most of it is way over my head, I gotta say this has been one of the best threads in a long time. Lots of great information here! As fred suggested, the OP should get a blue ribbon for starting it.

Asus RIVBE • i7 4930K @ 4.7ghz • 8gb Corsair Dominator Platinum 2133 C8

2xSLI EVGA GTX 770 SC • Creative X-Fi Titanium • 2x 840 SSD + 1TB Seagate Hybrid

EVGA Supernova 1300W• Asus VG278H & nVidia 3d Vision

Phanteks Enthoo Primo w/ custom watercooling:

XSPC Raystorm (cpu & gpu), XSPC Photon 170, Swiftech D5 vario

Alphacool Monsta 360mm +6x NB e-loop, XT45 360mm +6x Corsair SP120

|

merc.man87

FTW Member

- Total Posts : 1289

- Reward points : 0

- Joined: 2009/03/28 10:20:54

- Status: offline

- Ribbons : 6

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/11 13:19:22

Sweet goodness! I didn't even realize I got a BR. Again, I can't wait until actual forum members can get their hands on some ferbie goodness so we can do an apples-to-apples comparison across a broad range.

|

fredbsd

FTW Member

- Total Posts : 1622

- Reward points : 0

- Joined: 2007/10/16 13:23:08

- Location: Back woods of Maine

- Status: offline

- Ribbons : 10

Re:Nvidia's form of Tessellation compared to AMD/ATI's form of Tessellation

2010/03/11 18:01:20

merc.man87

Sweet goodness! I didn't even realize I got a BR. Again, I can't wait until actual forum members can get their hands on some ferbie goodness so we can do an apples-to-apples comparison across a broad range.

I'll be honest...I recommended you for a BR. Why? Because you bring a serious discussion up...and it remains logical..without the normal FUD associated w/ fermi. IMHO, we need more threads like this.

|