rjohnson11

EVGA Forum Moderator

- Total Posts : 102300

- Reward points : 0

- Joined: 2004/10/05 12:44:35

- Location: Netherlands

- Status: offline

- Ribbons : 84

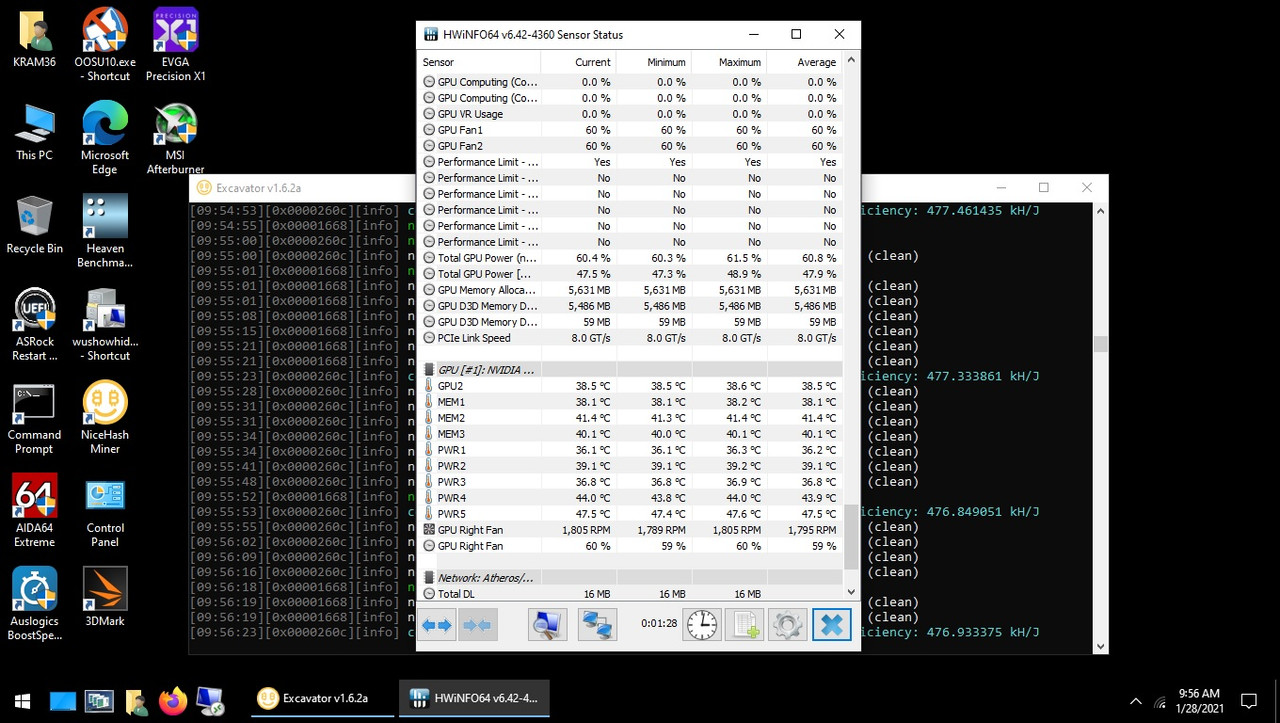

GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum | Tom's Hardware

Popular hardware monitoring tool HWInfo64 has just received a new update, version 6.42 that adds support for "GDDR6X Memory Junction Temperature." This feature will allow you to check your VRAM temperature if you have a GDDR6X equipped Ampere card like the RTX 3080 or RTX 3090. This could be particularly useful if you own a Founders Edition model, as several months ago we reported on a discovery from Igor's lab in which GDDR6X modules on the RTX 3080 Founders Edition model ran dangerously hot.

After some testing with the new HWInfo64 version 6.42, we've validated these same high VRAM temperatures that Igor discovered. Looping Metro Exodus at 4K Ultra settings for several minutes on an RTX 3080 Founders Edition allowed the GDDR6X modules to hit a peak temperature of 102C. The TJmax for GDDR6X is 95C. However, this changes when enabling DLSS and Ray Tracing. The RTX 3080 with Metro Exodus at the same settings hit a peak temperature of 94C. We also tested an RTX 3090 Founders Edition in Cyberpunk 2077 with DLSS and Ray Tracing enabled. GDDR6X temperatures for that card peaked at 100C.

But when it comes to Ethereum mining, temperatures go to a whole other level: When mining on both the RTX 3080 and RTX 3090, we found that the GDDR6X modules would peak at a much higher 110C, and the GPU would downclock itself severely to compensate for the ridiculously high VRAM temperature. This occurred on multiple different boards, from various vendors. And that's before applying any overclocking settings, which some miners like to do in order to chase every last bit of hashing performance.

There are ways to reduce the GDDR6X temperatures, which appear to cause the 3080 and 3090 to throttle only once the temperature reaches 110C. We used MSI Afterburner to drop the GPU and GDDR6X clocks as far as possible (-450 MHz on the core and -502 MHz on the RAM), which didn't really do much. After a few minutes, the cards would still drop down to much lower GPU clocks (around 900-950 MHz), though mining performance was still decent (95MH/s). Decreasing the power limit to just 60 percent finally allowed the VRAM temperatures to drop down to 90C, but then mining performance also dropped to around 60-65MH/s.

These high junction temperatures do give us some cause for concern, but it's not clear how they'll affect the cards long term. For gaming, with the GDDR6X running at 100C or lower, that appears to be within tolerance from Nvidia's perspective. 24/7 mining on the other hand with memory running at 100-110C seems like the sort of thing that could cause premature card failure.

We don't have precise answers as to why Nvidia is allowing the GDDR6X chips to hit these temperatures. We also don't know exactly what "GDDR6X Memory Junction Temperature" means, as far as the other GDDR6X chips are concerned. Presumably it's the hottest part of any of the GDDR6X chips, measured internally. And again, Nvidia's cards don't appear to start throttling GPU clocks until the temperature hits 110C.

If you find your GDDR6X temperatures run super hot with HWInfo64, it's a good idea to keep your graphics card at stock settings. And if you're mining, either decrease your power limit or hope that these 100-110C memory temperatures don't pose a problem. Don't say we didn't warn you.

Some very good advice here especially if you are mining.

|

Grey_Beard

CLASSIFIED Member

- Total Posts : 2233

- Reward points : 0

- Joined: 2013/12/23 11:50:37

- Location: The Land of Milk and Honey

- Status: offline

- Ribbons : 10

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 04:25:10

(permalink)

Looks like buying these cards used is not a good idea.

|

kram36

The Destroyer

- Total Posts : 21477

- Reward points : 0

- Joined: 2009/10/27 19:00:58

- Location: United States

- Status: offline

- Ribbons : 72

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 05:51:49

(permalink)

I'm calling BS on this. The only real way to know is buy a card like an EVGA iCX card that has sensors for the memory and the proper program to read the sensors. I know for a fact GDDR6 at 256bit bus only runs at 43°C MAX on a card with a good cooler mining Ethereum. There is no way GDDR6X is over double the temperature.

post edited by kram36 - 2021/01/28 06:31:35

|

aka_STEVE_b

EGC Admin

- Total Posts : 17692

- Reward points : 0

- Joined: 2006/02/26 06:45:46

- Location: OH

- Status: offline

- Ribbons : 69

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 06:17:26

(permalink)

Not too sure that's completely accurate, but I'll bet it's still something insane.

AMD RYZEN 9 5900X 12-core cpu~ ASUS ROG Crosshair VIII Dark Hero ~ EVGA RTX 3080 Ti FTW3~ G.SKILL Trident Z NEO 32GB DDR4-3600 ~ Phanteks Eclipse P400s red case ~ EVGA SuperNOVA 1000 G+ PSU ~ Intel 660p M.2 drive~ Crucial MX300 275 GB SSD ~WD 2TB SSD ~CORSAIR H115i RGB Pro XT 280mm cooler ~ CORSAIR Dark Core RGB Pro mouse ~ CORSAIR K68 Mech keyboard ~ HGST 4TB Hd.~ AOC AGON 32" monitor 1440p @ 144Hz ~ Win 10 x64

|

Bruno747

CLASSIFIED Member

- Total Posts : 3909

- Reward points : 0

- Joined: 2010/01/13 11:00:12

- Location: Looking on google to see what Nvidia is going to o

- Status: offline

- Ribbons : 5

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 07:32:27

(permalink)

kram36

I'm calling BS on this. The only real way to know is buy a card like an EVGA iCX card that has sensors for the memory and the proper program to read the sensors. I know for a fact GDDR6 at 256bit bus only runs at 43°C MAX on a card with a good cooler mining Ethereum. There is no way GDDR6X is over double the temperature.

Are you sure about that? Pretty certain EVGA doesn't manufacture the individual RAM chips or the temp sensors in the actual GPU. So if EVGA is adding a sensor to the side or under a RAM chip, it is gonna be a vastly different reading than one built into the die. And the die is going to be way, way more accurate. Who knows, iCX may be using the built-in die sensor on the memory. In that case, the place that just added support may not be decoding the signal properly.

X399 Designare EX, Threadripper 1950x, Overkill Water 560mm dual pass radiator. Heatkiller IV Block Dual 960 EVO 500gb Raid 0 bootable, Quad Channel 64gb DDR4 @ 2933/15-16-16-31, RTX 3090 FTW3 Ultra, Corsair RM850x, Tower 900

|

kram36

The Destroyer

- Total Posts : 21477

- Reward points : 0

- Joined: 2009/10/27 19:00:58

- Location: United States

- Status: offline

- Ribbons : 72

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 07:45:03

(permalink)

|

kram36

The Destroyer

- Total Posts : 21477

- Reward points : 0

- Joined: 2009/10/27 19:00:58

- Location: United States

- Status: offline

- Ribbons : 72

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 07:46:02

(permalink)

Bruno747

kram36

I'm calling BS on this. The only real way to know is buy a card like an EVGA iCX card that has sensors for the memory and the proper program to read the sensors. I know for a fact GDDR6 at 256bit bus only runs at 43°C MAX on a card with a good cooler mining Ethereum. There is no way GDDR6X is over double the temperature.

Are you sure about that? Pretty certain EVGA doesn't manufacture the individual RAM chips or the temp sensors in the actual GPU. So if EVGA is adding a sensor to the side or under a RAM chip, it is gonna be a vastly different reading than one built into the die. And the die is going to be way, way more accurate.

Who knows, iCX may be using the built-in die sensor on the memory. In that case, the place that just added support may not be decoding the signal properly.

My readings with HWInfo64 version 6.42 match exactly with EVGA's Precision X1.

post edited by kram36 - 2021/01/28 08:06:13

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21174

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 10:13:14

(permalink)

Mining hammers memory. Power target only has a small effect on memory performance. And the people mining for profit are overclocking their memory as far as they can, and reducing core power consumption to whatever is most profitable. The memory is still being hammered; that's where the mining money lies.

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

kram36

The Destroyer

- Total Posts : 21477

- Reward points : 0

- Joined: 2009/10/27 19:00:58

- Location: United States

- Status: offline

- Ribbons : 72

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 10:49:52

(permalink)

ty_ger07

Mining hammers memory. Power target only has a small effect on memory performance. And the people mining for profit are overclocking their memory as far as they can, and reducing core power consumption to whatever is most profitable. The memory is still being hammered; that's where the mining money lies.

What does that have to do with the temp of the memory, especially the claimed temp from the OP? If you keep the GPU temp down, it helps keep the memory temp down, providing the cooler is made properly, like the RTX 3070 and 3080 FTW3 coolers. What kills components? Temp kills components.

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21174

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 12:11:14

(permalink)

I don't think that core temperature would have a large effect on internal memory temperature, but feel free to prove me wrong.

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

kram36

The Destroyer

- Total Posts : 21477

- Reward points : 0

- Joined: 2009/10/27 19:00:58

- Location: United States

- Status: offline

- Ribbons : 72

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 13:07:04

(permalink)

ty_ger07

I don't think that core temperature would have a large effect on internal memory temperature, but feel free to prove me wrong.

Yes it would, as both the GPU and memory share the same cooling block. I did have an EVGA GTX 1070 that didn't have any cooling for the memory. It was the blower style, and wow, I was surprised when I took it apart to install a water block on it and found nothing touching the memory chips. I guess EVGA figured the air being blown over the top was good enough.

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21174

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 14:39:54

(permalink)

Feel free to prove me wrong.

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

kram36

The Destroyer

- Total Posts : 21477

- Reward points : 0

- Joined: 2009/10/27 19:00:58

- Location: United States

- Status: offline

- Ribbons : 72

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 15:00:53

(permalink)

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21174

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 16:41:39

(permalink)

kram36

ty_ger07

Feel free to prove me wrong.

Consider yourself proven wrong. In less than 2 min running the card at stock clocks, the memory temp went up with a lower memory clock speed and the miner lost MH/s speed.

Huh? What does that have to do with anything you said earlier? You can't change your standpoint to something nonsensical and then call that proof. Verify that the memory thermal throttles by default, then overclock the memory to the max, reduce core power target to whatever is most profitable, and then show that the memory no longer thermal throttles. That is what you said you were going to prove.

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

kram36

The Destroyer

- Total Posts : 21477

- Reward points : 0

- Joined: 2009/10/27 19:00:58

- Location: United States

- Status: offline

- Ribbons : 72

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 16:47:32

(permalink)

ty_ger07

kram36

ty_ger07

Feel free to prove me wrong.

Consider yourself proven wrong. In less than 2 min running the card at stock clocks, the memory temp went up with a lower memory clock speed and the miner lost MH/s speed.

Huh? What does that have to do with anything you said earlier? You can't change your standpoint to something nonsensical and then call that proof.

Don't change the subject. Higher GPU temp leads to a higher memory temp. I just added that the memory was at a lower clock speed, getting the same usage as before, and the temp went up. It sure didn't go up because the memory clock speed went down. Memory temp went up because the GPU temp went up. I proved it, when you said prove me wrong.

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21174

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/28 19:21:01

(permalink)

All you have proven is that the memory temperature varies some related to the core heat generation. We already knew that. It's obvious. But that doesn't prove you right and the article wrong. Prove me wrong. Prove the article wrong. You need to prove that YOUR card's memory throttles by default (to prove that your card is a valid test platform to compare with the results reported on), you need to prove what is the most profitable core power limit for your card (to prove your statement about miners' typical power target), and you need to prove that at that most profitable power limit, your card's memory no longer throttles (to prove what you said about the article being wrong due to the way miners actually use their cards [according to you]).

post edited by ty_ger07 - 2021/01/28 21:51:02

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

kram36

The Destroyer

- Total Posts : 21477

- Reward points : 0

- Joined: 2009/10/27 19:00:58

- Location: United States

- Status: offline

- Ribbons : 72

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 03:37:12

(permalink)

ty_ger07

All you have proven is that the memory temperature varies some related to the core heat generation. We already knew that. It's obvious. But that doesn't prove you right and the article wrong.

Prove me wrong. Prove the article wrong.

You need to prove that YOUR card's memory throttles by default (to prove that your card is a valid test platform to compare with the results reported on), you need to prove what is the most profitable core power limit for your card (to prove your statement about miners' typical power target), and you need to prove that at that most profitable power limit, your card's memory no longer throttles (to prove what you said about the article being wrong due to the way miners actually use their cards [according to you]).

Oh just stop it.

ty_ger07

I don't think that core temperature would have a large effect on internal memory temperature, but feel free to prove me wrong.

I proved you wrong...period.

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21174

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 04:23:26

(permalink)

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

kram36

The Destroyer

- Total Posts : 21477

- Reward points : 0

- Joined: 2009/10/27 19:00:58

- Location: United States

- Status: offline

- Ribbons : 72

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 04:27:43

(permalink)

ty_ger07

Fail

I proved you wrong...period.

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21174

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 04:33:12

(permalink)

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

kram36

The Destroyer

- Total Posts : 21477

- Reward points : 0

- Joined: 2009/10/27 19:00:58

- Location: United States

- Status: offline

- Ribbons : 72

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 04:36:01

(permalink)

ty_ger07

Not even close.

Easily proved you wrong, only took 2 min to do so.

post edited by kram36 - 2021/01/29 04:38:10

|

Hoggle

EVGA Forum Moderator

- Total Posts : 10102

- Reward points : 0

- Joined: 2003/10/13 22:10:45

- Location: Eugene, OR

- Status: offline

- Ribbons : 4

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 04:41:08

(permalink)

This is why ICX is really nice on the EVGA cards, and it's one of the features I really consider when picking a card so that I don't have to wonder about what the card's temps are doing.

Also please end the fighting in this thread. It's not helping the discussion move forward to see people just going back and forth at each other.

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21174

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 05:25:48

(permalink)

kram36

ty_ger07

Not even close.

Easily proved you wrong, only took 2 min to do so.

You didn't though. You are using a straw man argument. Any outside observer knowledgeable on the topic can easily realize that you have proven nothing. You said that the article was wrong because the author doesn't know how a miner actually uses their card. And then, as "proof" that the article is wrong, you set up your card in the completely opposite way any miner would set up their card. That is not proof. You have some strange ways. Your "proof" was to purposely limit the memory heat generation as much as possible (by underclocking it), and then prove that when the memory is not generating its own heat, its internal temperature is largely based on external factors. It's a "duh!" proof. It is completely irrelevant to the topic of discussion. What you initially came to prove was that after mining for hours, the internal temperature of overclocked memory while mining Ethereum (the way miners actually use it, according to you), is more dependent on outside factors. You haven't proven that. In my opinion, the internal temperature is more affected by the application and the memory performance, than outside factors.

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

kram36

The Destroyer

- Total Posts : 21477

- Reward points : 0

- Joined: 2009/10/27 19:00:58

- Location: United States

- Status: offline

- Ribbons : 72

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 05:52:35

(permalink)

ty_ger07

kram36

ty_ger07

Not even close.

Easily proved you wrong, only took 2 min to do so.

You didn't though. You are using a straw man argument. Any outside observer knowledgeable on the topic can easily realize that you have proven nothing.

You said that the article was wrong because the author doesn't know how a miner actually uses their card.

And then, as "proof" that the article is wrong, you set up your card in the completely opposite way any miner would set up their card. That is not proof.

You have some strange ways.

Your "proof" was to purposely limit the memory heat generation as much as possible (by underclocking it), and then prove that when the memory is not generating its own heat, its internal temperature is largely based on external factors. It's a "duh!" proof. It is completely irrelevant to the topic of discussion.

What you initially came to prove was that after mining for hours, the internal temperature of overclocked memory while mining ethereum (the way miners actually use it, according to you), is more dependent on outside factors. You haven't proven that. In my opinion, the internal temperature is more affected by the application and the memory performance, than outside factors.

Dude you can't be that much of a dunce. I proved you wrong in less than a 2 min run. Also

Hoggle

Also please end the fighting in this thread. It's not helping the discussion move forward to see people just going back and forth at each other.

I proved you wrong, so stop with your nonsense.

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21174

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 05:55:16

(permalink)

Proper proof would take hours. You can't prove internal memory operating temperatures while mining within minutes. Certainly you can't prove that they don't know how miners use their cards in actuality, by mining with the card in the completely wrong way.

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

veganfanatic

CLASSIFIED Member

- Total Posts : 2119

- Reward points : 0

- Joined: 2015/06/20 18:08:41

- Status: offline

- Ribbons : 1

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 08:24:11

(permalink)

I have several ex-mining cards and after some refurbishing with MX-4 they seem to work fine.

I have noted that the GTX 1060 I have with one fan is not such a good idea. The dual fan cooler is worth it in the long run.

Corsair Obsidian 750D Airflow Edition + Corsair AX1600i PSU. My desktop uses the ThinkVision 31.5 inch P32p-20 Monitor. My sound system is the Edifier B1700BT.

|

badboy64

SSC Member

- Total Posts : 921

- Reward points : 0

- Joined: 2006/06/05 15:11:40

- Location: Fall River USA

- Status: offline

- Ribbons : 0

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 14:11:13

(permalink)

So the memory temp reaches 100C only when mining or does it do this for benchmarking and gaming also?

post edited by badboy64 - 2021/01/29 14:25:50

14th Intel® Core™ i9 14900KF CPU 3.2GHz@6.0ghz, Memory 2x24GB GSkill Trident Z Trident Z5 7200 mhz DDR5 Ram,4,000 GB MSI M480 PRO 4TB , Motherboard eVga 690 Dark , Operating System Windows 11 Pro 64-Bit, Msi Suprim X24G 4090, Monitor Acer CG437K, Logitech G910, Razer Lancehead Tournament Edition, Thermaltake View 91 RGB plus, eVga 1600w P2 PSU, Custom watercooling.   Speed Way Score 11,055 points. https://www.3dmark.com/sw/1112818

|

kram36

The Destroyer

- Total Posts : 21477

- Reward points : 0

- Joined: 2009/10/27 19:00:58

- Location: United States

- Status: offline

- Ribbons : 72

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 15:55:23

(permalink)

badboy64

So the memory temp reaches 100C only when mining or does it do this for benchmarking and gaming also?

I doubt the numbers are true, but gaming would make the memory run hotter and cause the memory to throttle down.

|

badboy64

SSC Member

- Total Posts : 921

- Reward points : 0

- Joined: 2006/06/05 15:11:40

- Location: Fall River USA

- Status: offline

- Ribbons : 0

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/29 16:31:55

(permalink)

kram36

badboy64

So the memory temp reaches 100C only when mining or does it do this for benchmarking and gaming also?

I doubt the numbers are true, but gaming would make the memory run hotter and cause the memory to throttle down.

I completely agree with you. My temp readings with Precision X1 and AIDA64 are the same as with HWInfo64. My memory temps never go above 72C when overclocked 500+ MHz when gaming or benchmarking.

post edited by badboy64 - 2021/01/29 16:35:17

14th Intel® Core™ i9 14900KF CPU 3.2GHz@6.0ghz, Memory 2x24GB GSkill Trident Z Trident Z5 7200 mhz DDR5 Ram,4,000 GB MSI M480 PRO 4TB , Motherboard eVga 690 Dark , Operating System Windows 11 Pro 64-Bit, Msi Suprim X24G 4090, Monitor Acer CG437K, Logitech G910, Razer Lancehead Tournament Edition, Thermaltake View 91 RGB plus, eVga 1600w P2 PSU, Custom watercooling.   Speed Way Score 11,055 points. https://www.3dmark.com/sw/1112818

|

veganfanatic

CLASSIFIED Member

- Total Posts : 2119

- Reward points : 0

- Joined: 2015/06/20 18:08:41

- Status: offline

- Ribbons : 1

Re: GDDR6X in 3080 and 3090 Hits 110C While Mining Ethereum

2021/01/30 17:33:42

(permalink)

Looking at kram36's screenshots vs. mine, I use a 4K panel, which is wider than this forum template.

Corsair Obsidian 750D Airflow Edition + Corsair AX1600i PSU. My desktop uses the ThinkVision 31.5 inch P32p-20 Monitor. My sound system is the Edifier B1700BT.

|