atfrico

Omnipotent Enthusiast

- Total Posts : 12753

- Reward points : 0

- Joined: 2008/05/20 16:16:06

- Location: <--Dip, Dip, Potato Chip!-->

- Status: offline

- Ribbons : 25

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/10 18:08:13


You can say AMD GPUs are slower than Nvidia's, but the fact of the matter is that old AMD GPUs can run ray tracing just fine. Demo or not, it is a proven fact. Oh, I forgot: AMD was able to get Nvidia to lower the price of the RTX GPUs and optimize them to run a bit faster. So please continue ranting about AMD. What the Red Team is doing is putting some sugar and sprinkles on top of that salty wound for those who paid a premium for falsely advertised tech.

Those who abuse power are nothing but scumbags! The challenge of power is how to use it and not abuse it. The abuse of power that seems to create the most unhappiness is when a person uses personal power to get ahead without regard to the welfare of others; people are obsessed with it. You can take a nice person and turn them into a slob, into an insane being, craving power, destroying anything that stands in their way. Affiliate Code: 3T15O1S07G

|

veganfanatic

CLASSIFIED Member

- Total Posts : 2119

- Reward points : 0

- Joined: 2015/06/20 18:08:41

- Status: offline

- Ribbons : 1

2019/07/10 18:38:27

I have used both Radeon and GeForce cards galore. I use fact and not supposition, and I am more careful with games that use one vendor's SDK as opposed to a more conventional game engine.

An example is NVIDIA HairWorks, which runs poorly on Radeon cards, so I refuse to use such games for comparisons.

Corsair Obsidian 750D Airflow Edition + Corsair AX1600i PSU. My desktop uses the ThinkVision 31.5 inch P32p-20 Monitor. My sound system is the Edifier B1700BT.

|

lehpron

Regular Guy

- Total Posts : 16254

- Reward points : 0

- Joined: 2006/05/18 15:22:06

- Status: offline

- Ribbons : 191

2019/07/10 20:55:38

seth89

I’m not buying the “Nvidia cards are future proof and AMD cards are not.”

Navi and Zen are in Xbox and PS5... aren't all games really built around that?

Not all PC games are console ports. If all you play is RTS, like the Anno series that never shows up on any console, the port conspiracy loses credibility. Besides, a game is independent of quality, meaning consoles lock in the quality level and PC does not. This port conspiracy everyone believes in is just a myth. I mean honestly, if you want your games to never be console ports, stay away from those genres (any racing or any first-person, etc.). If you can't help it, that's not the console's problem.

|

GTXJackBauer

Omnipotent Enthusiast

- Total Posts : 10323

- Reward points : 0

- Joined: 2010/04/19 22:23:25

- Location: (EVGA Discount) Associate Code : LMD3DNZM9LGK8GJ

- Status: offline

- Ribbons : 48

2019/07/10 21:26:06

Use this Associate Code at your checkouts or follow these instructions for Up to 10% OFF on all your EVGA purchases: LMD3DNZM9LGK8GJ

|

-Jinky-

iCX Member

- Total Posts : 309

- Reward points : 0

- Joined: 2009/07/12 15:03:14

- Status: offline

- Ribbons : 0

2019/07/11 05:41:24

It comes in beer?!?!?! Sign me up pls

|

Xavier Zepherious

CLASSIFIED ULTRA Member

- Total Posts : 6746

- Reward points : 0

- Joined: 2010/07/04 12:53:39

- Location: Medicine Hat ,Alberta, Canada

- Status: offline

- Ribbons : 16

2019/07/11 14:55:59

atfrico

You can say AMD GPUs are slower than Nvidia's, but the fact of the matter is that old AMD GPUs can run ray tracing just fine. Demo or not, it is a proven fact. Oh, I forgot: AMD was able to get Nvidia to lower the price of the RTX GPUs and optimize them to run a bit faster.

So please continue ranting about AMD. What the Red Team is doing is putting some sugar and sprinkles on top of that salty wound for those who paid a premium for falsely advertised tech.

First, the AMD GPU does NO RAY TRACING - it is done with either an AMD CPU or an Intel CPU.

The GPU does rasterization, and the ray is computed on the CPU and sent back to the GPU.

However, since you have to complete the scene using DLSS with NVIDIA or extra compute power, the scene is delayed until it can be fully rendered.

This is the BIG hit - it takes time rendering this way.

Also, NVIDIA uses DLSS to denoise. From what I have seen, denoising ray tracing is pretty important right now. Still, I am confident AMD could develop Tensor cores should they desire (it is just matrices).

[Windows October 2018 Update] The Fallback Layer emulation runtime works with the final DXR API in Windows October 2018 update. The emulation feature proved useful during the experimental phase of DXR design. But as of the first shipping release of DXR, the plan is to stop maintaining this codebase. The cost/benefit is not justified and further, over time as more native DXR support comes online, the value of emulation will diminish further. That said, the code functionality that was implemented is left in place for any further external contributions or if a strong justification crops up to resurrect the feature. We welcome external pull requests on extending or improving the Fallback Layer codebase.

So everything else that DOESN'T HAVE RT CORES or isn't RT capable has to use a CPU implementation.

If AMD used GPU power, it would take an even bigger hit to its rendering pipeline, which is why AMD has designed and patented a next-gen solution.

So AMD will have a part-hardware, part-software implementation of RT - not as fast, but it will do.

What AMD also has to add in the next gen is AI - denoising is important, otherwise they are going to take a hit on performance.

CPU cores are good at doing RT, but you need a lot of them, just like NVIDIA RT cores.

post edited by Xavier Zepherious - 2019/07/11 15:02:18

|

atfrico

2019/07/11 17:32:40

GTXJackBauer

atfrico

You can say AMD GPUs are slower than Nvidia's, but the fact of the matter is that old AMD GPUs can run ray tracing just fine. Demo or not, it is a proven fact. Oh, I forgot: AMD was able to get Nvidia to lower the price of the RTX GPUs and optimize them to run a bit faster.

So please continue ranting about AMD. What the Red Team is doing is putting some sugar and sprinkles on top of that salty wound for those who paid a premium for falsely advertised tech.

Are you drinking that fanboy beer again?

So you've been drinking it this long and did not bother to share it? How dare you? I guess you have the platinum membership on this by now; by all means, sign me up, buddy. Nvidia's GPU/CPU hybrid clock is ticking.

Xavier Zepherious

atfrico

You can say AMD GPUs are slower than Nvidia's, but the fact of the matter is that old AMD GPUs can run ray tracing just fine. Demo or not, it is a proven fact. Oh, I forgot: AMD was able to get Nvidia to lower the price of the RTX GPUs and optimize them to run a bit faster.

So please continue ranting about AMD. What the Red Team is doing is putting some sugar and sprinkles on top of that salty wound for those who paid a premium for falsely advertised tech.

First, the AMD GPU does NO RAY TRACING - it is done with either an AMD CPU or an Intel CPU.

The GPU does rasterization, and the ray is computed on the CPU and sent back to the GPU.

However, since you have to complete the scene using DLSS with NVIDIA or extra compute power, the scene is delayed until it can be fully rendered.

This is the BIG hit - it takes time rendering this way.

Also, NVIDIA uses DLSS to denoise. From what I have seen, denoising ray tracing is pretty important right now. Still, I am confident AMD could develop Tensor cores should they desire (it is just matrices).

[Windows October 2018 Update] The Fallback Layer emulation runtime works with the final DXR API in Windows October 2018 update. The emulation feature proved useful during the experimental phase of DXR design. But as of the first shipping release of DXR, the plan is to stop maintaining this codebase. The cost/benefit is not justified and further, over time as more native DXR support comes online, the value of emulation will diminish further. That said, the code functionality that was implemented is left in place for any further external contributions or if a strong justification crops up to resurrect the feature. We welcome external pull requests on extending or improving the Fallback Layer codebase.

So everything else that DOESN'T HAVE RT CORES or isn't RT capable has to use a CPU implementation.

If AMD used GPU power, it would take an even bigger hit to its rendering pipeline, which is why AMD has designed and patented a next-gen solution.

So AMD will have a part-hardware, part-software implementation of RT - not as fast, but it will do.

What AMD also has to add in the next gen is AI - denoising is important, otherwise they are going to take a hit on performance.

CPU cores are good at doing RT, but you need a lot of them, just like NVIDIA RT cores.

My gosh, I did not know that! Thanks for the clarification, which just points out the Nvidia features that do not justify the price tag Nvidia imposed on the RTX cards at launch. But the prices are different now, since most reviewers and customers noticed that HBM2 memory and the 7nm GPU cost more than the GDDR6 memory and the 12nm chip that RTX cards have.


|

GTXJackBauer

2019/07/11 19:53:23

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21171

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

2019/07/11 20:09:54

Xavier Zepherious

First, the AMD GPU does NO RAY TRACING - it is done with either an AMD CPU or an Intel CPU.

The GPU does rasterization, and the ray is computed on the CPU and sent back to the GPU.

There is a Crytek video which says that is wrong. ;) It used GPU compute to ray trace using an AMD card.

Microsoft DirectX 12 documentation also says that GPU compute can be used for ray tracing. NVIDIA's 1000 series uses GPU compute to ray trace, right? Or is that not correct?

https://devblogs.microsof...ft-directx-raytracing/

I am really confused by your claims. Can you please provide some sources and links?

post edited by ty_ger07 - 2019/07/11 20:35:07

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

atfrico

2019/07/11 20:49:35

GTXJackBauer

atfrico

My gosh, I did not know that! Thanks for the clarification, which just points out the Nvidia features that do not justify the price tag Nvidia imposed on the RTX cards at launch. But the prices are different now, since most reviewers and customers noticed that HBM2 memory and the 7nm GPU cost more than the GDDR6 memory and the 12nm chip that RTX cards have.

No, that's not what he said. It's saying that hardware RT is the way to go. AI cores are great as well, as a backup for a boost in performance when needed. This is great news for Nvidia and not so much for AMD, BUT if AMD CPUs start packing a ton more cores, they might catch up. (See what I did there.) Look how many RT CORES there are on a 2080 Ti, then get back to me.

P.S. - I'll buy you some of that beer once you run out.

lol....RT cores, RT hardware? You made my day.

Ray tracing can be run on AMD cards using the shading cores, grasshopper. If you don't believe me, grab that fanboy beer, pop a squat on your couch and have a wonderful read with this article: https://www.pcworld.com/article/3401598/amd-releases-two-affordable-navi-based-radeon-rx-cards-and-lays-out-ray-tracing-plans.html

Like Brad said in another thread, "the distortion field from Nvidia" is so strong that it is making its followers believe ray tracing is a unique type of software that requires specific, unique hardware to run it. It is not. Click Tyger's link; that pretty much sums it all up. Microsoft is laying out the truth about ray tracing, what it is used for and what hardware can run it.

You need to educate yourself first, then post a valid argumentative observation about ray tracing. It is like hearing mumble rap: Nvidia fans spit so many words that you are not even able to understand them or make sense of what they are talking about.

It is OK to defend your bad decision in buying an overpriced GPU, because in reality it is not your fault. You were lured into buying and believing in a lie and falsely advertised technology. RTX GPUs have more RT cores (shader cores) than AMD? Yes, they do, and that is the reason why the GPU is faster, not by much really, but let's be real about the tech itself. Take the AMD GPU memory and the chip and compare them with the RTX cards: which tech is more expensive to get?

Can I please have one of those beers now? That way I can prove which company I am a fanboy of and rooting for....... It is called the best bang for my money!

post edited by atfrico - 2019/07/11 21:06:50


|

GTXJackBauer

2019/07/11 22:20:54

atfrico

lol....RT cores, RT hardware? You made my day.

Ray tracing can be run on AMD cards using the shading cores, grasshopper. If you don't believe me, grab that fanboy beer, pop a squat on your couch and have a wonderful read with this article: https://www.pcworld.com/article/3401598/amd-releases-two-affordable-navi-based-radeon-rx-cards-and-lays-out-ray-tracing-plans.html

Like Brad said in another thread, "the distortion field from Nvidia" is so strong that it is making its followers believe ray tracing is a unique type of software that requires specific, unique hardware to run it. It is not. Click Tyger's link; that pretty much sums it all up. Microsoft is laying out the truth about ray tracing, what it is used for and what hardware can run it.

You need to educate yourself first, then post a valid argumentative observation about ray tracing. It is like hearing mumble rap: Nvidia fans spit so many words that you are not even able to understand them or make sense of what they are talking about.

It is OK to defend your bad decision in buying an overpriced GPU, because in reality it is not your fault. You were lured into buying and believing in a lie and falsely advertised technology. RTX GPUs have more RT cores (shader cores) than AMD? Yes, they do, and that is the reason why the GPU is faster, not by much really, but let's be real about the tech itself. Take the AMD GPU memory and the chip and compare them with the RTX cards: which tech is more expensive to get?

Can I please have one of those beers now? That way I can prove which company I am a fanboy of and rooting for....... It is called the best bang for my money!

Again, you're misinterpreting what's being said here. Let me know when you're done with that keg and I'll buy you a six pack to lessen the effects.


|

atfrico

2019/07/12 17:03:19

GTXJackBauer

Again, you're misinterpreting what's being said here.

Let me know when you're done with that keg and I'll buy you a six pack to lessen the effects.

Nah, I am just pulling a Kram. I will finish the keg the moment you get off that distortion field and denial programming from Nvidia.


|

seth89

CLASSIFIED ULTRA Member

- Total Posts : 5290

- Reward points : 0

- Joined: 2007/11/13 11:26:18

- Status: offline

- Ribbons : 14

2019/07/12 17:47:07

Xavier Zepherious

atfrico

You can say AMD GPUs are slower than Nvidia's, but the fact of the matter is that old AMD GPUs can run ray tracing just fine. Demo or not, it is a proven fact. Oh, I forgot: AMD was able to get Nvidia to lower the price of the RTX GPUs and optimize them to run a bit faster.

So please continue ranting about AMD. What the Red Team is doing is putting some sugar and sprinkles on top of that salty wound for those who paid a premium for falsely advertised tech.

First, the AMD GPU does NO RAY TRACING - it is done with either an AMD CPU or an Intel CPU.

The GPU does rasterization, and the ray is computed on the CPU and sent back to the GPU.

However, since you have to complete the scene using DLSS with NVIDIA or extra compute power, the scene is delayed until it can be fully rendered.

This is the BIG hit - it takes time rendering this way.

Also, NVIDIA uses DLSS to denoise. From what I have seen, denoising ray tracing is pretty important right now. Still, I am confident AMD could develop Tensor cores should they desire (it is just matrices).

[Windows October 2018 Update] The Fallback Layer emulation runtime works with the final DXR API in Windows October 2018 update. The emulation feature proved useful during the experimental phase of DXR design. But as of the first shipping release of DXR, the plan is to stop maintaining this codebase. The cost/benefit is not justified and further, over time as more native DXR support comes online, the value of emulation will diminish further. That said, the code functionality that was implemented is left in place for any further external contributions or if a strong justification crops up to resurrect the feature. We welcome external pull requests on extending or improving the Fallback Layer codebase.

So everything else that DOESN'T HAVE RT CORES or isn't RT capable has to use a CPU implementation.

If AMD used GPU power, it would take an even bigger hit to its rendering pipeline, which is why AMD has designed and patented a next-gen solution.

So AMD will have a part-hardware, part-software implementation of RT - not as fast, but it will do.

What AMD also has to add in the next gen is AI - denoising is important, otherwise they are going to take a hit on performance.

CPU cores are good at doing RT, but you need a lot of them, just like NVIDIA RT cores.

Radeon Image Sharpening

https://www.youtube.com/watch?v=7MLr1nijHIo

I think AMD has a version of DLSS that is applied to all games without special coding.

Would this be considered denoising?

|

Xavier Zepherious

2019/07/13 11:05:51

ty_ger07

Xavier Zepherious

First, the AMD GPU does NO RAY TRACING - it is done with either an AMD CPU or an Intel CPU.

The GPU does rasterization, and the ray is computed on the CPU and sent back to the GPU.

There is a Crytek video which says that is wrong. ;) It used GPU compute to ray trace using an AMD card.

Microsoft DirectX 12 documentation also says that GPU compute can be used for ray tracing. NVIDIA's 1000 series uses GPU compute to ray trace, right? Or is that not correct?

https://devblogs.microsof...ft-directx-raytracing/

I am really confused by your claims. Can you please provide some sources and links?

Read that paper - nowhere does it say it used GPU compute for all of it or any of it.

I've seen another that explained it better: it leaves a callback (that is, a developer callback to implement the RT pipeline - that is how everything is done in C++, using callbacks); otherwise RT is done by the CPU. Nvidia supplies a callback for RTX cards to use a specific series of driver code. (They have done more by providing a callback and use of CUDA for older cards, but not farther back than the 1000 series, because the hit would be even bigger on those cards - it would be just as easy to use the CPU and enable RT.)

I think someone has tried cards older than the 1000 series, but the hit is so great that it is pretty much pointless to try, because performance is quite bad.

This means that unless the graphics card manufacturer provides new code to use CUDA cores, DX12 defaults to CPU.

Or unless you are some developer and can write new, specific CUDA code for an Nvidia GPU, or write new AMD callback code (and write code so it could work on AMD with a big hit) - basically....you don't do this. (And coding doesn't mean it uses any or all of the AMD GPU - they could have used the CPU. And remember, extra software to interpret and reconfigure a stream processor takes up performance on both the software and the hardware side.)

Also, any use of GPU compute to do RT ties up a core that would be doing normal shader work, so you take a big hit using GPU compute because it has to do RT bounding boxes (BT) rather than shader work. That slows up the work, and that work has to happen prior to any shader work for each cell.

Those bounding boxes (BT) are better done on a general-purpose CPU (exactly what they say) or on specific hardware designed for it.

And with disposable CPU cores coming with new chips - like, why do you need more than 4 to game??? Well, with 2x or 3x or 4x the cores sitting idle, maybe RT would be a good thing for them to do - maybe denoising too.
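For anyone wondering what the "bounding box" work in this post looks like in practice, here is a minimal Python sketch of a ray versus axis-aligned box test (the common "slab" method). It is only an illustration: the function name `ray_hits_aabb` and its arguments are made up for this example, and it is not taken from DXR, any driver, or any engine. It says nothing about whether the test should run on a CPU, on shader cores, or on dedicated hardware; it only shows the kind of arithmetic being argued about.

```python
import math


def ray_hits_aabb(origin, direction, box_min, box_max):
    """Slab test: True if the ray origin + t * direction (t >= 0) touches the box.

    All arguments are 3-element sequences of floats (hypothetical layout,
    chosen for this sketch only).
    """
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it can only hit
            # if it already starts between them.
            if o < lo or o > hi:
                return False
            continue
        # Distances along the ray to the two planes of this slab.
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval in which the ray is inside every slab so far.
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True


if __name__ == "__main__":
    box = ((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
    print(ray_hits_aabb((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), *box))   # ray aimed at the box
    print(ray_hits_aabb((0.0, 0.0, -5.0), (0.0, 1.0, 0.0), *box))   # ray passing beside it
```

A real ray tracer runs a test like this against a hierarchy of nested boxes for every ray, which is why the cost adds up so quickly whichever processor ends up doing it.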

post edited by Xavier Zepherious - 2019/07/13 11:23:21

|

Xavier Zepherious

2019/07/13 11:19:37

seth89

Radeon Image Sharpening

https://www.youtube.com/watch?v=7MLr1nijHIo

I think AMD has a version of DLSS that is applied to all games without special coding.

Would this be considered denoising?

No, they are not the same - they use different algorithms and accomplish different goals.

Sharpening usually works on edges. Denoising usually replaces noise (or bad cells between known good ones; this generally results in a bit of blurring, because you are approximating the color between 2 or 3 points).

With AI it is a best guess, with lots of pics in its database to look at, since the scene has been rendered too many times to count.
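To show the difference in a few lines, here is a toy Python sketch on a 1D row of pixel values. It assumes the simplest possible stand-ins: a 3-tap averaging filter for "denoising" and an unsharp mask for "sharpening". The function names are invented for this example, and neither is the algorithm AMD or NVIDIA actually ships; the point is only that the two operations pull in opposite directions.

```python
def box_blur(signal):
    """3-tap mean filter with clamped edges: a very crude denoiser."""
    n = len(signal)
    out = []
    for i in range(n):
        left = signal[max(i - 1, 0)]
        right = signal[min(i + 1, n - 1)]
        out.append((left + signal[i] + right) / 3.0)
    return out


def unsharp_mask(signal, amount=1.0):
    """Sharpen by adding back the difference between the signal and its blur."""
    blurred = box_blur(signal)
    return [s + amount * (s - b) for s, b in zip(signal, blurred)]


if __name__ == "__main__":
    noisy = [0.0, 0.0, 1.0, 0.0, 0.0]        # one stray bright sample on a flat area
    edge = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]    # a clean step edge

    print("denoised spike :", box_blur(noisy))      # spike is spread out and lowered
    print("sharpened spike:", unsharp_mask(noisy))  # spike gets taller, noise gets worse
    print("sharpened edge :", unsharp_mask(edge))   # contrast across the edge increases
```

Averaging knocks the stray sample down at the price of some blur, while the unsharp mask makes the same stray sample stand out more, so a sharpening pass alone would not clean up a noisy ray-traced image.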

|

ty_ger07

2019/07/13 11:23:20

Xavier Zepherious

ty_ger07

Xavier Zepherious

First, the AMD GPU does NO RAY TRACING - it is done with either an AMD CPU or an Intel CPU.

The GPU does rasterization, and the ray is computed on the CPU and sent back to the GPU.

There is a Crytek video which says that is wrong. ;) It used GPU compute to ray trace using an AMD card.

Microsoft DirectX 12 documentation also says that GPU compute can be used for ray tracing. NVIDIA's 1000 series uses GPU compute to ray trace, right? Or is that not correct?

https://devblogs.microsof...ft-directx-raytracing/

I am really confused by your claims. Can you please provide some sources and links?

Read that paper - nowhere does it say it used GPU compute for all of it or any of it.

...

The primary reason for this is that, fundamentally, DXR is a compute-like workload. It does not require complex state such as output merger blend modes or input assembler vertex layouts. A secondary reason, however, is that representing DXR as a compute-like workload is aligned to what we see as the future of graphics, namely that hardware will be increasingly general-purpose, and eventually most fixed-function units will be replaced by HLSL code.

...

A new command list method, DispatchRays, which is the starting point for tracing rays into the scene. This is how the game actually submits DXR workloads to the GPU.

...

It is designed to be adaptable so that in addition to Raytracing, it can eventually be used to create Graphics and Compute pipeline states, as well as any future pipeline designs.

...

over the past few years, computer hardware has become more and more flexible: even with the same TFLOPs, a GPU can do more.

Where does it say CPU compute for all of it or any of it?

Xavier Zepherious

This means that unless the graphics card manufacturer provides new code to use CUDA cores, DX12 defaults to CPU.

Ah ha! There we have it. Now we are back to page one of this thread. Thank you. Now I wonder why you dragged this on so long just to come full circle to where we started. AMD cards are capable of ray tracing. As I said:

ty_ger07

When AMD thinks RT is relevant, it will be able to implement "method 2" using GPU cores with its existing card. Or "method 1" with a future product.

Xavier Zepherious

Or unless you are some developer and can write new ....

Crytek has done something in their engine to ray trace on either NVIDIA or AMD cards using GPU compute. Kind of repeating myself.....


|

Xavier Zepherious

2019/07/13 13:07:39

Crytek provided a really poor implementation of RT on AMD or on ANY GPU.

Yet CRYENGINE’s solution is seemingly able to bypass some (not all) of this hardware requirement. This was achieved utilising the company’s own lighting system, building upon the voxel cone tracing already within the engine. “Before we added mesh ray tracing into the engine, we already had a lighting system that used a lot of ray tracing,” Kajalin says, “also known as voxel cone tracing. That meant we didn’t have to build an entirely new lighting system from scratch.”

https://www.gamasutra.com/view/news/286023/Graphics_Deep_Dive_Cascaded_voxel_cone_tracing_in_The_Tomorrow_Children.php

Voxel cone tracing is NOT RAY TRACING. They are similar, but not the same: voxel cone tracing takes more memory and is slower (software intensive), but it provides a generic way to deal with RT, and you will not get the same visuals.

Voxel provides a means to do some RT on mobile, which was why people complained about the poor RT implementation using this method.

Ray tracing is what you want... it will lead to path tracing, which is the future.

And this is from Crytek:

"We prepare voxel representation of the scene geometry (at run-time, on CPU, asynchronously and incrementally)."

Crytek's version requires CPU+GPU to do RT. Basically, "voxelization is performed on the CPU with raytracing"; rendering is GPU.

|

ty_ger07

2019/07/13 13:18:17

I see lots of words, no links which seem very relevant, no relevant sources cited, and lots of foot-in-mouth syndrome; but nothing changes the fact that AMD's hardware is able to ray trace whenever there is enough motivation.

Where we stand right now regarding the leapfrogging topic:

This AMD card is faster and cheaper than NVIDIA's competing card. The NVIDIA competing card's one notable feature is ray tracing, which it is pretty much not able to pull off at a level of performance which would be considered acceptable for everyday use; it is therefore usually not utilized for anything other than demonstration purposes. The majority of games do not support ray tracing and the majority of consumers do not own a ray tracing capable game. This AMD card is capable of ray tracing, but at what performance level, who knows.

This irrelevant and purely academic discussion is getting pretty tiresome.

And then we are back to the fact that AMD leapfrogged NVIDIA with a card which is faster and cheaper than NVIDIA's competing card.

post edited by ty_ger07 - 2019/07/13 14:00:28


|

Xavier Zepherious

CLASSIFIED ULTRA Member

- Total Posts : 6746

- Reward points : 0

- Joined: 2010/07/04 12:53:39

- Location: Medicine Hat ,Alberta, Canada

- Status: offline

- Ribbons : 16

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/13 14:03:49

(permalink)

Read Crytek's own words on their demo. The demo is voxel cone traced. They added some elements of mesh-based ray tracing where the bullets could not be rendered correctly with voxel tracing, and there they said they only added a small number of them, otherwise performance would suffer under real ray tracing. At that time they added a little particle RT as well. This was a hybrid demo of VOXEL TRACE with some RT elements.

"One of the key factors which helps us to run efficiently on non-RTX hardware is the ability to flexibly and dynamically switch from expensive mesh tracing to low-cost voxel tracing, without any loss in quality. Furthermore, whenever possible we still use all the established techniques like environment probes or SSAO. These two factors help to minimize how much true mesh ray tracing we need and means we can achieve good performance on mainstream GPUs. Another factor that helps us is that our SVOGI system has benefitted from five years of development.

However, RTX will allow the effects to run at a higher resolution. At the moment on GTX 1080, we usually compute reflections and refractions at half-screen resolution. RTX will probably allow full-screen 4k resolution. It will also help us to have more dynamic elements in the scene, whereas currently, we have some limitations. Broadly speaking, RTX will not allow new features in CRYENGINE, but it will enable better performance and more details.

Neon Noir is a research and development project, but ray-traced reflections will come to the engine by the end of the year.

Since the bullet casings are such small objects in the world, the SVOGI voxel grid didn’t pick them up and we had to turn them into dynamic ray-traced objects which is usually reserved for moving objects that are updated each frame. When we released the video the limit for the amount of dynamic objects was a lot stricter than it is now. That’s why only about half the bullets had proper reflections in the video."

https://www.cryengine.com/news/how-we-made-neon-noir-ray-traced-reflections-in-cryengine-and-more

So they even had limitations doing this demo. RTX gives them much more.

Most future games will have ray tracing (don't say there aren't any), and most future top games will have it.

AMD did not leapfrog. They are still slower than Nvidia, and they do not provide full RT. I'm sorry, half-baked RT doesn't cut it when it can't render properly with Crytek, and doing any RT on AMD (currently) will be a true hit, leaving out Crytek's version, which is voxel.

5700 XT does not beat 2070 Super.
5700 does not beat 2060 Super.

AMD cards are priced where they should be: competitively lower, because they are not the best and they don't have RT.
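To illustrate the bullet-casing point in the Crytek quote, here is a minimal sketch of why a coarse voxel grid can miss very small objects. It assumes a simple centre-sampling voxelizer (a voxel counts as occupied only when its centre lies inside an object), which is a simplification for illustration and not CRYENGINE's actual SVOGI code. The grid size, voxel size, and the `crate` and `casing` spheres are all hypothetical.

```python
import math


def voxelize_spheres(spheres, grid_size, voxel_size):
    """Return the set of voxel indices whose centre lies inside any sphere.

    Centre sampling is an assumption made for this sketch; real engine
    voxelizers are more elaborate.
    """
    occupied = set()
    for ix in range(grid_size):
        for iy in range(grid_size):
            for iz in range(grid_size):
                centre = (
                    (ix + 0.5) * voxel_size,
                    (iy + 0.5) * voxel_size,
                    (iz + 0.5) * voxel_size,
                )
                for sx, sy, sz, radius in spheres:
                    if math.dist(centre, (sx, sy, sz)) <= radius:
                        occupied.add((ix, iy, iz))
                        break
    return occupied


# Hypothetical scene: 8x8x8 grid with 0.5 m voxels.
crate = (2.25, 2.25, 2.25, 0.6)   # large object: x, y, z, radius in metres
casing = (1.0, 1.0, 1.0, 0.01)    # 1 cm "bullet casing" sitting between voxel centres

crate_voxels = voxelize_spheres([crate], grid_size=8, voxel_size=0.5)
casing_voxels = voxelize_spheres([casing], grid_size=8, voxel_size=0.5)

print("crate occupies", len(crate_voxels), "voxels")
print("casing occupies", len(casing_voxels), "voxels")
```

Under these assumptions the large object lands in the grid while the tiny one leaves no voxel behind, so anything traced against the grid alone would never see it. That is consistent with the quote's explanation for why the casings had to be handled as separately ray-traced objects.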

post edited by Xavier Zepherious - 2019/07/13 15:07:54

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21171

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/13 16:15:10

(permalink)

Good thing that NVIDIA's competing cards are not competitive, priced higher, and ray tracing is irrelevant. Are we on repeat? Maybe this can carry on to page 3.

AMD's cards are capable of ray tracing, faster, and cheaper.

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

Vlada011

Omnipotent Enthusiast

- Total Posts : 10257

- Reward points : 0

- Joined: 2012/03/25 00:14:05

- Location: Belgrade-Serbia

- Status: offline

- Ribbons : 11

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/13 16:43:50

(permalink)

Now I'm sure that a second-hand GTX 1080 or GTX 1080 Ti is a better investment than AMD Navi. NVIDIA's and AMD's approaches are different. NVIDIA drains the last cent from customers, with a deliberate policy of making new owners of expensive GPUs jealous of the 10-15% stronger uncut models. Then they invest again. AMD's policy is a weird approach where a new series brings no improvement, only a lower price. AMD has done that since the period of Cypress and Cayman: first they launched the ATI 5870, which was a great card, the last under the ATI name. Then suddenly the next year they launched the HD 6970, a hot GPU that was bad for OC but a little cheaper. Is the 5700 XT close to the RTX 2070 at all? I had hoped it would be closer to the RTX 2080.

I believe GPU manufacturers have reached a dead spot where increasing prices any further is not possible without seriously losing customers by the thousands, and now they will try to confuse the market by selling the same performance 3-4 years later for half the original price in a different package.

I remember well how I played games on the first DX11 card ever launched, the ATI 5870. For DX9 it was a killer; for DX11 you needed 3 GPUs. DX11 games only launched a year later, running at 20-30 fps in DX11. The GTX 580 was somewhat better, and then the GTX 780 Ti made playing DX11 games a real pleasure. But it launched 4 years after the first DX11 support. It is the same with RT, and people recognize that now. When I upgrade my platform in a year, then the GPU a year later, and when some GPU shows up that can beat 3-way SLI GTX 1080 Ti, the market will have 20-25 games with nice ray tracing support... then I will think about RT. For now I don't read about it or look at the difference; I can't afford it and they can't deliver playable fps.

post edited by Vlada011 - 2019/07/13 16:53:53

|

Xavier Zepherious

CLASSIFIED ULTRA Member

- Total Posts : 6746

- Reward points : 0

- Joined: 2010/07/04 12:53:39

- Location: Medicine Hat ,Alberta, Canada

- Status: offline

- Ribbons : 16

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/13 18:33:26

(permalink)

- Ark: Survival Evolved

- Assetto Corsa Competizione (September 12, 2018)

- Atomic Heart (2019)

- Battlefield V (October 11, 2018)

- Control (2019)

- Dauntless (Q4 2018)

- In Death

- Enlisted

- Final Fantasy XV

- The Forge Arena

- Fractured Lands

- Hitman 2 (November 13, 2018)

- Justice (October 26, 2018)

- JX3

- Mechwarrior V: Mercenaries (Q1 2019)

- Metro Exodus (February 22, 2019)

- PlayerUnknown’s BattleGrounds

- Remnant from the Ashes (2019)

- Serious Sam 4: Planet Badass (TBA)

- Shadow of the Tomb Raider (September 14, 2018)

- We Happy Few

That's over 20 games, with more to come.

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21171

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/13 18:57:26

(permalink)

1) 20 out of millions of games? 20 out of 10,000 released per year?

2) That's not a list of games currently released and playable with ray tracing support. That's just a list of games which "promise" to support ray tracing at some point.

And that still makes zero difference for how poor the ray tracing support is for the rtx 2060 and 2070 ... the thing which makes this topic, again, completely irrelevant.

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

Xavier Zepherious

CLASSIFIED ULTRA Member

- Total Posts : 6746

- Reward points : 0

- Joined: 2010/07/04 12:53:39

- Location: Medicine Hat ,Alberta, Canada

- Status: offline

- Ribbons : 16

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/13 19:42:51

(permalink)

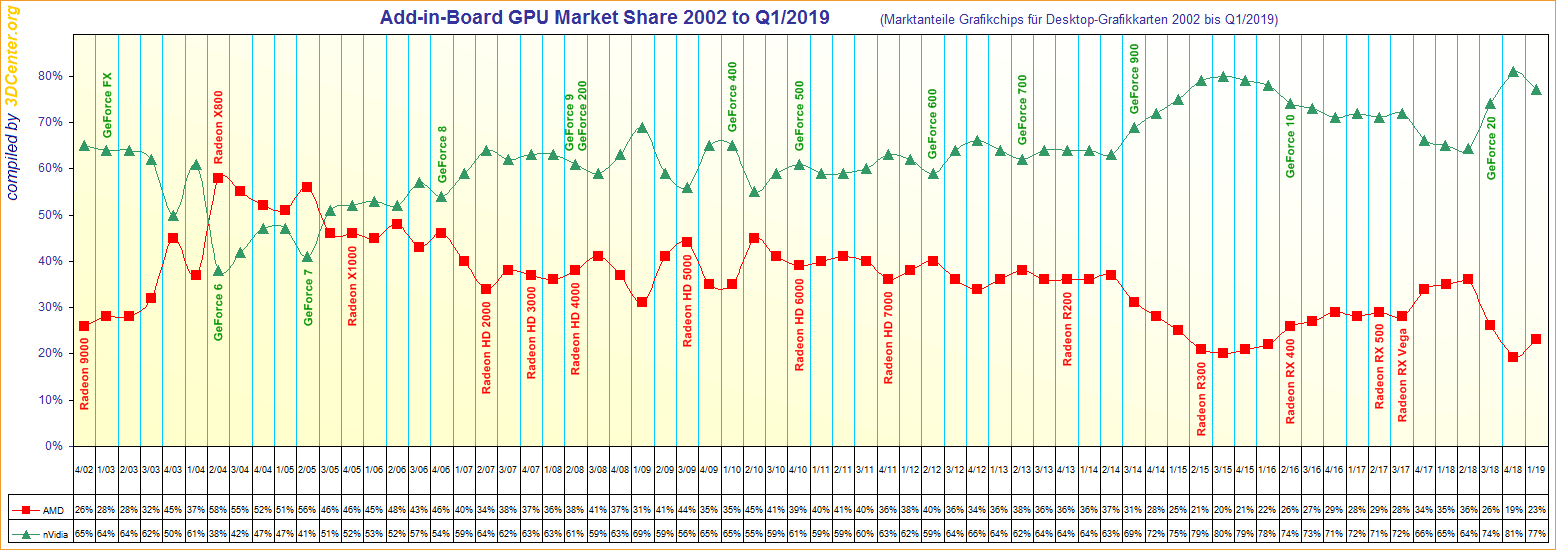

It's you who keeps making it pointless, when AMD cannot beat NVIDIA even at basic GPU performance with a DIE SHRINK. Basically NVIDIA is telling us it can use 5-year-old parts (fab tech) to beat your brand new one (7nm) and sell it for more $$ because they are the current GPU top dog, and they get away with it because AMD can't show up to do proper battle. Show me your best effort or go home.

Benchmarks tell us everything. There is no leapfrog. AMD are behind the 8-ball on this, and they cut into their own margins trying to sell cards at lower prices to gain what??? As far as I can see, NVIDIA grew its share.

Heck, had AMD stuck to GCN and used 7nm, they should have beaten RTX Turing:

"The 7nm process offers 35% to 40% performance gains over 16nm or a >65% power reduction."

Instead what we got was another pile of doowey from AMD.

"NVIDIA's market share rose as gamers started purchasing its GPUs. Its market share rose even though its overall GPU shipments fell 7.6%, as market share is relative to competitors' performances. The increase in its market share shows that NVIDIA did well compared to AMD in the overall weak GPU market."
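The point about share rising while shipments fall is just arithmetic, and a quick sketch makes it concrete. Only the 7.6% drop comes from the quote; the unit counts, the 20% drop for the competitor, and the two-vendor market are made-up assumptions for illustration, not real shipment data.

```python
def market_share(own_units, rival_units):
    """Share of a two-vendor market (a simplification; real markets have more vendors)."""
    return own_units / (own_units + rival_units)


# Hypothetical shipments, in millions of units.
nvidia_before, amd_before = 10.0, 4.0

nvidia_after = nvidia_before * (1 - 0.076)  # the 7.6% drop from the quote
amd_after = amd_before * (1 - 0.20)         # assumed 20% drop, illustration only

share_before = market_share(nvidia_before, amd_before)
share_after = market_share(nvidia_after, amd_after)

print(f"share before: {share_before:.1%}")
print(f"share after:  {share_after:.1%}")
```

With these made-up numbers the share goes up even though fewer cards shipped, because the rival's shipments shrank faster. A rising share on its own therefore says nothing about whether absolute sales grew.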

post edited by Xavier Zepherious - 2019/07/13 19:55:19

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21171

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/13 20:00:02

(permalink)

and they cut into their own margins trying to sell cards at lower prices to gain what?

I don't think that they did. The rumor 3 or 4 months ago was RTX 2070 performance at less than $400. It wasn't until very very late that the rumored price jumped significantly. Then, at the last minute, AMD supposedly "dropped" its prices right back to what the rumor had been from the start. It was all rumor. Don't read into it. It's nice to see NVIDIA worry. History is fine, but it will be way more interesting to see where that chart heads in the next couple of years.

post edited by ty_ger07 - 2019/07/13 20:14:39

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

Viper453

SSC Member

- Total Posts : 630

- Reward points : 0

- Joined: 2012/03/13 17:52:50

- Status: offline

- Ribbons : 1

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/14 17:41:26

(permalink)

AMD did better this time around with the 5700 series, and especially with CPUs. This is the closest they have been since the Fury cards 4 years ago. They didn't really attack the 2080 or 2080 Ti, but not many people buy those cards anyway.

MIS 4090 Trio i9 10900K EVGA 1600 P+ G.SKILL TridentZ Series 3200 mhz cas 14 32gb OS drive 960 pro 2 tb 2x EVO 860 4TB Monitor ROG PQ32UQX / LG C2 "65

|

ty_ger07

Insert Custom Title Here

- Total Posts : 21171

- Reward points : 0

- Joined: 2008/04/10 23:48:15

- Location: traveler

- Status: offline

- Ribbons : 270

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/16 06:03:03

(permalink)

ASRock Z77 • Intel Core i7 3770K • EVGA GTX 1080 • Samsung 850 Pro • Seasonic PRIME 600W Titanium

My EVGA Score: 1546 • Zero Associates Points • I don't shill

|

ARMYguy

FTW Member

- Total Posts : 1050

- Reward points : 0

- Joined: 2005/06/23 12:06:19

- Status: offline

- Ribbons : 0

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/16 07:07:19

(permalink)

I don't know what everyone is going on about. It's been common knowledge forever now that you buy AMD if you want cheaper, worse parts, and you buy NVIDIA if you want premium, best parts. The new AMD GPUs change nothing about this; they are still mid-range and priced accordingly. On the CPU front, AMD does better with the new release, but Intel is still the best CPU if you are gaming. You pay for the "best", even if the best is only so by a percentage. Both camps have charged top $ for the top spot, regardless of the actual performance improvement.

The RTX argument just depends on the person. I personally haven't played around with ray tracing too much yet, although I loved Quake 2 with full RTX; it was cool to see one of my old favorite games remade with that tech. Would I buy my setup just to play it? Heck no, but I would have bought my setup regardless, as it is the fastest performance I can buy currently. I changed from a Titan V just because the 2080 Ti was slightly faster in some games. If AMD came out with something faster than my 2080s I would buy it. Yeah, I know there is an RTX Titan; I just decided to skip the Titan series this time around.

Asus Strix Z790 F - Intel 13700 K - Gigabyte 4090 Windforce - 32gb DDR 5 - 1TB Samsung SSD 850 evo - Windows 10 Pro - 1TB Samsung M.2 860 - Inland 2 TB NVMe - Acer Predator X27

|

seth89

CLASSIFIED ULTRA Member

- Total Posts : 5290

- Reward points : 0

- Joined: 2007/11/13 11:26:18

- Status: offline

- Ribbons : 14

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/18 07:07:59

(permalink)

Xavier Zepherious

seth89

Radeon Image Sharpening

https://www.youtube.com/watch?v=7MLr1nijHIo

I think AMD has a version of DLSS that is applied to all games without special coding.

Would this be considered denoising?

No, they are not the same. They use different algorithms and accomplish different goals.

Sharpening usually works on edges. Denoising usually replaces noise (or bad cells between known good ones); this generally results in a bit of blurring, because you are approximating the color between 2 or 3 points.

With AI it is a best guess, with lots of pics in its database to look at, since it has been rendered too many times to count.

Interesting, maybe I'm wrong but this is some of what I'm looking at.

NVIDIA DLSS: Deep Learning Super Sampling (DLSS) is an NVIDIA RTX technology that uses the power of AI to boost your frame rates in games with graphically-intensive workloads. With DLSS, gamers can use higher resolutions and settings while still maintaining solid frame rates.

AMD RIS: An intelligent contrast-adaptive sharpening algorithm that produces visuals that look crisp and detailed, with virtually no performance impact.

Sounds like they're the same thing, but do it differently.

https://www.techspot.com/...pening-vs-nvidia-dlss/

|

Xavier Zepherious

CLASSIFIED ULTRA Member

- Total Posts : 6746

- Reward points : 0

- Joined: 2010/07/04 12:53:39

- Location: Medicine Hat ,Alberta, Canada

- Status: offline

- Ribbons : 16

Re: AMD Leapfrogs NVIDIA for the First Time in Years

2019/07/18 07:27:30

(permalink)

Not the same thing.

With DLSS, the screen could be half missing and DLSS would fill in the spots, because it has rendered it 1,000 or 100,000 times before and fills in the spots that are missing.

CAS or RIS is edge or contrast sharpening, i.e. it alters the color of areas, making things brighter or darker to enhance edges and the contrast of pixels.
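A toy example may help show why sharpening and denoising pull in opposite directions. This is only a rough sketch on a single row of pixel brightness values. The `sharpen` function is a basic unsharp-mask style filter and `box_denoise` is a plain 3-pixel average; both are stand-ins I picked for illustration, not AMD's actual CAS/RIS algorithm or any real denoiser, and it does not model DLSS at all.

```python
def neighbourhood_mean(row, i):
    """Average of a pixel and its two neighbours, clamping at the row ends."""
    left = row[max(i - 1, 0)]
    right = row[min(i + 1, len(row) - 1)]
    return (left + row[i] + right) / 3


def sharpen(row, amount=0.5):
    """Unsharp-mask style sharpening: push each pixel away from its local mean."""
    return [row[i] + amount * (row[i] - neighbourhood_mean(row, i))
            for i in range(len(row))]


def box_denoise(row):
    """Box-blur style denoising: replace each pixel with its local mean."""
    return [neighbourhood_mean(row, i) for i in range(len(row))]


edge = [10, 10, 10, 50, 50, 50]   # a clean dark-to-bright edge
noisy = [10, 10, 30, 10, 10]      # flat area with one noisy pixel

# Brightness jump across the edge (between index 2 and index 3).
step_original = edge[3] - edge[2]
step_sharpened = sharpen(edge)[3] - sharpen(edge)[2]
step_denoised = box_denoise(edge)[3] - box_denoise(edge)[2]

print("edge step:", step_original, step_sharpened, step_denoised)
print("noisy pixel:", noisy[2], sharpen(noisy)[2], box_denoise(noisy)[2])
```

In this sketch the sharpened edge has a bigger jump than the original and the denoised edge a smaller one, while the single noisy pixel gets amplified by sharpening and pulled back toward its neighbours by denoising. That is the "different goals" point in a nutshell: one exaggerates local contrast, the other smooths it out.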

|