Update 2013/12/18: Added Titan SLI and Titan w/Titan PhysX with Double Precision enabled. Added an FPS line graph showing how bad the Titan + CPU runs really were. Converted all graphs for a much clearer view, with links to larger versions.

So it came up in another thread that a used Titan is a good purchase right now, since its 6 GB of RAM should last for the next several years. After which, it could be used as a PhysX engine. I know, most of us would be like, "What? A $1000 GPU for a PhysX engine?" Well, this got me thinking... Just what exactly are the results of using a beast of a GPU for a PhysX engine? The results are interesting...

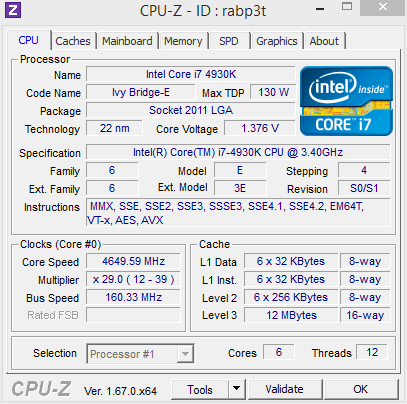

Testbed / Gaming machine / Full-time work PC

Intel Core i7 4930K @ 4.7 GHz, 160 BCLK, HT enabled, 16 GB RAM @ 2133 CAS9 (see sig)

2x EVGA GTX Titan w/Skyn3t V3 1006 MHz BIOS @ 1202 MHz core / 6,800 MHz memory

1x EVGA GTX 460 EE

CPU-Z Validation:

http://valid.canardpc.com/rabp3t

Skyn3t's GTX Titan "V3" BIOS 1006

The Titan BIOS, like almost all other 600 and 700 series BIOSes, limits overclocking, mostly through the Power Target limiter of 106% on the Titan (115% on my 780 Classified, etc). Also, a lot of people report "+115" as an overclock when that's not an overclock: the BIOS will boost to whatever clock it attempts to run at, and will lower the clock under a number of circumstances. This is fine for the average user who does nothing to the card for cooling; but those of us who know how to manage cooling (or have better cooling) need more room to grow.

Enter custom BIOSes you can flash to the card. EVGA has stated that under an RMA, the card must have its original BIOS and be write-locked. Otherwise flashing BIOSes does not void warranty. I really love EVGA.

There are a number of BIOSes available for the Titan now. I've tried out a lot of them on my 780 Classified and Titans, and feel the best support is given by Skyn3t and his brother over on the OCN forums. So, I picked his "V3 1006" BIOS for these tests. Verbatim from the scarce readme file:

Base core clock 1006Mhz

Boost Disabled

Voltage unlocked 1.212v

Default power target 350W with 125% slide = 439w

Max fan speed adjustable to 100%

The base core clock is set waaay higher than the factory 837 MHz base, at 1006 MHz: a nice solid number that all air-cooled Titans can run with the stock fan profile and all. Boost being disabled allows us to run the Titan monster at exactly the same MHz for each and every benchmark. The higher voltage limit allows us to eke out just a little more core speed. And the increased default power target allows us to run many more amps before the PT limit kicks in.

As mentioned above, the Titans are set to an overclock of:

1202 MHz GPU Core*

6,800 MHz Memory

1.212V

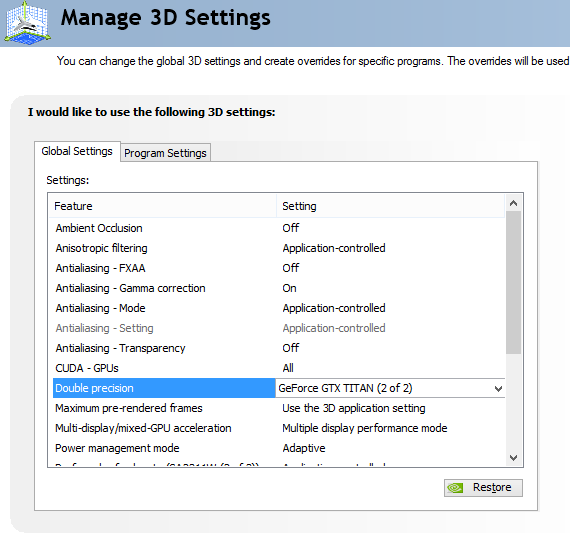

* When Double Precision is enabled on GPU2 for certain tests, the GPU core is lowered 7 steps, or 105 MHz, down to 1097 MHz. See below.

Enabling Double Precision with Custom BIOS

I will admit, this had me a little scared at first because enabling Double Precision lowers the clock rate of the GPU core on the stock BIOS. In my tests, both at the 1006 BIOS base and overclocked, that "lowering" seems to be right at 105 MHz on the dot. In Nvidia's terms that's a reduction of 7 multiplier steps, because the Titan appears to move in 15 MHz steps: 7 * 15 = 105 MHz.

But I am running a custom BIOS made for overclocking, which as a matter of fact has a ~170 MHz overclock (1006 MHz vs. the factory 837 MHz) built into its "base, will not go lower" clock! So for the first few tests, I ran with overclocks disabled. With the 1006 MHz BIOS, the core speed dropped down to 901 MHz. With my 1202 MHz overclock, enabling DP dropped the core speed to 1097 MHz. It made it through a few quick 3D tests, and the GPU2 core temps stayed very low. So I felt comfortable going through the entire PhysX benchmark here.

To recap, when Double Precision (DP) is enabled in the tests below for GPU2, the GPU core speed drops down to 1097 MHz from the overclocked 1202 MHz setting. This is expected, and with a known DP drop of 105 MHz and boost disabled in the BIOS, we have a nice solid baseline to run a benchmark against.
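For anyone who wants to double-check the clock math, here is a minimal Python sketch. It assumes the figures observed in this post (a 15 MHz step and a 7-step drop when DP is enabled); the function and constant names are made up for illustration and are not anything Nvidia publishes.

```python
# Assumptions taken from the observations in this post, not from Nvidia docs:
# one clock step is 15 MHz, and enabling Double Precision costs 7 steps.
STEP_MHZ = 15
DP_STEP_PENALTY = 7


def dp_core_clock(core_mhz: int, steps: int = DP_STEP_PENALTY,
                  step_mhz: int = STEP_MHZ) -> int:
    """Return the expected core clock (MHz) once DP is enabled."""
    return core_mhz - steps * step_mhz


if __name__ == "__main__":
    for label, clock in (("1006 BIOS base", 1006), ("1202 overclock", 1202)):
        print(f"{label}: {clock} MHz -> {dp_core_clock(clock)} MHz with DP")
```

Both results line up with what was seen on the cards: 901 MHz from the 1006 MHz base and 1097 MHz from the 1202 MHz overclock.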

Benchmark Software

I first attempted to use PLAGame Benchmark, but I could never get the 2nd GPU to show any usage throughout the entire benchmark. So, I moved over to my copy of Batman Origins that came with my 780 Classifieds (gave one away here in the Giveaway forums). It's really a great update to the franchise and I am so glad they got rid of the Microsoft account crap with this version compared to the last Batman AC.

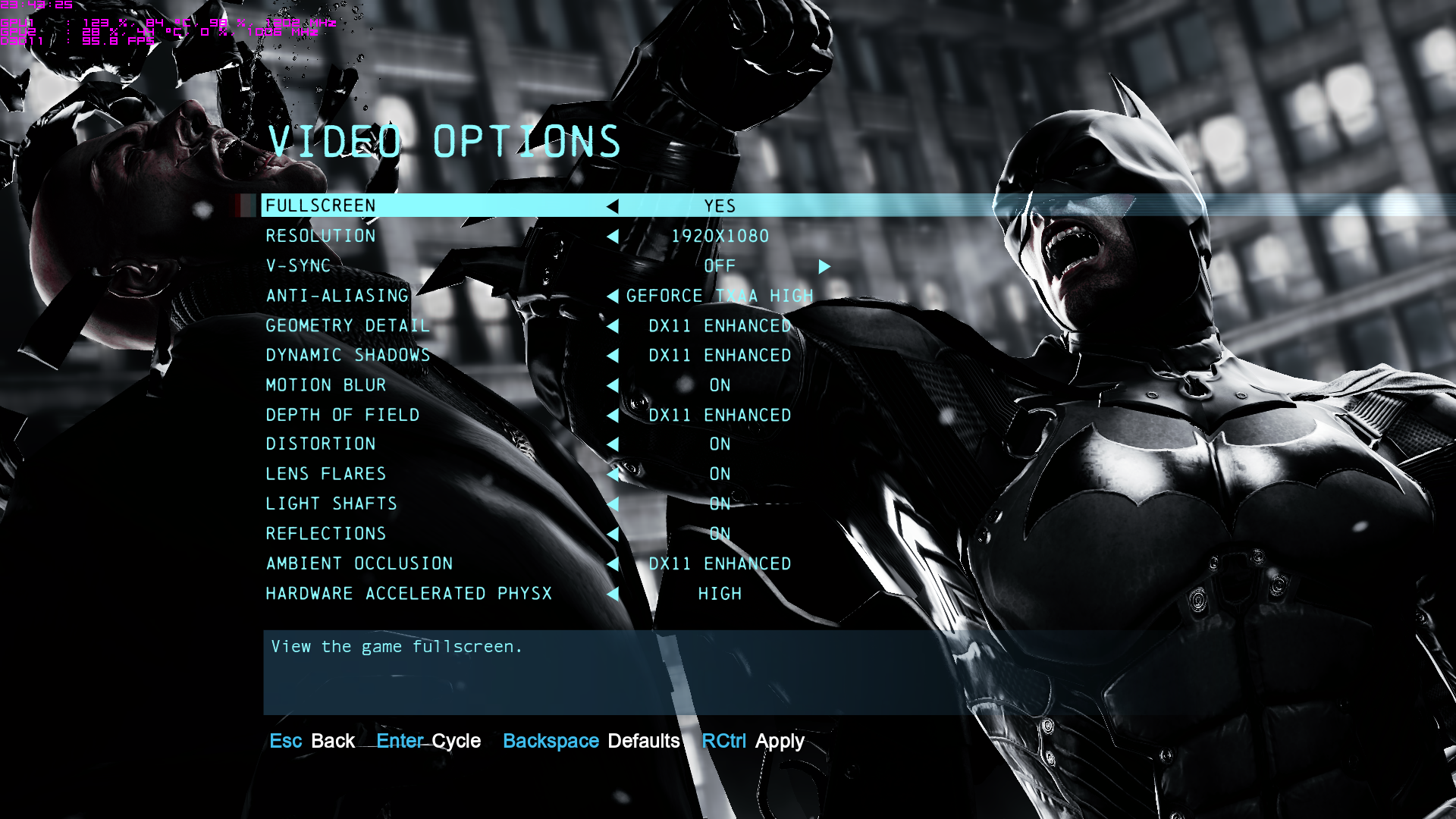

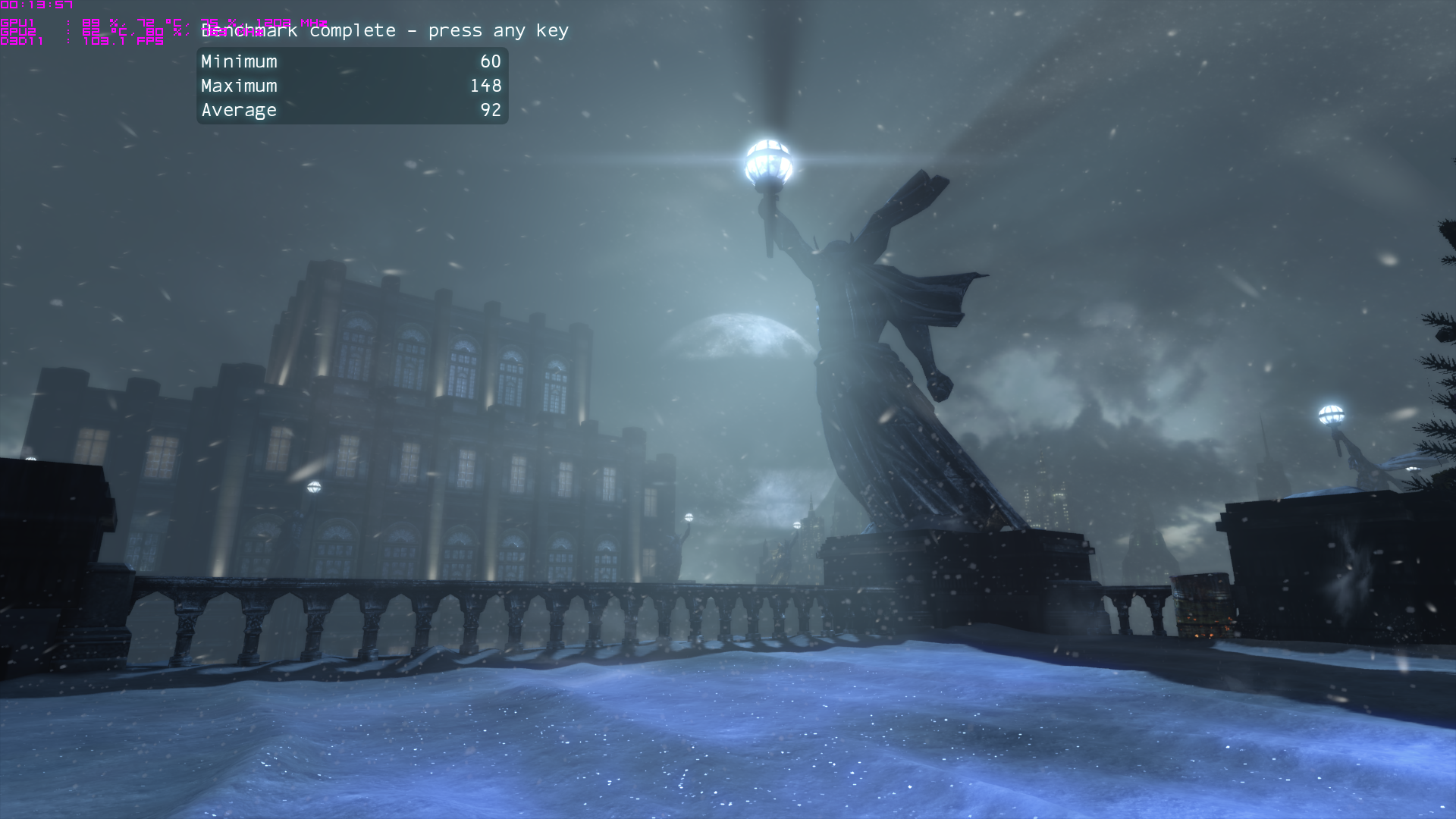

Batman: Arkham Origins

Batman Origins' Graphics Setup

I went ahead and maxed every freakin' setting in the game. Why not? It's a Titan, right?

Resolution: 1920x1080 (could not enable NVIDIA Surround of 5760x1080 on a single GPU with two identical GPUs in the system)

V-Sync: off

Anti-Aliasing: TXAA (aka 4xMSAA + TXAA)

DX11 graphics enabled in every option.

Hardware Accelerated PhysX: HIGH

FYI: TXAA HIGH translates to 4xMSAA + TXAA HIGH. I could have selected 8xMSAA alone. But I felt 4xMSAA + TXAA is as smooth as you'd ever want on a 1080p monitor.

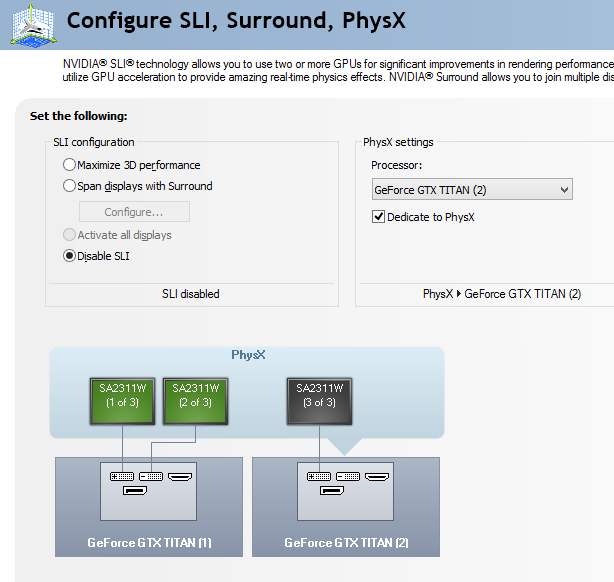

NVIDIA Control Panel Setup

Now this gets annoying. I originally wanted to run at my standard 5760x1080 tri-monitor resolution to really beat down on GPU1 with the additional PhysX over such a wide view. But NVIDIA must have some dumb rule that if you have two identical GPUs in the system, SLI is required for 2D Surround. Nothing I did or hacked would get the 3rd monitor enabled over HDMI (all 3 connected to GPU1) when I had a 2nd Titan in the system as the dedicated PhysX card. Sure, this works perfectly when I stick in my GTX 460, but not with a 2nd Titan. Nvidia really wanted me to enable SLI. I even tried disconnecting the SLI bridge, at which point the Nvidia Control Panel and popups started yelling at me to "connect an SLI bridge." It continued to gray out the 3rd monitor even then.

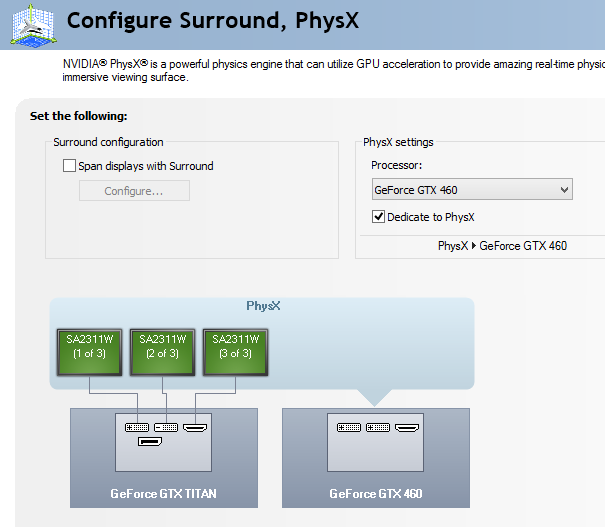

Back on point... I used the Nvidia Control Panel to designate which GPU, or the CPU, acts as the PhysX engine, as shown in the upper-right of the screenshot below.

SLI was always disabled when using the 2x Titans. I used the PhysX Settings to designate either Titan GPU1 or Titan GPU2 as the PhysX engine, with GPU2 checked off as "Dedicated to PhysX."

Alright then, on with the results!

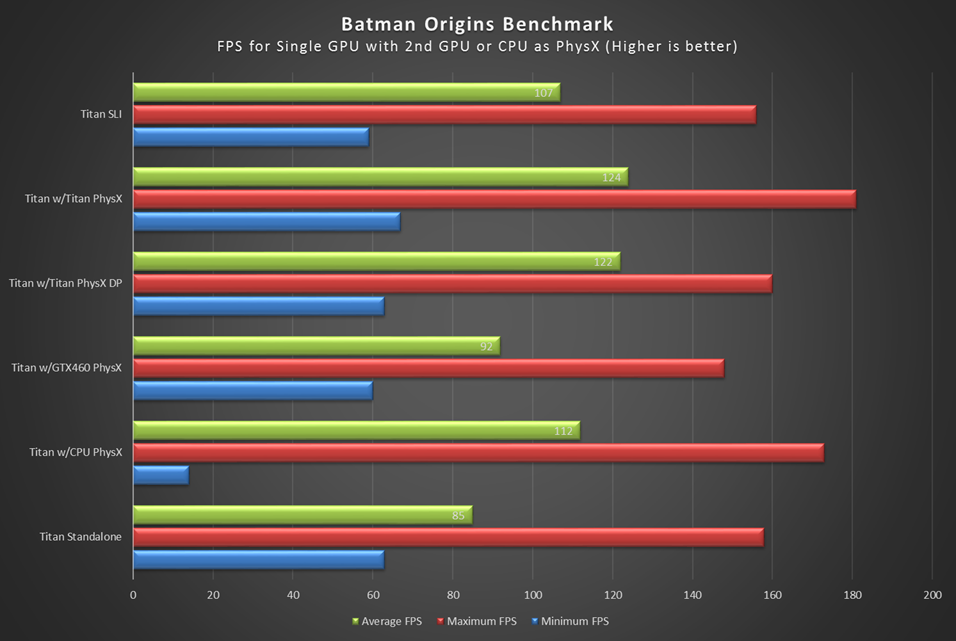

Batman Origins Benchmark FPS Results

2-way Titan SLI

Titan + 2nd Titan set as a Dedicated PhysX (not in SLI)

Titan + 2nd Titan set as Dedicated PhysX, with Double Precision enabled

Titan + GTX460 set as the dedicated PhysX engine

Titan + CPU set as the dedicated PhysX engine

A single Titan set as both the main display, as well as the PhysX engine.

The graphs speak for themselves, and the "Minimum" really is a real-world minimum during the benchmark.

Note that if you have two Titans in SLI and want to play this PhysX game, or maybe another, then disable SLI and designate the 2nd GPU as your PhysX card. Unfortunately I run with Nvidia Surround, so I have to use both Titans in SLI for tri-monitors. But you can bet I am eye-balling my 780 Classified sitting in the closet next to me. Humm...

Double Precision actually hurts, just a little, most likely because of the lower GPU clock rate when enabling DP (it drops 105 MHz, as I noted above).

About that Titan + CPU test: don't be fooled into thinking the CPU is just fine. It's the worst option of all, as you can see in the FPS line graph above where it dips very, very low for two scenes. Yes, the Titan w/CPU designated as the PhysX engine really does suck that bad, crawling at ~15 to ~30 FPS for a good long time. I couldn't believe those numbers, so I ran it three times with a fresh reboot in between. It's dead on every time. Point being: do NOT use the CPU for Nvidia PhysX of any kind! Disable PhysX entirely before even attempting to run it on the CPU. It really stuttered hard at the beginning of most scenes and was not smooth at all.
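If you log your own FPS samples, a rough way to tell a one-frame hiccup from a long crawl like the CPU run is to look for the longest stretch below some threshold. The Python sketch below is only an illustration: the helper names and the 30 FPS threshold are my own picks, and the sample list is made-up data, not the numbers from these runs.

```python
from statistics import mean


def summarize_fps(samples):
    """Return the min / average / max of a list of FPS samples."""
    return {"min": min(samples), "avg": mean(samples), "max": max(samples)}


def longest_dip(samples, threshold):
    """Length of the longest run of consecutive samples below threshold."""
    longest = current = 0
    for fps in samples:
        current = current + 1 if fps < threshold else 0
        longest = max(longest, current)
    return longest


if __name__ == "__main__":
    # Made-up samples for illustration only, one reading per second.
    samples = [60, 58, 25, 20, 18, 55, 28, 61]
    print(summarize_fps(samples))
    print("longest stretch under 30 FPS:", longest_dip(samples, 30), "samples")
```

A run with a decent average but a long stretch under the threshold is exactly the kind of result the bar chart alone can hide.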

I also felt using the Titan + GTX460 was actually a lot smoother than the standalone Titan runs, even though there were higher FPS maxes.

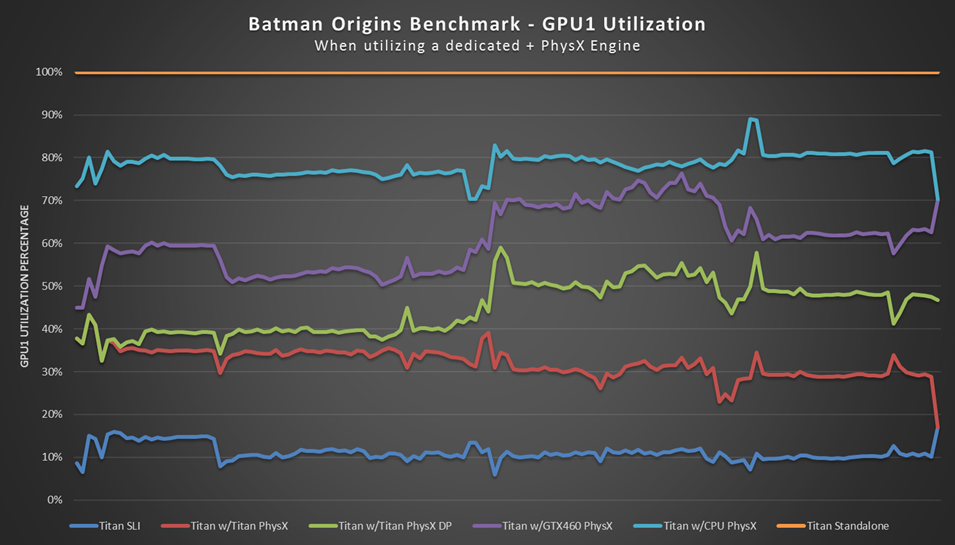

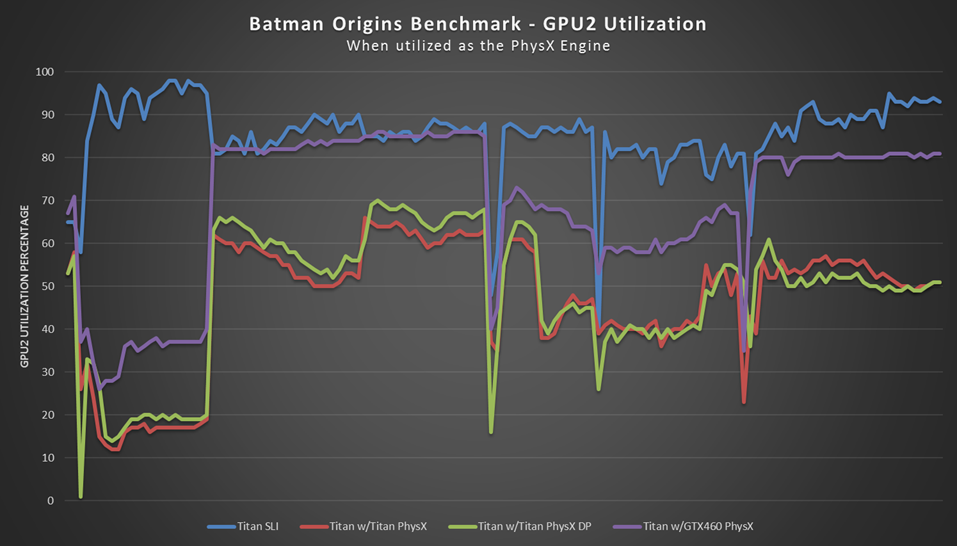

GPU Utilization

First, you can overlay both of these graphs if you like. The timecodes are exactly the same across both graphs.

You'll notice that the Titan Standalone run is pegged at 99% the entire time. To me, this is a clue that PhysX and Graphics Rendering within the game are equally matched at this resolution. Perhaps the game developers optimized all graphics rendering and PhysX rendering to be on a single GPU, as that is how the vast majority of players will use the game (and across consoles).

Note that the main GPU1 utilization actually doesn't peak at 100% when using a dedicated PhysX engine. But, surprisingly, which PhysX engine you use really does affect how much GPU1 gets used.

This may indicate that the graphics rendering is bottlenecked by the PhysX GPU's performance: the faster the PhysX GPU, the less bottlenecked (blocked) the primary GPU. Also note that during the Titan SLI runs, with "Auto" selected as my PhysX engine, Nvidia decided to use my GPU2. This may account for the very big usage difference between GPU1 and GPU2. As a test, I manually selected GPU1 as the PhysX engine with SLI enabled. The graphs could almost be flipped from the Titan SLI run shown above; otherwise it was identical.
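If you want to capture per-GPU utilization yourself while a benchmark runs, one option is to poll `nvidia-smi` and parse its CSV output. This is just a sketch of one way to do it, not how the graphs here were made. It assumes `nvidia-smi` is on your PATH and that your driver reports these fields as plain integers (some cards report "[Not Supported]" instead, which this parser does not handle). The function names and the sample text are made up for illustration.

```python
import csv
import subprocess

# Ask nvidia-smi for index, name, GPU utilization (%) and SM clock (MHz).
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,utilization.gpu,clocks.sm",
    "--format=csv,noheader,nounits",
]


def parse_gpu_rows(text):
    """Turn nvidia-smi CSV text into a list of per-GPU dicts."""
    rows = []
    for row in csv.reader(text.strip().splitlines(), skipinitialspace=True):
        if not row:
            continue  # skip blank lines
        index, name, util, sm_clock = row
        rows.append({
            "index": int(index),
            "name": name,
            "util_pct": int(util),
            "sm_mhz": int(sm_clock),
        })
    return rows


def read_gpus():
    """Run nvidia-smi once and return the parsed rows (needs an Nvidia driver)."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_rows(out)


# Made-up sample text in the same shape, for trying the parser without a GPU.
SAMPLE = "0, GeForce GTX TITAN, 62, 1202\n1, GeForce GTX TITAN, 18, 1097\n"
```

Calling `read_gpus()` once a second during a run and writing the rows to a file gives you data you can graph per GPU, which makes it easy to see whether the primary GPU is waiting on the PhysX card.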

Conclusion

I would summarize by saying to choose your PhysX engine wisely. Don't rely on that old 9800 GTX thinking it's just fine - it actually directly affects your FPS. You want the biggest and baddest "dedicated" GPU you got as your PhysX card. And, Double Precision doesn't matter - at least at this resolution.

I personally can only take this test with a grain of salt, as I only game at 5760x1080 / 6000x1080 resolutions. With Nvidia restricting Surround mode on a single GPU when two identical GPUs are in the system, I was unable to fully utilize the hardware for accurate results.

So yeah, if I had the money, I'd take a 3rd Titan as my PhysX card please.

And donate the GTX 460 for some other purpose...

post edited by eduncan911 - Friday, August 28, 2015 7:34 AM
